Apply by Dec 15, 2025: apply.interfolio.com/175750

#DigitalHumanities #DH

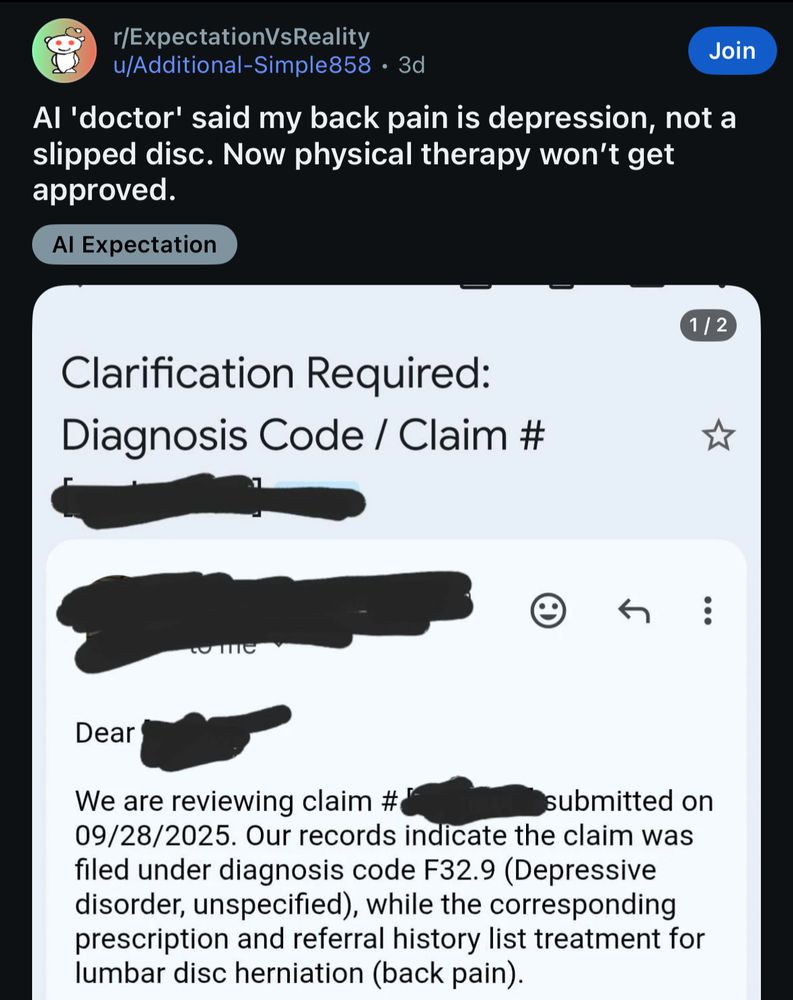

This is also an important Q for contested conditions, e.g. chronic pain, which is increasingly understood as a disease.

#bioethics #stigma

www.sciencedirect.com/science/arti...

www.birmingham.ac.uk/events/objec...

a 🧵 1/n

Drain: arxiv.org/abs/2511.04820

Strain: direct.mit.edu/qss/article/...

Oligopoly: direct.mit.edu/qss/article/...

AQ1: You cite Le Puma et al. in the text, but there is no corresponding reference.

AQ2: You cite La Puma et al. in the references, but there is no corresponding citation.

If we still had human copy editors, they would know exactly what happened here.

www.levernews.com/science-for-...

Applications for the 2025–2026 Humanities Research Awards are due November 15, 2025.

The HRA provides valuable resources for faculty and graduate students pursuing research projects that intersect with the humanities.

Learn more: bit.ly/humanities-research-awards-2025

(radio silence)

March 2024: Query letter sent

(apology followed by radio silence)

April 2025: Paper withdrawn

(acknowledgment of withdrawal)

October 2025: Reviewer reports and rejection received

bci-hub.org/documents/ep...
