- 100B-A6B MoE architecture

- 535 tok/s

- Competitive with Qwen3-30B-A3B

🤔🤔🤔

huggingface.co/inclusionAI/...

Happy to see some European action in the usable model space.

Mistral blog post: mistral.ai/news/mistral-3

Instruction-tuned (non-thinking), Apache 2, European open model keeps up

mistral.ai/news/mistral-3

Fine-tuned via SFT on competitive coding (Codeforces). Thanks @ben_burtenshaw!

To run it locally, click Use this model on @huggingface and select Jan: huggingface.co/bartowski/b...

It achieves:

- AIME24: 69.0

- AIME25: 53.6

- LiveCodeBench: 44.4

2505.08311, cs.CL, 13 May 2025

🆕AM-Thinking-v1: Advancing the Frontier of Reasoning at 32B Scale

Yunjie Ji, Xiaoyu Tian, Sitong Zhao, Haotian Wang, Shuaiting Chen, Yiping Peng, Han Zhao, Xiangang Li

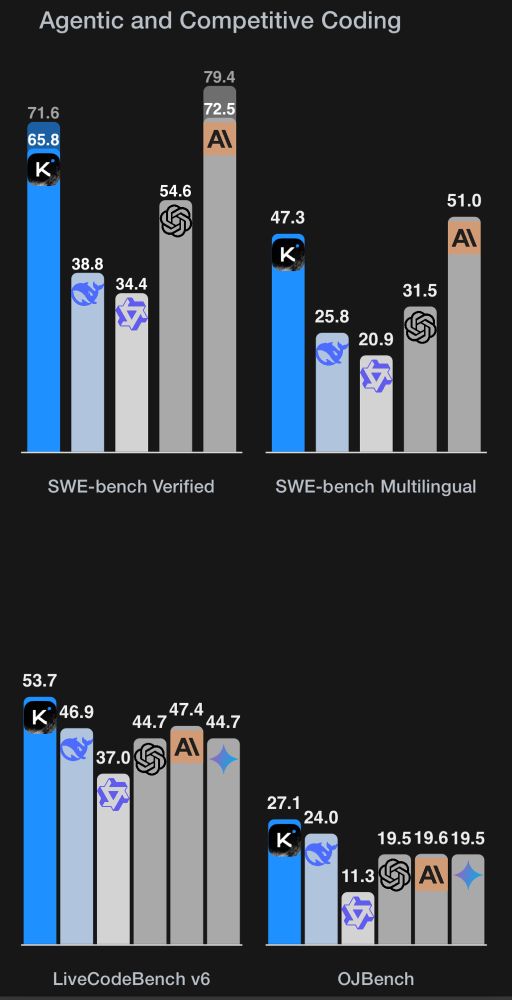

an open weights model that’s competitive with Claude 4 Opus

- 1T, 32B active MoE

- a true agentic model, hitting all the marks on coding & tool use

- no training instability, due to MuonClip optimizer

new frontier lab to watch!

moonshotai.github.io/Kimi-K2/

arxiv.org/pdf/2506.11928

- performs similarly to Qwen3-235B-A22B

- 10% of the training cost of Qwen3-32B

- 10x the throughput of Qwen3-32B

- outperforms Gemini-2.5-flash on some benchmarks

- native MTP for speculative decoding

qwen.ai/blog?id=4074...

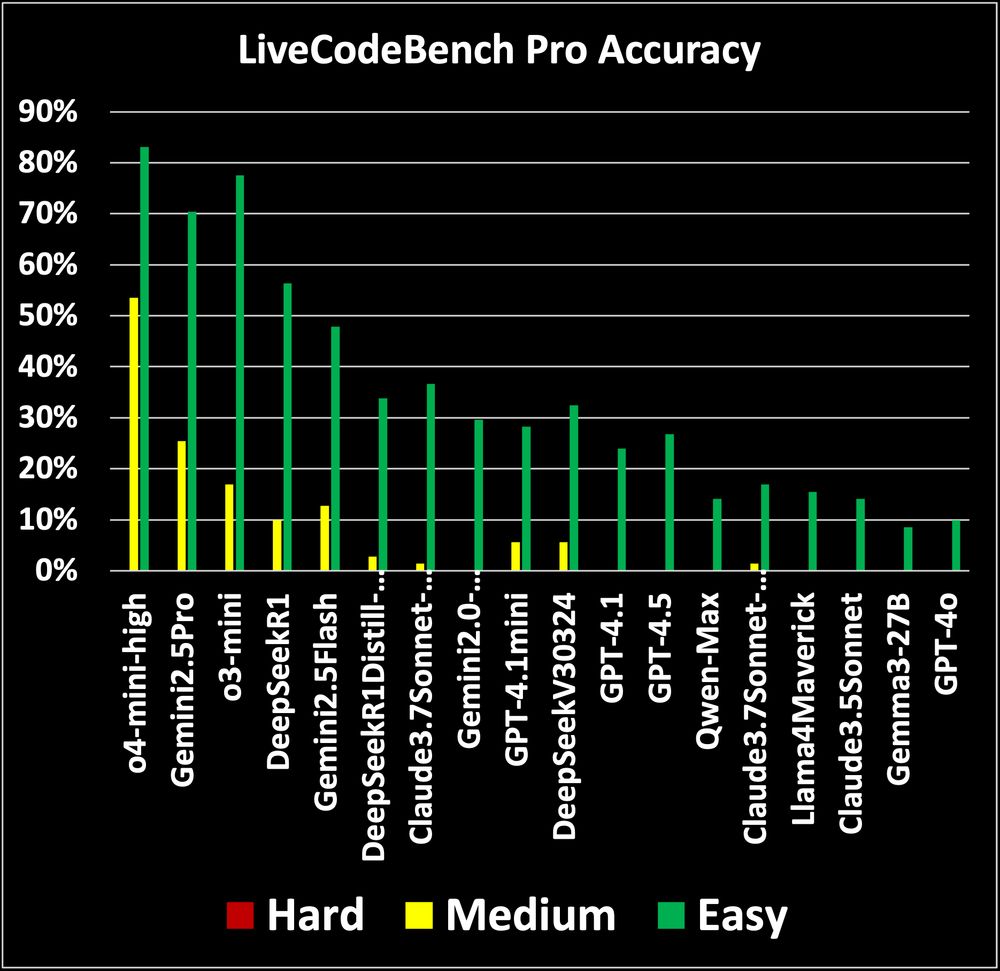

🤖 Matches Gemini 2.5 Pro on reasoning (AAI 60)

💸 ~25× cheaper to run than rivals

⚡ 2.5× faster than GPT-5 API

🏆 #1 on LiveCodeBench

The intelligence–cost frontier just got broken.

#DeepseekR1 #OpenSourceAI #LiveCodeBench #AIbenchmark #LLM #CodeAI #OpenAI #MachineLearning #AICommunity
