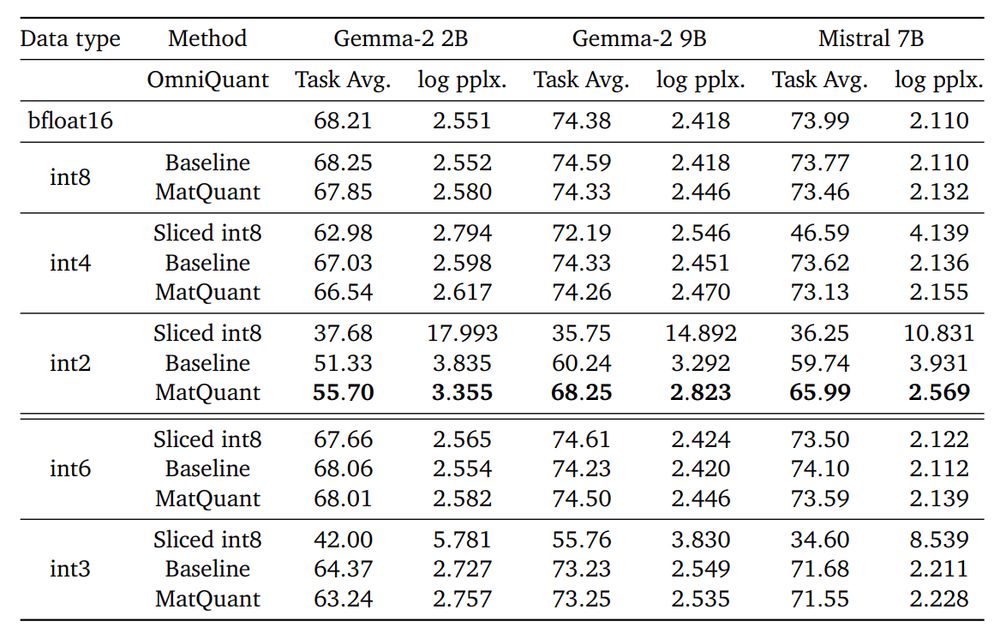

• Keeps int8 and int4 accuracy high. They perform just as well as traditional methods, while using a shared structure.

• Massively improves int2 models with up to 8% accuracy boost.

• Lets you extract int6 and int3 models without separate training.
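The post doesn't say how the int6 and int3 models are extracted. One way such a shared structure could work, purely as an assumption here, is that each lower-bit weight is just the most significant bits of a single int8 code. A minimal NumPy sketch of that idea (the function names are hypothetical, and this is not necessarily the method the post describes):

```python
import numpy as np

def quantize_uint8(w):
    """Min-max quantize a float array to unsigned 8-bit codes."""
    lo, hi = float(w.min()), float(w.max())
    scale = (hi - lo) / 255.0 or 1.0  # avoid a zero scale for constant input
    codes = np.clip(np.round((w - lo) / scale), 0, 255).astype(np.uint8)
    return codes, scale, lo

def slice_bits(codes, bits):
    """Keep only the `bits` most significant bits of each 8-bit code."""
    return codes >> (8 - bits)

def dequantize(sliced, bits, scale, lo):
    """Map sliced codes back to floats; the step grows as bits are dropped."""
    step = scale * (1 << (8 - bits))
    return sliced.astype(np.float32) * step + lo

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=1000).astype(np.float32)
    codes, scale, lo = quantize_uint8(w)
    # Every width below is read out of the same stored int8 codes.
    for bits in (8, 6, 4, 3, 2):
        approx = dequantize(slice_bits(codes, bits), bits, scale, lo)
        print(bits, float(np.abs(w - approx).mean()))
```

Under this assumption, int6 and int3 need no extra training because they are read out of the same stored codes. The sketch says nothing about how accurate those sliced models would be.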

...

moonshotai/Kimi-K2-Thinking

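This repository aims to release the latest open-source thinking model, "Kimi K2 Thinking", and to present its details.

The model is a thinking agent that excels at step-by-step reasoning and dynamic tool calling, and it achieved SOTA on several major benchmarks.

Native INT4 quantization and a 256K context window let it combine efficient inference with advanced reasoning ability.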

- The Snapdragon 6 Gen 4 is manufactured on TSMC's 4 nm process, delivering improved performance and efficiency.

- It features a 29% better GPU and upscaling of 1080p games to 4K.

- The Snapdragon 6 Gen 4 includes new INT4 support for running optimized LLMs in memory

www.dztechy.com/qualcomm-sna...

Assuming this is INT4, we're probably looking at 8 AIE-ML Tiles @ ~1.46 GHz, the slowest Xilinx AIE ever

Make no mistake, this is a low-power co-processor much slower than the iGPU

gomoot.com/snapdragon-6...

#5g #blog #bluetooth 5.4 #cpu #gpu #int4 #kryo #lossless #lpddr5 #news #npu #picks #qualcomm #snapdragon6gen4 #tech #tecnologia #wifi6e

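Google has announced "Gemma 3", a new generation of AI models optimized for consumer GPUs, bringing powerful AI capabilities to accessible hardware. The models use quantization-aware training (QAT) to cut memory requirements substantially while preserving quality. For example, the VRAM requirement of the Gemma 3 27B model drops from 54 GB (BFloat16) to 14.1 GB (int4), so it can run on GPUs such as the NVIDIA RTX 3090. The models are designed for easy integration with popular tools, (1/2)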
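The 54 GB figure matches a simple weights-only estimate: 27 billion parameters at 2 bytes each. A rough sketch of that arithmetic follows. The helper name is hypothetical, and the estimate counts raw weights only, ignoring activations, the KV cache, and any quantization metadata.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Weights-only memory estimate in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

if __name__ == "__main__":
    params = 27e9  # Gemma 3 27B, taken as exactly 27 billion parameters (assumption)
    print("bf16:", weight_memory_gb(params, 16), "GB")
    print("int4:", weight_memory_gb(params, 4), "GB")
```

The int4 estimate comes out somewhat below the 14.1 GB quoted in the post. The post does not break down the difference; presumably it covers things this estimate leaves out, such as quantization scales or parts of the model kept at higher precision.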

Int4: mefuriaband

Tiktok: mefuriaband

www.instagram.com/mefuriaband?...

#sdv #banda #band

Gamers are fine to stick with the 4090, which should get cheaper

I'm still rocking 3090..

"But it will have 24 sparse tensor tflops fp16, or 48 sparse tensor tops int8, or 96 Sparse tensor tops int4, out of its tensor cores, per ghz."

...is just lazy. It's a statdump. Nobody's reading that mess, it's not even remotely grammatical.

Try. Harder.

moonshotai/Kimi-K2-Thinking

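This repository releases the latest open-source thinking model, "Kimi K2 Thinking", and provides its details.

K2 Thinking is based on an MoE architecture and specializes in step-by-step reasoning and dynamic tool calling.

With a long 256K context and INT4 quantization, it combines high performance with efficiency and shows state-of-the-art results on major reasoning benchmarks.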

Elon Musk files injunction against OpenAI to stop its move to become a for-profit company.[1] OpenAI's Altman confident Trump will keep US in AI lead.[2] Meta AI Releases Llama Guard 3-1B-INT4: A Compact and High-Performance AI Moderation…

http://bushaicave.com/2024/12/01/12-1-2024/

"The Grok 3 chatbot is said to be trained on the Colossus supercluster, which is equipped with 100,000 NVIDIA H100 GPUs, and may outperform leading AI models," according to the report.

and if you want to make any customizations, you can now configure any combination of BBQ, int4, int8, float32 + flat or HNSW 4/8

And Turing still looks […]

https://www.marktechpost.com/2024/11/30/meta-ai-releases-llama-guard-3-1b-int4-a-compact-and-high-performance-ai-moderation-model-for-human-ai-conversations/

#Ai

moonshotai/Kimi-K2-Thinking

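Introduces and provides Kimi K2 Thinking, a state-of-the-art open-source thinking model.

The model is built as a thinking agent that performs dynamic tool calling and multi-step, step-by-step reasoning, and it shows top-level performance on major benchmarks.

In addition, INT4 quantization and a 256K context window let it combine high efficiency with reasoning ability.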
