Isabelle Lee

@wordscompute.bsky.social

ml/nlp phding @ usc, currently visiting harvard, scientisting @ startup;

interpretability & training & reasoning & ai for physics

한american, she, (slightly outdated) iglee.me

Reposted by Isabelle Lee

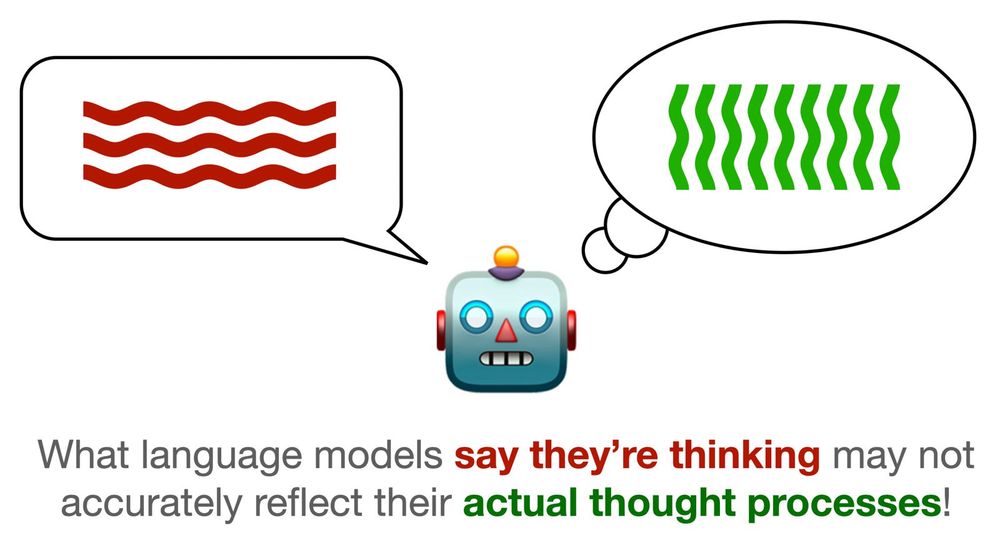

Excited to share our paper: "Chain-of-Thought Is Not Explainability"! We unpack a critical misconception in AI: models explaining their steps (CoT) aren't necessarily revealing their true reasoning. Spoiler: the transparency can be an illusion. (1/9) 🧵

July 1, 2025 at 3:41 PM

we've reached that point in this submission cycle, no amount of coffee will do 😞🙂↔️😞

May 9, 2025 at 11:51 PM

INCOMING

really excited to be headed to OFC in SF! so excited to revisit optical physics 😀

March 29, 2025 at 4:58 AM

titled: peer review

March 29, 2025 at 4:58 AM

Reposted by Isabelle Lee

Life update: I'm starting as faculty at Boston University @bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

March 27, 2025 at 2:24 AM

really excited to be headed to OFC in SF! so excited to revisit optical physics 😀

March 15, 2025 at 1:42 AM

Reposted by Isabelle Lee

Transformers employ different strategies through training to minimize loss, but how do these trade off and why?

Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns.

Read on 🔎⏬

March 11, 2025 at 7:13 AM

Reposted by Isabelle Lee

New paper–accepted as *spotlight* at #ICLR2025! 🧵👇

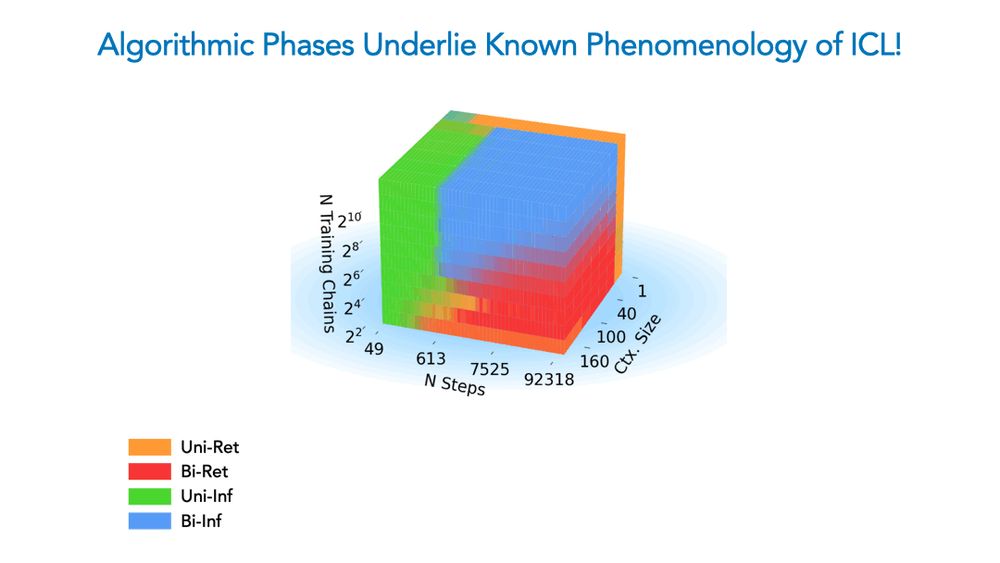

We show a competition dynamic between several algorithms splits a toy model’s ICL abilities into four broad phases of train/test settings! This means ICL is akin to a mixture of different algorithms, not a monolithic ability.

February 16, 2025 at 6:57 PM

Reposted by Isabelle Lee

Starlings move in undulating curtains across the sky. Forests of bamboo blossom at once. But some individuals don’t participate in these mystifying synchronized behaviors — and scientists are learning that they may be as important as those that do.

Out-of-Sync ‘Loners’ May Secretly Protect Orderly Swarms

Studies of collective behavior usually focus on how crowds of organisms coordinate their actions. But what if the individuals that don’t participate have just as much to tell us?

buff.ly

February 15, 2025 at 4:46 PM

Reposted by Isabelle Lee

New piece out!

We explain why Fully Autonomous Agents Should Not be Developed, breaking “AI Agent” down into its components & examining through ethical values.

With @evijit.io, @giadapistilli.com and @sashamtl.bsky.social

huggingface.co/papers/2502....

Paper page - Fully Autonomous AI Agents Should Not be Developed

Join the discussion on this paper page

huggingface.co

February 6, 2025 at 9:56 AM

Reposted by Isabelle Lee

Brian Hie harnessed the powerful parallels between DNA and human language to create an AI tool that interprets genomes. Read his conversation with Ingrid Wickelgren: www.quantamagazine.org/the-poetry-f...

The Poetry Fan Who Taught an LLM to Read and Write DNA | Quanta Magazine

By treating DNA as a language, Brian Hie’s “ChatGPT for genomes” could pick up patterns that humans can’t see, accelerating biological design.

www.quantamagazine.org

February 5, 2025 at 4:00 PM

Reposted by Isabelle Lee

How do tokens evolve as they are processed by a deep Transformer?

With José A. Carrillo, @gabrielpeyre.bsky.social and @pierreablin.bsky.social, we tackle this in our new preprint: A Unified Perspective on the Dynamics of Deep Transformers arxiv.org/abs/2501.18322

ML and PDE lovers, check it out!

January 31, 2025 at 4:56 PM

it’s finally raining in la:)

January 26, 2025 at 7:20 PM

i go on a really long walk almost every day, and at a high point in silverlake, i saw fire from all sides. and it's harder to breathe. and everything is orange.

January 9, 2025 at 2:14 PM

Reposted by Isabelle Lee

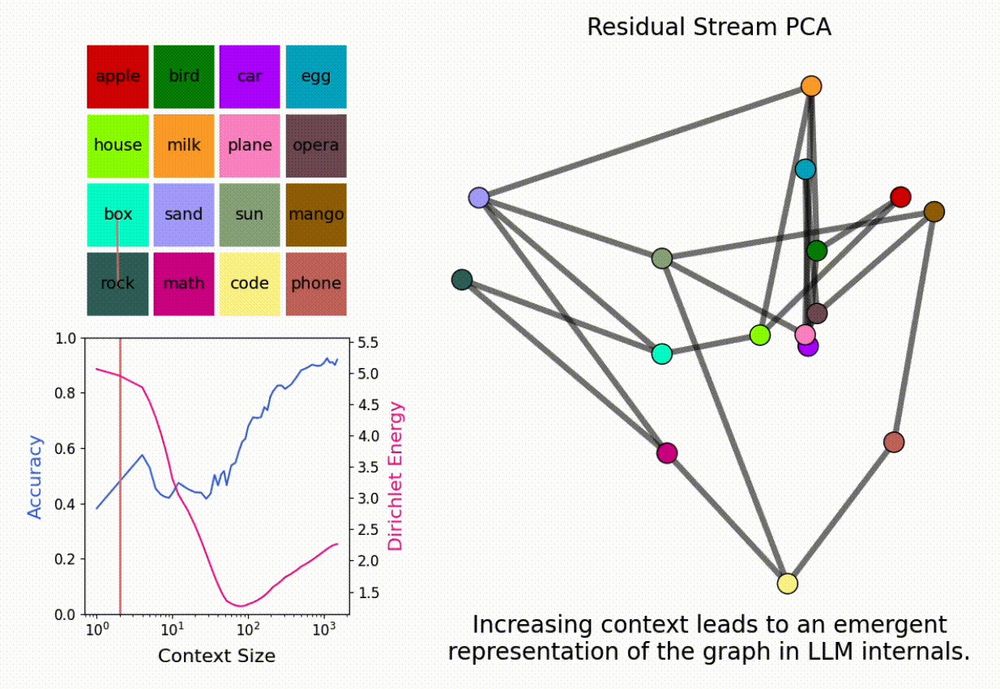

New paper <3

Interested in inference-time scaling? In-context Learning? Mech Interp?

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

January 5, 2025 at 3:49 PM

Reposted by Isabelle Lee

Hollywood High School will serve as an evacuation site for the Sunset fire in Hollywood, KTLA reported. The school is at 1521 Highland Ave. www.latimes.com/california/s...

Sunset fire in Hollywood Hills: Evacuations, shelter

An evacuation zone was established between the 101 Freeway and Laurel Canyon and between Mulholland Drive and Hollywood Boulevard.

www.latimes.com

January 9, 2025 at 3:03 AM

Reposted by Isabelle Lee

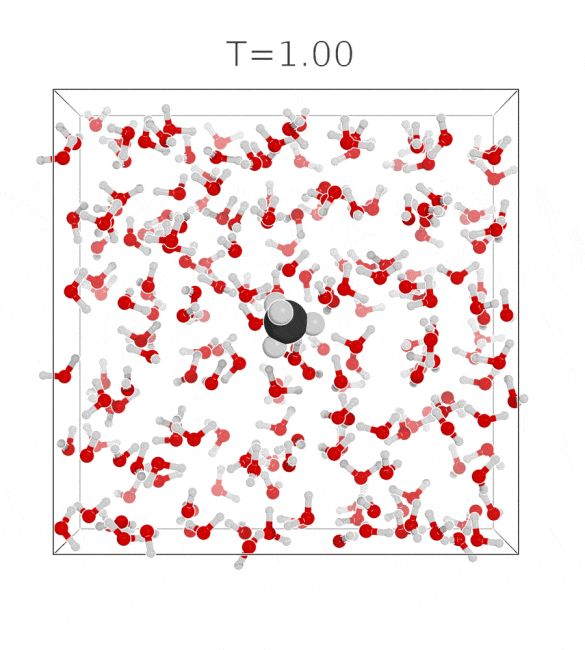

hello bluesky! we have a new preprint on solvation free energies:

tl;dr: We define an interpolating density by its sampling process, and learn the corresponding equilibrium potential with score matching. arxiv.org/abs/2410.15815

with @francois.fleuret.org and @tbereau.bsky.social

(1/n)

December 17, 2024 at 12:32 PM

Reposted by Isabelle Lee

The slides of my NeurIPS lecture "From Diffusion Models to Schrödinger Bridges - Generative Modeling meets Optimal Transport" can be found here

drive.google.com/file/d/1eLa3...

BreimanLectureNeurIPS2024_Doucet.pdf

drive.google.com

December 15, 2024 at 6:40 PM

Reposted by Isabelle Lee

An Evolved Universal Transformer Memory

sakana.ai/namm/

Introducing Neural Attention Memory Models (NAMM), a new kind of neural memory system for Transformers that not only boost their performance and efficiency but are also transferable to other foundation models without any additional training!

December 10, 2024 at 1:34 AM

Reposted by Isabelle Lee

Tomorrow (Dec 12) poster #2311! Go talk to @emalach.bsky.social and the other authors at #NeurIPS, say hi from me!

Modern generative models are trained to imitate human experts, but can they actually beat those experts? Our new paper uses imitative chess agents to explore when a model can "transcend" its training distribution and outperform every human it's trained on. arxiv.org/abs/2406.11741

December 11, 2024 at 6:13 PM

Reposted by Isabelle Lee

Sometimes our anthropocentric assumptions about how intelligence "should" work (like using language for reasoning) may be holding AI back. Letting AI reason in its own native "language" in latent space could unlock new capabilities, improving reasoning over Chain of Thought. arxiv.org/pdf/2412.06769

December 10, 2024 at 2:59 PM

Reposted by Isabelle Lee

What counts as in-context learning (ICL)? Typically, you might think of it as learning a task from a few examples. However, we’ve just written a perspective (arxiv.org/abs/2412.03782) suggesting interpreting a much broader spectrum of behaviors as ICL! Quick summary thread: 1/7

The broader spectrum of in-context learning

The ability of language models to learn a task from a few examples in context has generated substantial interest. Here, we provide a perspective that situates this type of supervised few-shot learning...

arxiv.org

December 10, 2024 at 6:17 PM

Reposted by Isabelle Lee

Can language models transcend the limitations of training data?

We train LMs on a formal grammar, then prompt them OUTSIDE of this grammar. We find that LMs often extrapolate logical rules and apply them OOD, too. Proof of a useful inductive bias.

Check it out at NeurIPS:

nips.cc/virtual/2024...

NeurIPS Poster: Rule Extrapolation in Language Modeling: A Study of Compositional Generalization on OOD Prompts (NeurIPS 2024)

nips.cc

December 6, 2024 at 1:31 PM
