interpretability & training & reasoning & ai for physics

Korean-American, she, (slightly outdated) iglee.me

@bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

Excited to share our newest work, where we show remarkably rich competitive and cooperative interactions (termed "coopetition") as a transformer learns.

Read on 🔎⏬

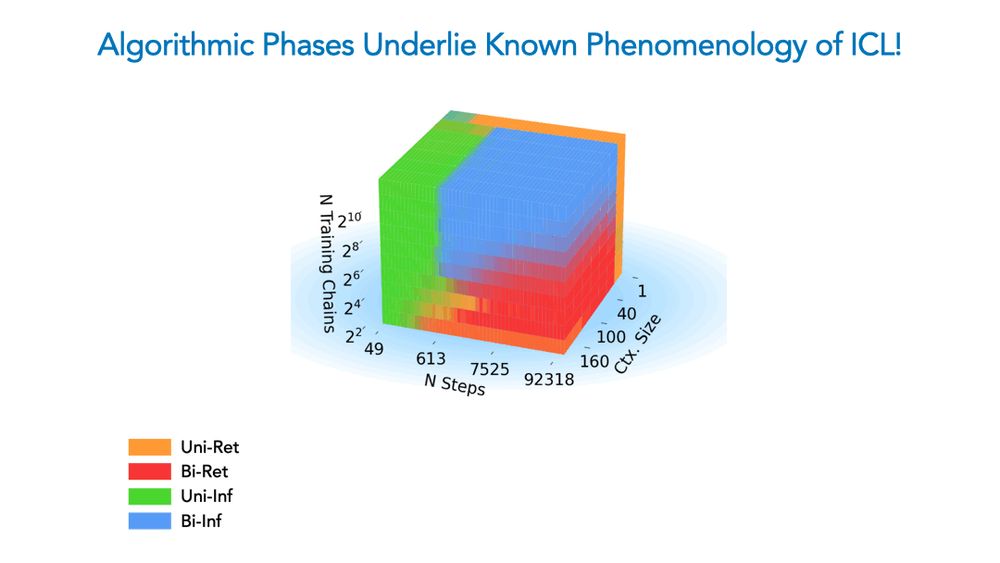

We show that a competition dynamic between several algorithms splits a toy model’s ICL abilities into four broad phases across train/test settings! This means ICL is akin to a mixture of different algorithms, not a monolithic ability.

We explain why Fully Autonomous Agents Should Not be Developed, breaking “AI Agent” down into its components & examining through ethical values.

With @evijit.io, @giadapistilli.com and @sashamtl.bsky.social

huggingface.co/papers/2502....

With José A. Carrillo, @gabrielpeyre.bsky.social and @pierreablin.bsky.social, we tackle this in our new preprint: A Unified Perspective on the Dynamics of Deep Transformers arxiv.org/abs/2501.18322

ML and PDE lovers, check it out!

Interested in inference-time scaling? In-context Learning? Mech Interp?

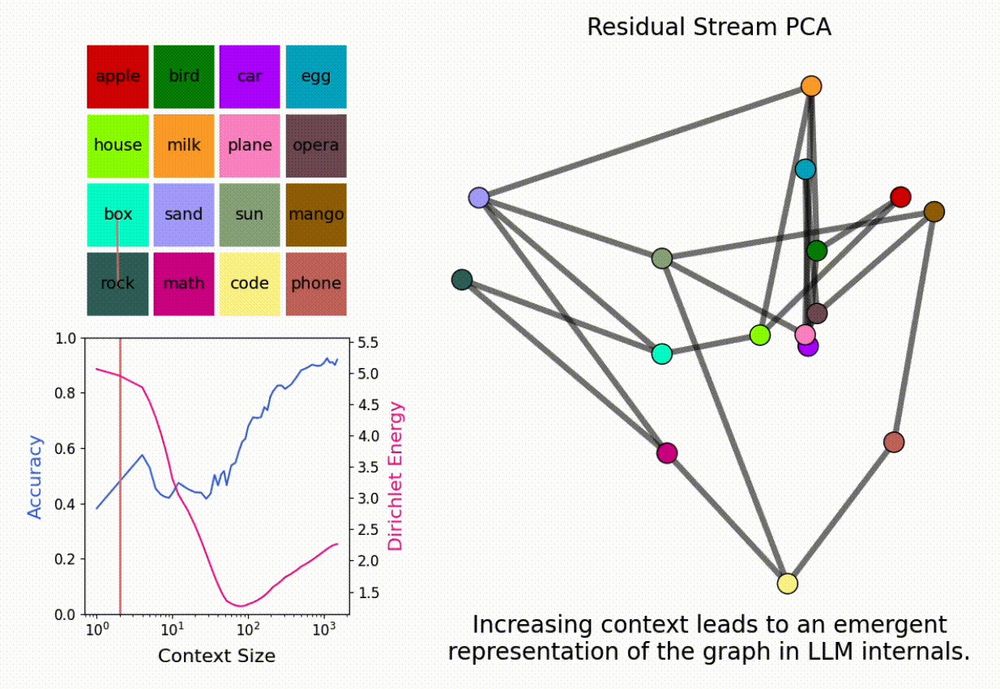

LMs can solve novel in-context tasks, with sufficient examples (longer contexts). Why? Bc they dynamically form *in-context representations*!

1/N

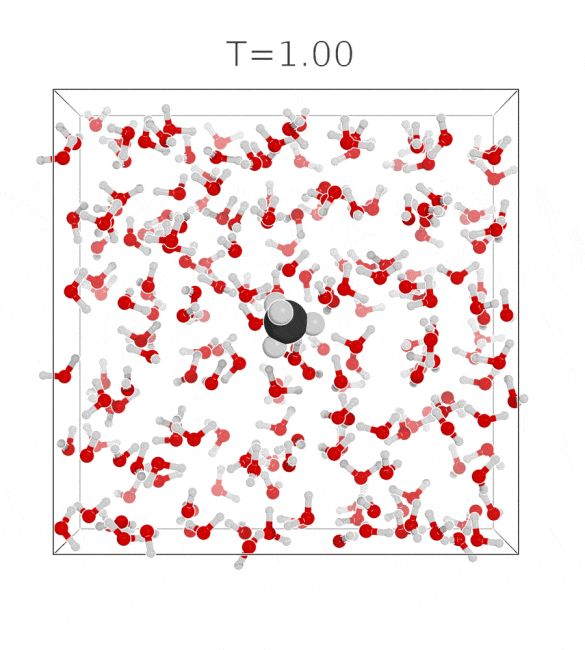

tl;dr: We define an interpolating density by its sampling process, and learn the corresponding equilibrium potential with score matching. arxiv.org/abs/2410.15815

with @francois.fleuret.org and @tbereau.bsky.social

(1/n)

drive.google.com/file/d/1eLa3...

sakana.ai/namm/

Introducing Neural Attention Memory Models (NAMM), a new kind of neural memory system for Transformers that not only boosts their performance and efficiency but is also transferable to other foundation models without any additional training!

We train LMs on a formal grammar, then prompt them OUTSIDE of this grammar. We find that LMs often extrapolate logical rules and apply them OOD, too. Proof of a useful inductive bias.

Check it out at NeurIPS:

nips.cc/virtual/2024...
