My webpage: https://vcastin.github.io/

That was quite popular and here is a synthesis of the responses:

Made with ❤️ at Apple

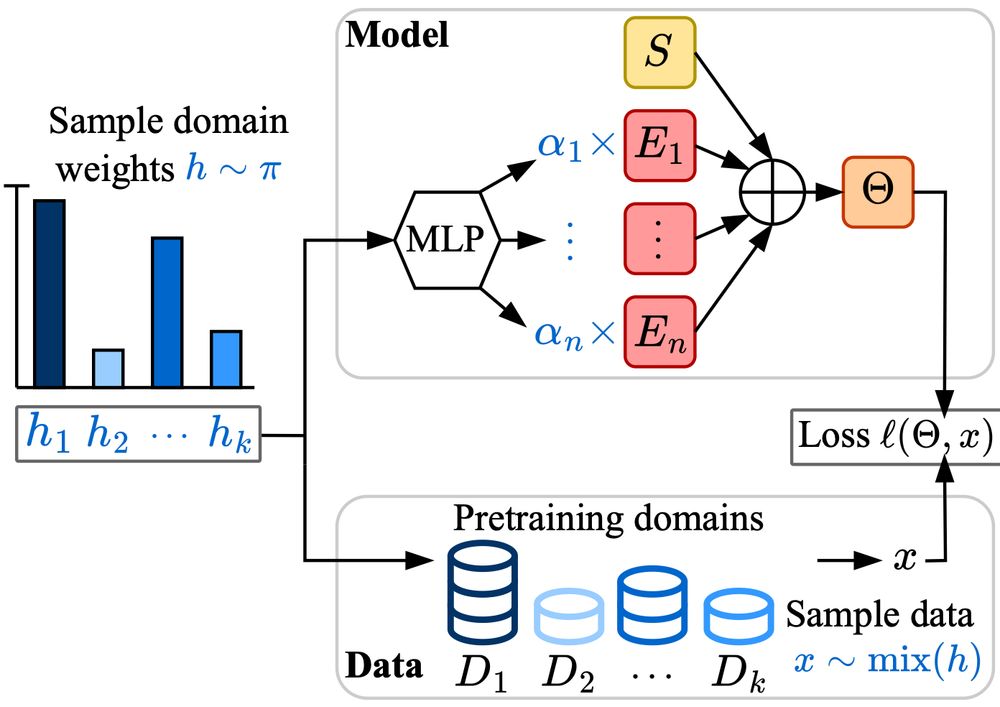

Thanks to my co-authors David Grangier, Angelos Katharopoulos, and Skyler Seto!

arxiv.org/abs/2502.01804

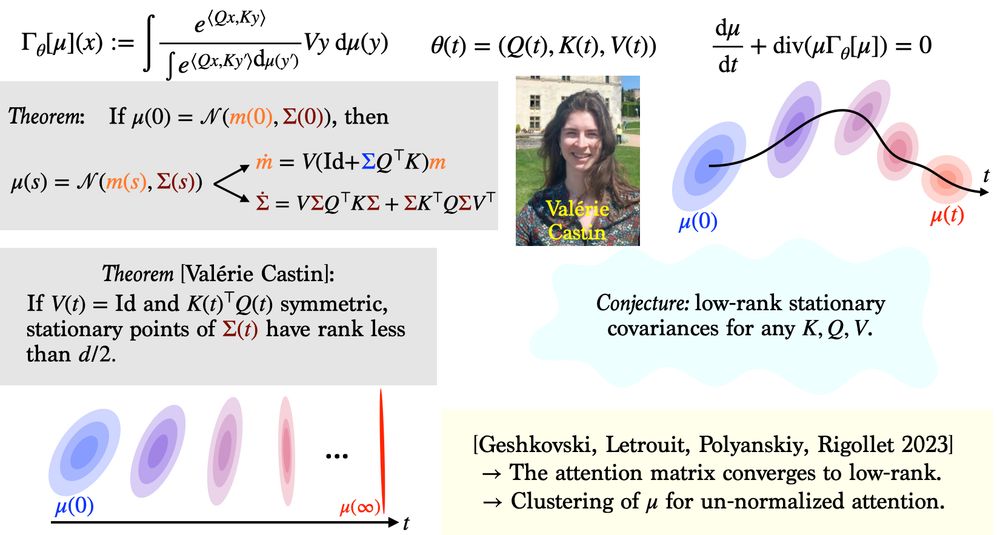

With José A. Carrillo, @gabrielpeyre.bsky.social and @pierreablin.bsky.social, we tackle this in our new preprint: A Unified Perspective on the Dynamics of Deep Transformers arxiv.org/abs/2501.18322

ML and PDE lovers, check it out!

Make attention ~18% faster with a drop-in replacement 🚀

Code:

github.com/apple/ml-sig...

Paper:

arxiv.org/abs/2409.04431

We take a step towards unravelling its mystery by explaining why the phenomenon of disentanglement arises in generative latent variable models.

Blog post: carl-allen.github.io/theory/2024/...
