See https://www.visinf.tu-darmstadt.de/visual_inference

📢 Stop missing great workshop speakers just because the workshop wasn’t on your radar. Browse them all in one place:

robinhesse.github.io/workshop_spe...

(also available for @euripsconf.bsky.social)

#NeurIPS #EurIPS

www.career.tu-darmstadt.de/tu-darmstadt...

🌍 visinf.github.io/recover

Talk: Tue 09:30 AM, Kalakaua Ballroom

Poster: Tue 11:45 AM, Exhibit Hall I #76

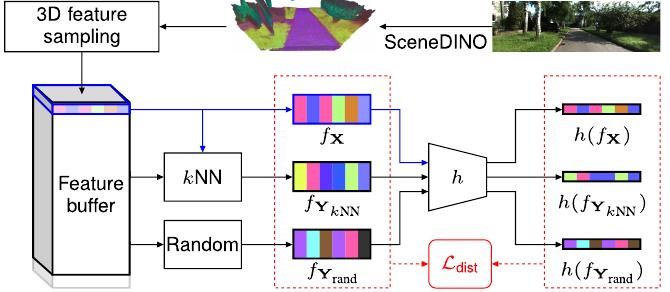

Come by our SceneDINO poster at NeuSLAM today at 14:15 (Kamehameha II) or on Tue at 15:15 (Ex. Hall I 627)!

W/ Jevtić @fwimbauer.bsky.social @olvrhhn.bsky.social Rupprecht, @stefanroth.bsky.social @dcremers.bsky.social

Work by Jannik Endres, @olvrhhn.bsky.social, Charles Corbière, @simoneschaub.bsky.social, @stefanroth.bsky.social and Alexandre Alahi.

@ellis.eu @tuda.bsky.social

ellis.eu/news/ellis-p...

🎤 Prof. Dima Damen (Uni Bristol & Google DeepMind)

🗓️ Thursday, Sept 25, 2025, 10:30–11:30

Talk: Opportunities in Egocentric Vision

Discover new frontiers in egocentric video understanding, from wearable devices to large-scale datasets.

🔗 www.dagm-gcpr.de/year/2025/re...

Have a top-tier paper from the last year (CVPR, NeurIPS, ICLR, ECCV, ICCV, etc.)?

Share your work with the vibrant GCPR community!

🗓️ Submission Deadline: July 28, 2025

🔗 Instructions: www.dagm-gcpr.de/year/2025/su...

Aleksandar Jevtić, Christoph Reich, Felix Wimbauer ... Daniel Cremers

arxiv.org/abs/2507.06230

Trending on www.scholar-inbox.com

🌍: visinf.github.io/scenedino/

📃: arxiv.org/abs/2507.06230

🤗: huggingface.co/spaces/jev-a...

@jev-aleks.bsky.social @fwimbauer.bsky.social @olvrhhn.bsky.social @stefanroth.bsky.social @dcremers.bsky.social

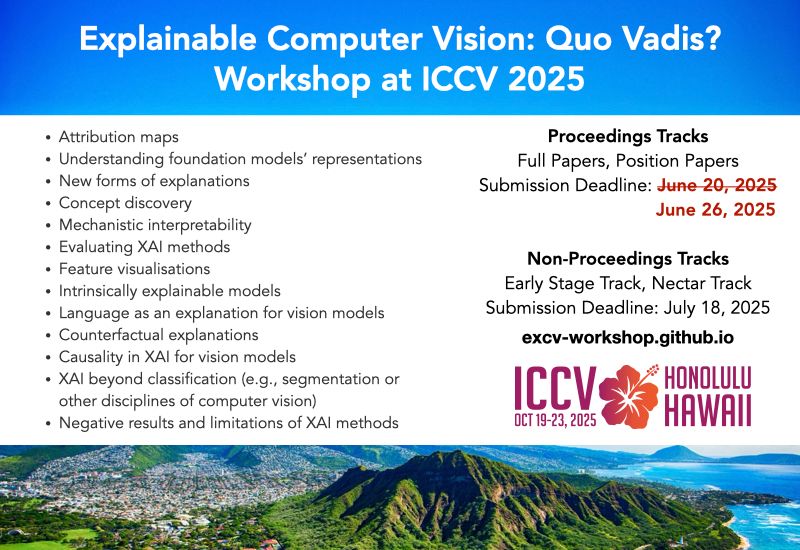

🗓️ Submissions open until June 26 AoE.

📄 Got accepted to ICCV? Congrats! Consider our non-proceedings track.

#ICCV2025 @iccv.bsky.social

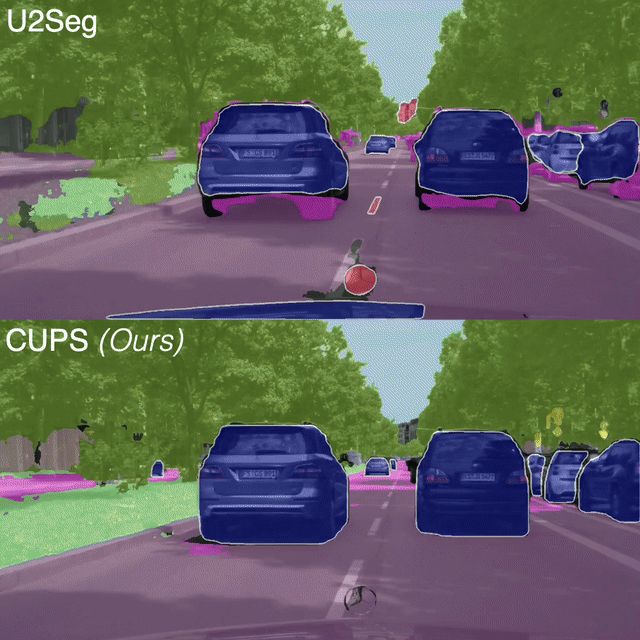

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

For more details, check out cvg.cit.tum.de

"RAI" is one of the projects with which TU Darmstadt (TUDa) is applying for a Cluster of Excellence.

www.youtube.com/watch?v=2VAm...

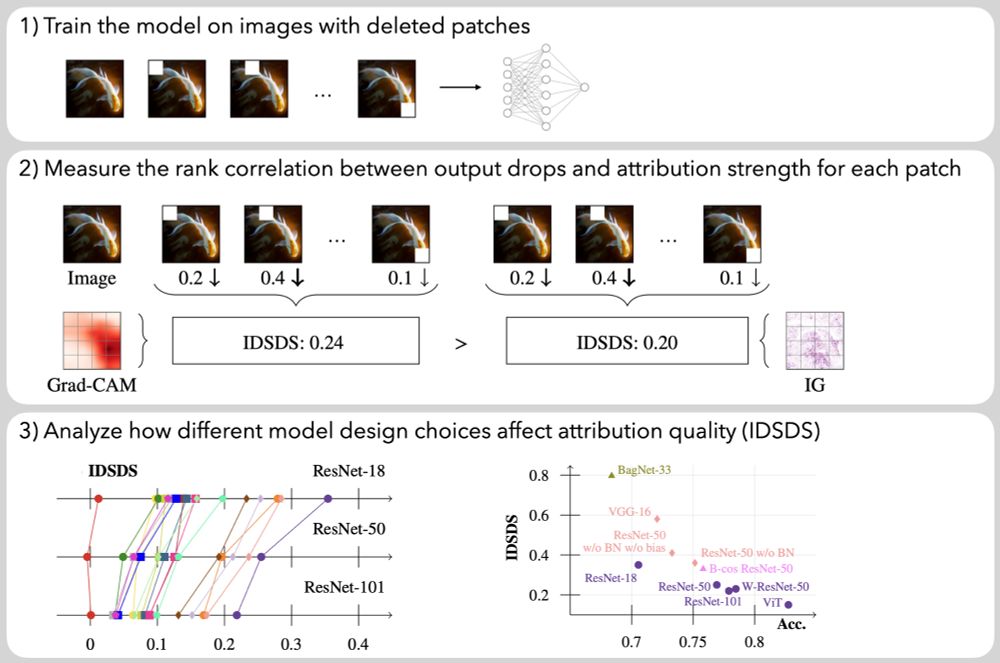

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds

visinf.github.io/primaps/

PriMaPs generate masks from self-supervised features, which can then be used to boost unsupervised semantic segmentation via stochastic EM.