Simone Schaub-Meyer

@simoneschaub.bsky.social

190 followers

220 following

2 posts

Assistant Professor of Computer Science at TU Darmstadt, Member of @ellis.eu, DFG #EmmyNoether Fellow, PhD @ETH

Computer Vision & Deep Learning

Reposted by Simone Schaub-Meyer

Visual Inference Lab

@visinf.bsky.social

· Sep 29

ELLIS PhD Program: Call for Applications 2025

The ELLIS mission is to create a diverse European network that promotes research excellence and advances breakthroughs in AI, as well as a pan-European PhD program to educate the next generation of AI...

ellis.eu

Reposted by Simone Schaub-Meyer

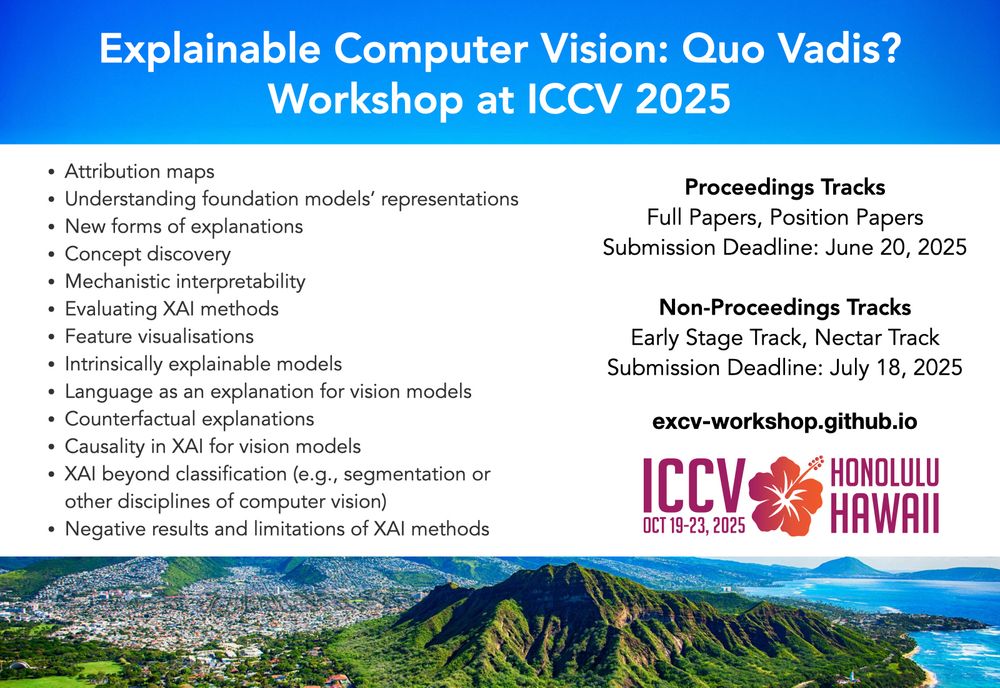

Visual Inference Lab

@visinf.bsky.social

· Sep 23

Reposted by Simone Schaub-Meyer

Visual Inference Lab

@visinf.bsky.social

· Jun 11

Reposted by Simone Schaub-Meyer

TU Darmstadt

@tuda.bsky.social

· May 22

Two Clusters of Excellence for TU Darmstadt

A great success for the Technische Universität Darmstadt: two of its research projects will be funded as Clusters of Excellence. The Excellence Commission in the competition of the prestigious Excell...

www.tu-darmstadt.de

Reposted by Simone Schaub-Meyer

TU Darmstadt

@tuda.bsky.social

· Mar 4

New Emmy Noether group researches explainable AI for image and video analysis

The German Research Foundation (Deutsche Forschungsgemeinschaft) has accepted Dr. Simone Schaub-Meyer into its Emmy Noether Programme. With her new junior research group, she aims to research and develop methods that [...] the understand...

www.tu-darmstadt.de