See https://www.visinf.tu-darmstadt.de/visual_inference

by @dustin-carrion.bsky.social, @stefanroth.bsky.social, @simoneschaub.bsky.social

🌍 visinf.github.io/emat

📄 arxiv.org/abs/2507.23642

💻 github.com/visinf/emat

Poster: Sun 4:40 PM - Exhibit Hall II #73

by Xinrui Gong*, @olvrhhn.bsky.social *, @christophreich.bsky.social , Krishnakant Singh, @simoneschaub.bsky.social , @dcremers.bsky.social @stefanroth.bsky.social

by Gopika Sudhakaran, Hikaru Shindo, Patrick Schramowski, @simoneschaub.bsky.social, @kerstingaiml.bsky.social, @stefanroth.bsky.social

📄 arxiv.org/abs/2507.23543

Poster: Wed 2:45 PM, Exhibit Hall I #1501

by Baris Zongur, @robinhesse.bsky.social, @stefanroth.bsky.social

📄 arxiv.org/abs/2508.21695

Poster: Tue 11:45 AM, Exhibit Hall I #326

by @jev-aleks.bsky.social *, @christophreich.bsky.social *, @fwimbauer.bsky.social , @olvrhhn.bsky.social , Christian Rupprecht, @stefanroth.bsky.social, @dcremers.bsky.social

🌍 visinf.github.io/scenedino/

by @skiefhaber.de, @stefanroth.bsky.social, @simoneschaub.bsky.social

🌍 visinf.github.io/recover

Talk: Tue 09:30 AM, Kalakaua Ballroom

Poster: Tue 11:45 AM, Exhibit Hall I #76

Work by Jannik Endres, @olvrhhn.bsky.social, Charles Cobière, @simoneschaub.bsky.social, @stefanroth.bsky.social and Alexandre Alahi.

by Baris Zongur, @robinhesse.bsky.social, and @stefanroth.bsky.social

📄: arxiv.org/abs/2508.21695

Talk: Wednesday, 1:30 PM, Oral Session 2

Poster: Wednesday, 3:30 PM, Poster 12

by @skiefhaber.de, @stefanroth.bsky.social, and @simoneschaub.bsky.social

🌍: visinf.github.io/recover

Poster: Friday, 10:30 AM, Poster 14

by @olvrhhn.bsky.social *, @christophreich.bsky.social *, @neekans.bsky.social, @dcremers.bsky.social, Christian Rupprecht, @stefanroth.bsky.social

🌍: visinf.github.io/cups

Poster: Thursday, 1:30 PM, Poster 19

Talk: Friday, 10:00 AM, Oral Session 5

Poster: Friday, 10:30 AM, Poster 12

by @dustin-carrion.bsky.social, @stefanroth.bsky.social, and @simoneschaub.bsky.social

🌍: visinf.github.io/emat

Poster: Wednesday, 3:30 PM, Poster 8

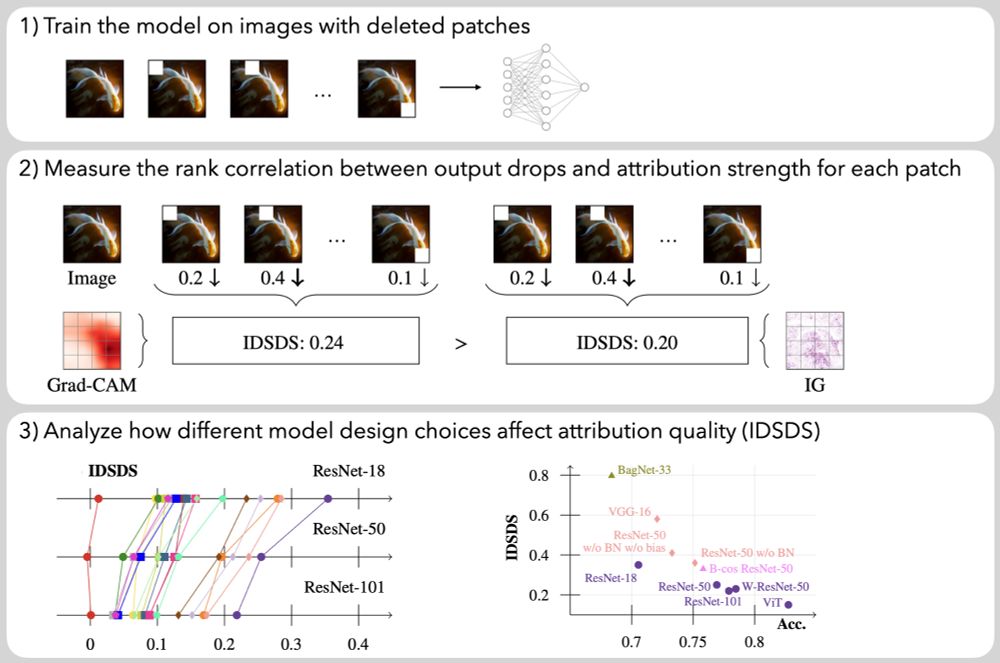

by @robinhesse.bsky.social, Jonas Fischer, @simoneschaub.bsky.social, and @stefanroth.bsky.social

Paper: arxiv.org/abs/2504.12939

Talk: Thursday, 11:40 AM, Grand Ballroom C1

Poster: Thursday, 12:30 PM, ExHall D, Poster 31-60

by Krishnakant Singh, @simoneschaub.bsky.social, and @stefanroth.bsky.social

Project Page: visinf.github.io/glass

Sunday 4:00 PM, ExHall D, Poster 239

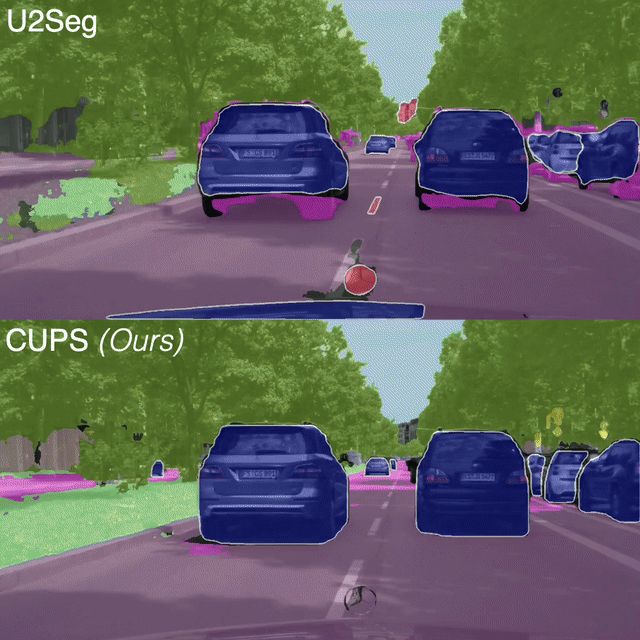

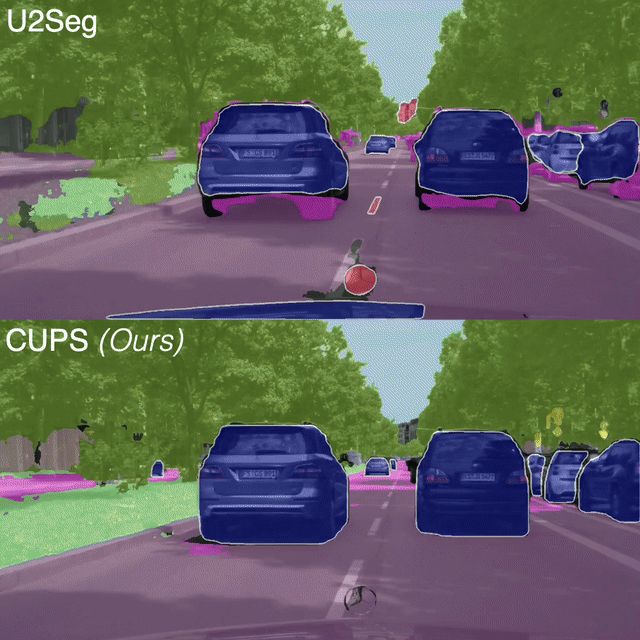

by @olvrhhn.bsky.social , @christophreich.bsky.social , @neekans.bsky.social , @dcremers.bsky.social, Christian Rupprecht, and @stefanroth.bsky.social

Sunday, 8:30 AM, ExHall D, Poster 330

Project Page: visinf.github.io/cups

✅ Generalizes to different datasets, including an out-of-distribution (OOD) setting.

✅ Stable performance across domains, unlike supervised learning.

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

Paper: arxiv.org/abs/2407.11910

Code: github.com/visinf/idsds

@hf.co @gradio-hf.bsky.social