http://dmytro.ai

SAM 3D Team et al?

tl;dr: in title. 8-stage training, dataset, human labeling. Don't read the tl;dr, read the whole paper

arxiv.org/abs/2511.16624

Weixun Luo, Ranran Huang, Junpeng Jing, Krystian Mikolajczyk

tl;dr: in title + dataset.

arxiv.org/abs/2511.16567

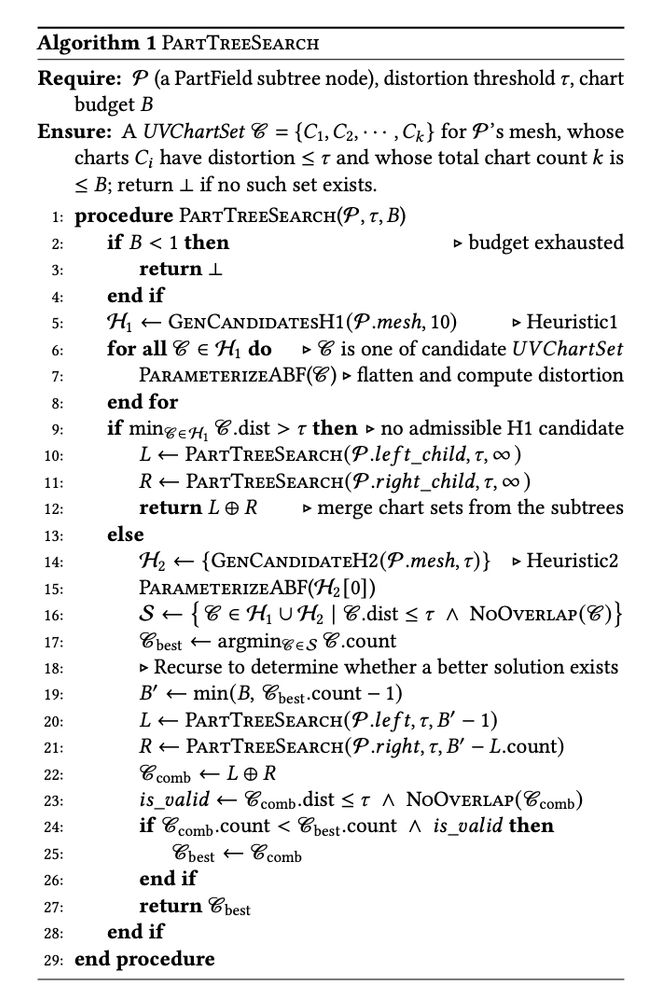

Zhaoning Wang, Xinyue Wei, Ruoxi Shi, Xiaoshuai Zhang, Hao Su, Minghua Liu

arxiv.org/abs/2511.16659

tl;dr: in-title, start from learning-based split -> algorithmic finish

Here are the main improvements we made since RoMa:

@parskatt.bsky.social et 11 al.

tl;dr: in title.

Predict covariance per-pixel, more datasets, use DINOv3, adjust architecture.

arxiv.org/abs/2511.15706

Pro-tip#2: don't do that with other professors as well. It's not just me.

Project Page: depth-anything-3.github.io

Code: github.com/ByteDance-Se...

Hugging Face Demo: huggingface.co/spaces/depth...

Haotong Lin, Sili Chen, Junhao Liew, Donny Y. Chen, Zhenyu Li, Guang Shi, Jiashi Feng, Bingyi Kang

tl;dr: DINOv2+reshape for multiview,

joint DPT, synth teacher. Depth-ray output.

Simpler VGGT.

arxiv.org/abs/2511.10647

That's a 10-15 year observation.

1. When architecture information is combined with a Figure showing what actually happens with the data:

@kaggle.com

The dataset is exactly the same as in IMC2025, but the competition is ongoing for a year, making it better for an academic leaderboard and persistence.

kaggle.com/competitions...

1/2

(I'm) For the same reason I downloaded:

1. WhatsApp

2. Signal, used only when living in 🇳🇱.

3. Telegram

(in that order) to be in contact with someone/some people

The 🐦 still offers better UX for me. Nevertheless,

Kudos to all folks engaged in making a clear blue sky 💪🏼

Ofc, that’s just me

Grounded in anthropometric data (MakeHuman) & WHO stats, Anny offers:

🧠 Interpretable shape control

👶👩‍🦳 Unified from infants to elders

🧩 Versatile for fitting, synthesis & HMR

🌍 Open under Apache 2.0

github.com/nianticspati...

My favorites:

1) CAD representation

2) synthetic data to help city-scale reconstruction

3) trends in 3D vision

4) visual chain-of-thoughts?

Come on, exclude them from the char limit, or whatever.

On twitter, when authors are on the platform, it means MORE space. Here it means LESS space

It is easy to fit the paper name + authors (handles) + tl;dr + arXiv link on twitter, but almost impossible here :(

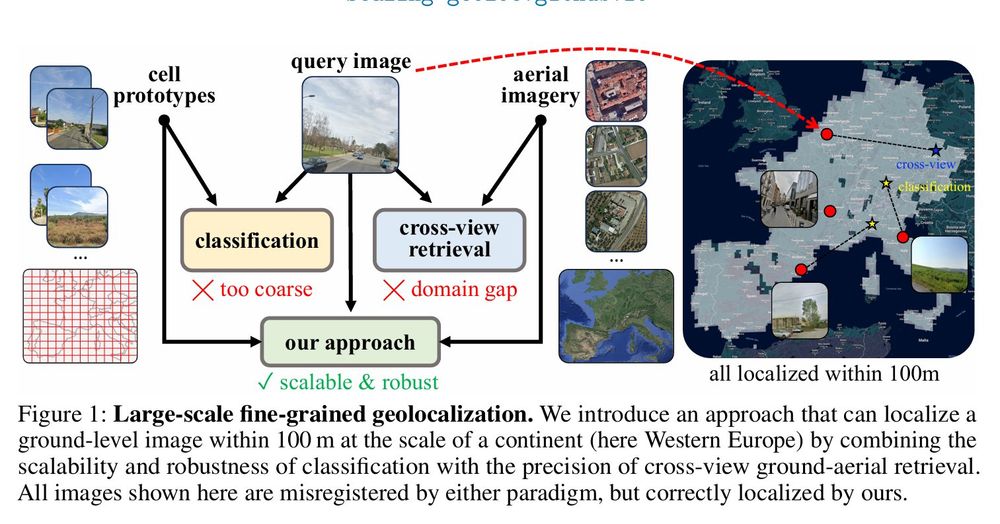

Philipp Lindenberger

@pesarlin.bsky.social @janhosang.bsky.social Matteo Balice @marcpollefeys.bsky.social Simon Lynen, Eduard Trulls

tl;dr: combine ground+aerial to get cell-prototype. Acc@ 42Gb = ground [email protected]

arxiv.org/abs/2510.26795

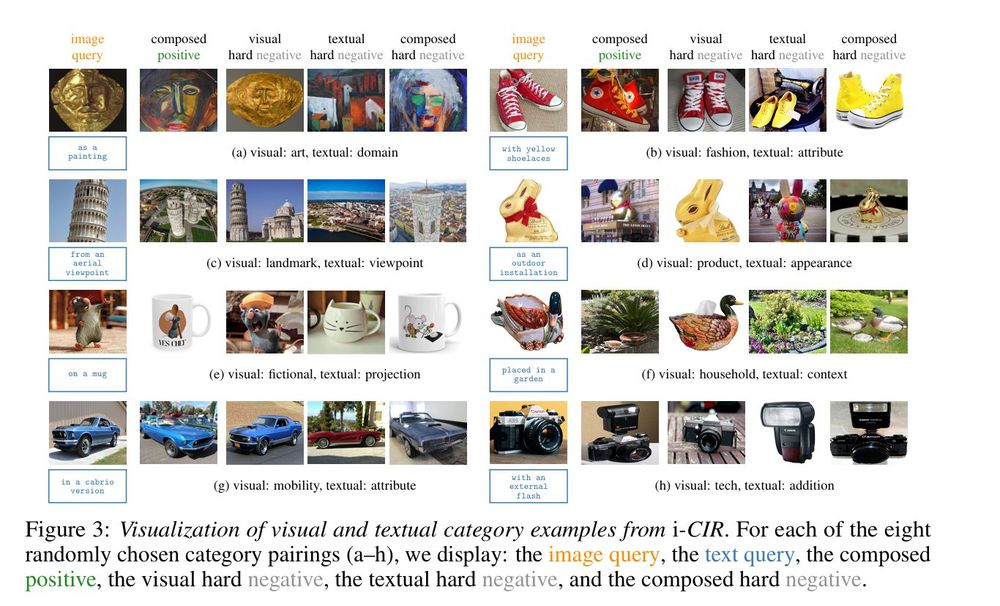

@billpsomas.bsky.social George Retsinas @nikos-efth.bsky.social Panagiotis Filntisis, Yannis Avrithis, Petros Maragos, Ondrej Chum, @gtolias.bsky.social

tl;dr: condition-based retrieval (+dataset) - old photo/sunset/night/aerial/model

arxiv.org/abs/2510.25387
