Throws machine learning at traditional computer vision pipelines to see what sticks. Differentiates the non-differentiable.

📍Europe 🔗 http://ebrach.github.io

nianticspatial.github.io/fastforward/

Work by @axelbarroso.bsky.social, Tommaso Cavallari and Victor Adrian Prisacariu.

ACESLAM: arxiv.org/abs/2512.14032

Niantic Spatial is offering research internships on a multitude of 3D vision topics: relocalization, reconstruction, 3D VLMs... Top-tier papers regularly come out of our internships 🚀

nianticspatial.careers.hibob.com/jobs/0fc4871...

It's the Winter School on Social Robotics, Artificial Intelligence and Multimedia (SoRAIM), 9-13 Feb 2026 👇

youtu.be/NymS-f4DNh0

3.5h of goodness dedicated to robot-object manipulation w/ Hao-Shu Fang (MIT), Maximilian Durner (DLR), Agastya Kalra+Vahe Taamazyan (Intrinsic) and Sergey Levine (Physical Intelligence)

Now, after one involuntary overnight stay: delay again. Great job @lufthansagroup.bsky.social

day 1: read the reviews

day 2-6: calm down

day 7: write the rebuttal

Gives you, e.g., an RGB-D version of ACE0. In the snippet below, I jointly reconstruct multiple RGB-D scans of ScanNet.

github.com/nianticlabs/...

github.com/nianticspati...

cmp.felk.cvut.cz/sixd/worksho...

🤖 Object pose estimation for industrial robotics

📢 Speakers:

- Sergey Levine (UC Berkeley)

- Haoshu Fang (MIT)

- Maximilian Durner (DLR)

- Agastya Kalra & Vahe Taamazyan (Intrinsic)

📊 BOP 2025 with new BOP-Industrial datasets

I will give three (very different) talks at workshops and tutorials, see info below.

We also present two papers, ACE-G and SCR Priors.

And it's the 10th (!) anniversary of the R6D workshop, which we co-organize.

Train a scene coordinate regressor with "map codes" (i.e., trainable inputs) so that you can train one generalizable regressor. Then, find these "map codes" to localize.
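A toy sketch of the "map code" idea: one shared regressor takes a per-scene code as an extra input, and for a given scene that code is found while the regressor stays frozen. Everything here is an assumption for illustration, not the actual method: the "regressor" is a fixed random linear map instead of a trained network, and the sizes `FEAT_DIM`/`CODE_DIM` and the helpers `regress`/`find_map_code` are hypothetical. The code is recovered by least squares, which only works because this toy regressor is linear in the code.

```python
import numpy as np

FEAT_DIM, CODE_DIM = 8, 2  # hypothetical sizes, chosen only for illustration

rng = np.random.default_rng(0)
# Toy shared "regressor": one fixed linear map from [features, map code] to 3D coordinates.
# A real scene coordinate regressor would be a trained neural network.
W = rng.normal(size=(3, FEAT_DIM + CODE_DIM))
W_feat, W_code = W[:, :FEAT_DIM], W[:, FEAT_DIM:]


def regress(features, code):
    """Predict scene coordinates (N x 3) from per-pixel features (N x FEAT_DIM) and one map code."""
    codes = np.tile(code, (len(features), 1))  # the same code is fed alongside every feature
    return np.concatenate([features, codes], axis=1) @ W.T


def find_map_code(features, coords):
    """Find the map code that best explains known scene coordinates, keeping W frozen.

    Because this toy regressor is linear in the code, minimising the summed squared error
    reduces to least squares against the mean residual. A neural regressor would need
    gradient descent on the code instead.
    """
    residual = coords - features @ W_feat.T  # N x 3: the part the code must account for
    code, *_ = np.linalg.lstsq(W_code, residual.mean(axis=0), rcond=None)
    return code


# Usage: recover a known code from coordinates the regressor itself produced.
true_code = rng.normal(size=CODE_DIM)
feats = rng.normal(size=(100, FEAT_DIM))
coords = regress(feats, true_code)
print(np.allclose(find_map_code(feats, coords), true_code))  # True
```

The split mirrors the post: the regressor weights are shared and fixed at localization time, and only the small per-scene input has to be found.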

My group works on topics in vision, machine learning, and AI for climate.

For more information and details on how to get in touch, please check out my website:

homepages.inf.ed.ac.uk/omacaod

Can we learn what a successful reconstruction looks like, and use this knowledge when reconstructing new scenes?

Explainer: youtu.be/RkV6U5xYc20

Project Page: nianticspatial.github.io/scr-priors

Inspiring work by Wenjing Bian et al.!

openaccess.thecvf.com/ICCV2025

Workshop papers will be posted shortly. Aloha!

We disentangle coordinate regression and latent map representation, which lets us pre-train the regressor to generalize from mapping data to difficult query images.

Page: nianticspatial.github.io/ace-g/

Stellar work by Leonard Bruns et al.!

- Daniel Barath

- @ericbrachmann.bsky.social

- Viktor Larsson

- Jiri Matas

- and me

danini.github.io/ransac-2025-...

#ICCV2025

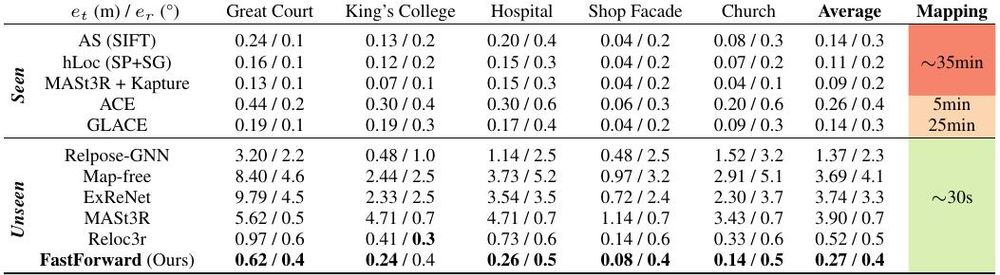

Axel Barroso-Laguna, Tommaso Cavallari, Victor Adrian Prisacariu, Eric Brachmann

arxiv.org/abs/2510.00978

Trending on www.scholar-inbox.com