Grammar-Driven SMILES Standardization with TokenSMILES.

📜 pubs.rsc.org/en/content/a...

[1/6]

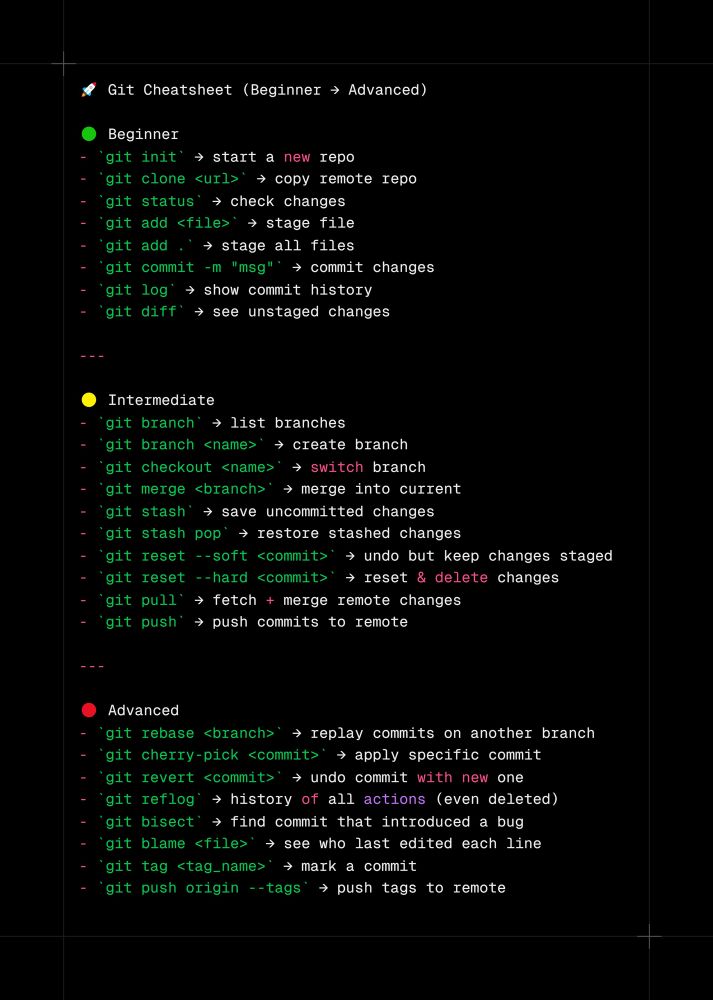

from beginner → advanced → intermediate

We can do better. "How to Select Datapoints for Efficient Human Evaluation of NLG Models?" shows how.🕵️

(random is still a devilishly good baseline)
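For reference, the random baseline amounts to drawing a uniform subset of the outputs for annotators to judge. Below is a minimal Python sketch. The function name `select_random_subset`, the budget of 100 and the fixed seed are illustrative assumptions and are not taken from the paper.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def select_random_subset(items: Sequence[T], budget: int, seed: int = 0) -> List[T]:
    """Hypothetical helper: pick `budget` distinct items uniformly at random."""
    if budget > len(items):
        raise ValueError("budget exceeds number of available items")
    # A local RNG keeps the selection reproducible without touching global state.
    rng = random.Random(seed)
    return rng.sample(list(items), budget)


# Hypothetical usage: 1,000 model outputs, budget for 100 human judgments.
outputs = [f"output-{i}" for i in range(1000)]
subset = select_random_subset(outputs, budget=100)
```

Smarter selection strategies have to beat this uniform draw, which costs nothing to compute.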

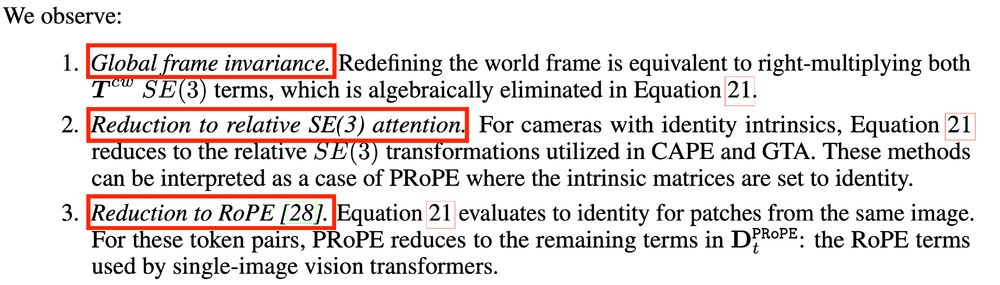

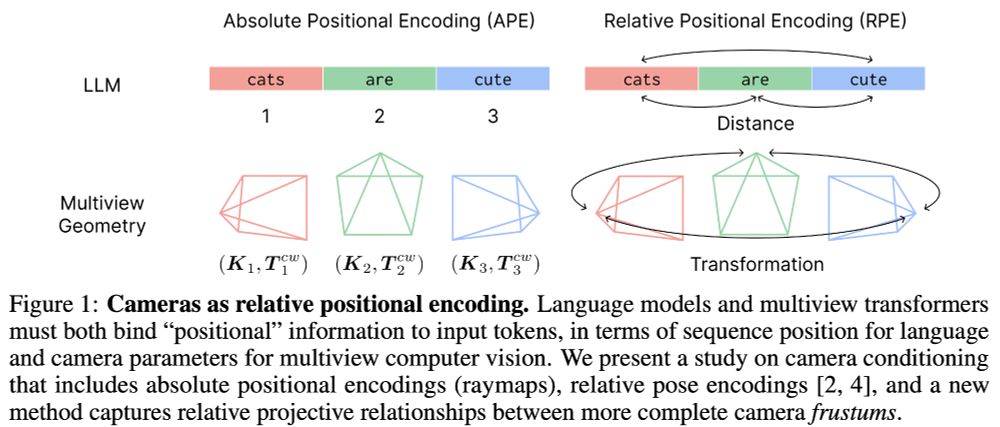

It is elegant: in special cases, it reduces to known baselines like GTA (with identity intrinsics) and RoPE (when all views share the same camera).

arxiv.org/abs/2507.10496

Exciting topics: lots of generative AI using transformers, diffusion, 3DGS, etc. focusing on image synthesis, geometry generation, avatars, and much more - check it out!

So proud of everyone involved - let's go🚀🚀🚀

niessnerlab.org/publications...

arxiv.org/abs/2505.20802

colab.research.google.com/drive/16GJyb...

Comments welcome!

NO. WRONG.

You have just forgotten how good search used to be.

Google broke its own flagship product, and so you are accepting a demented chatbot's half-baked Gish gallop because we no longer have functional web search.

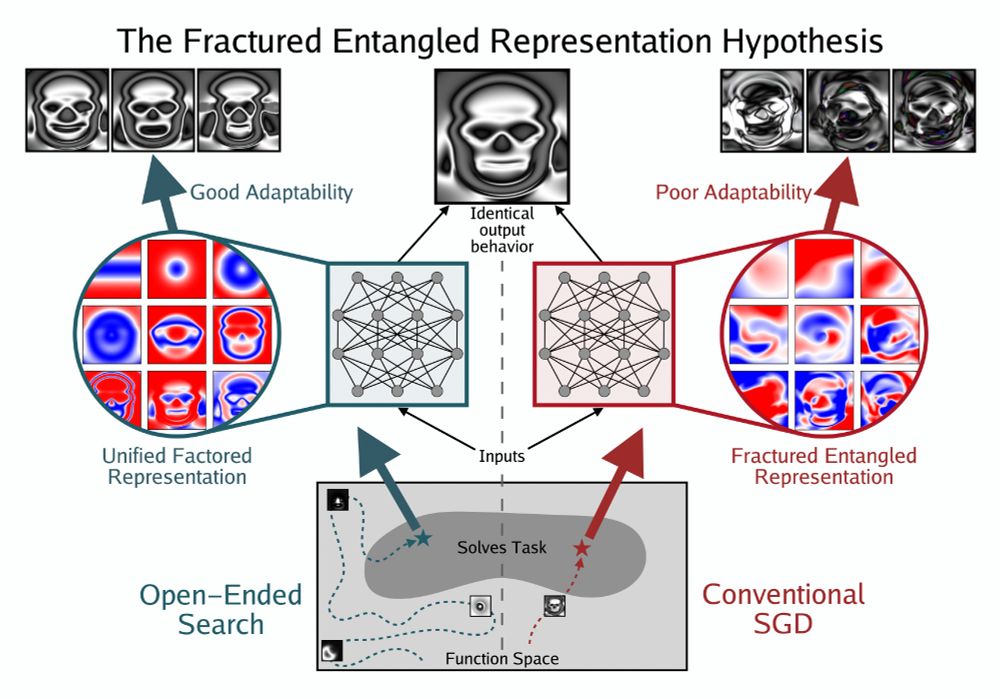

Paper: arxiv.org/abs/2505.11581

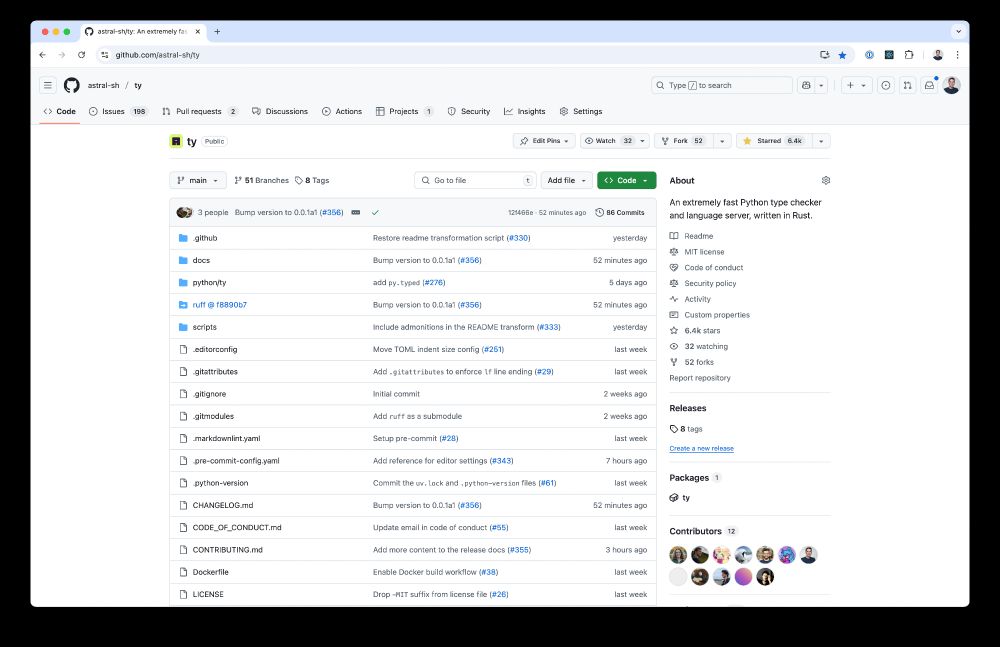

In early testing, it's 10x, 50x, even 100x faster than existing type checkers. (We've seen >600x speed-ups over Mypy in some real-world projects.)

Model: huggingface.co/BlinkDL/rwkv...

paper: huggingface.co/papers/2503....

✨ Apache2.0

✨ Supports 100+ languages

✨ 0.1B runs smoothly on low-power devices

✨ 0.4B/1.5B/2.9B are coming soon!!

A new language model uses diffusion instead of next-token prediction. That means it can back out of a hallucination before it commits to the text. This is a big win for areas like law & contracts, where global consistency is valued.

timkellogg.me/blog/2025/02...

A mesh-based 3D representation for training radiance fields from collections of images.

radfoam.github.io

arxiv.org/abs/2502.01157

Project co-led by my PhD students Shrisudhan Govindarajan and Daniel Rebain, and w/ co-advisor Kwang Moo Yi

github.com/theialab/rad...

Belated happy Valentine's day 🥰

- 800k samples in total, similar in composition to the data used to train the DeepSeek-R1 Distill models.

- 300k from DeepSeek-R1

- 300k from Gemini 2.0 Flash Thinking

- 200k from Dolphin chat

huggingface.co/datasets/cog...
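As a quick sanity check on the numbers listed above, the three sources add up to the stated 800k. That puts the mix at 37.5% DeepSeek-R1, 37.5% Gemini and 25% Dolphin chat. The counts come from the post. The short snippet below only does the arithmetic:

```python
# Per-source sample counts as listed in the post.
sources = {
    "DeepSeek-R1": 300_000,
    "Gemini 2.0 Flash Thinking": 300_000,
    "Dolphin chat": 200_000,
}

total = sum(sources.values())
# Fraction of the full dataset contributed by each source.
shares = {name: count / total for name, count in sources.items()}

for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```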

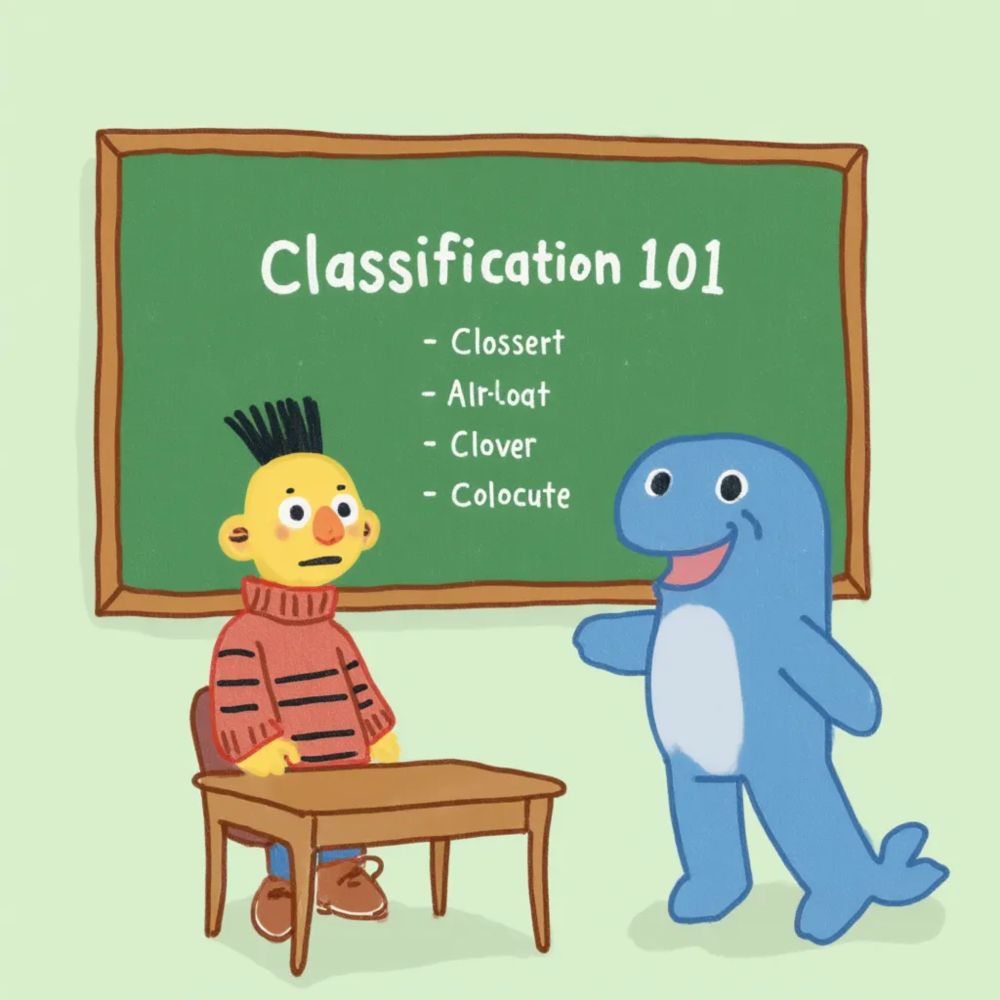

Use DeepSeek to generate high-quality training data, then distil that knowledge into ModernBERT for fast, efficient classification.

New blog post: danielvanstrien.xyz/posts/2025/d...

Really neat stuff! One can easily replace the slower, more expensive third-party LLM router with a fast, cheap & local model.

your blocks, likes, lists, and just about everything except chats are PUBLIC

you can pin custom feeds; i like quiet posters, best of follows, mutuals, mentions

if your chronological feed is overwhelming, you can make and pin a personal list of "unmissable" people