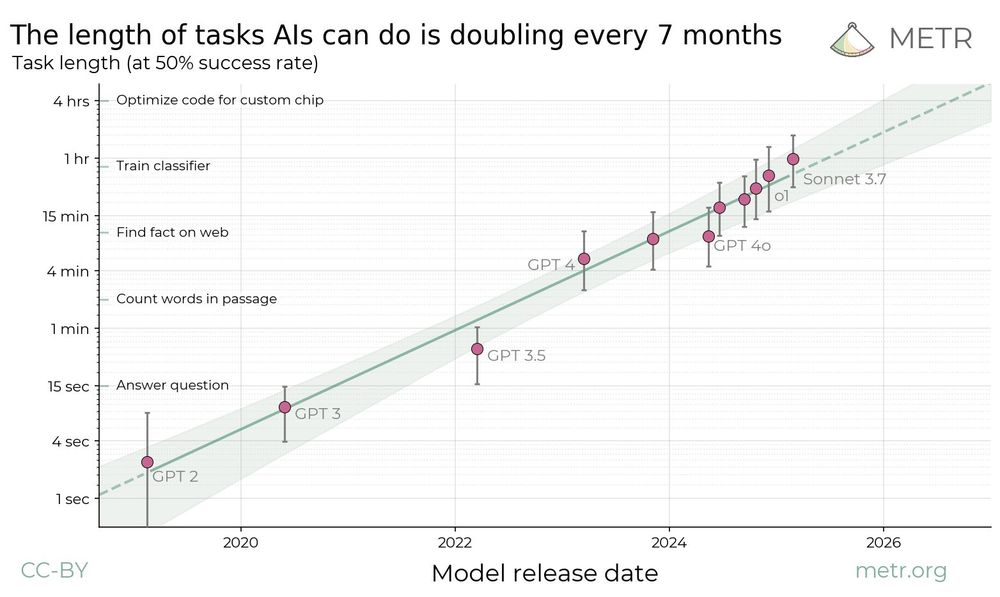

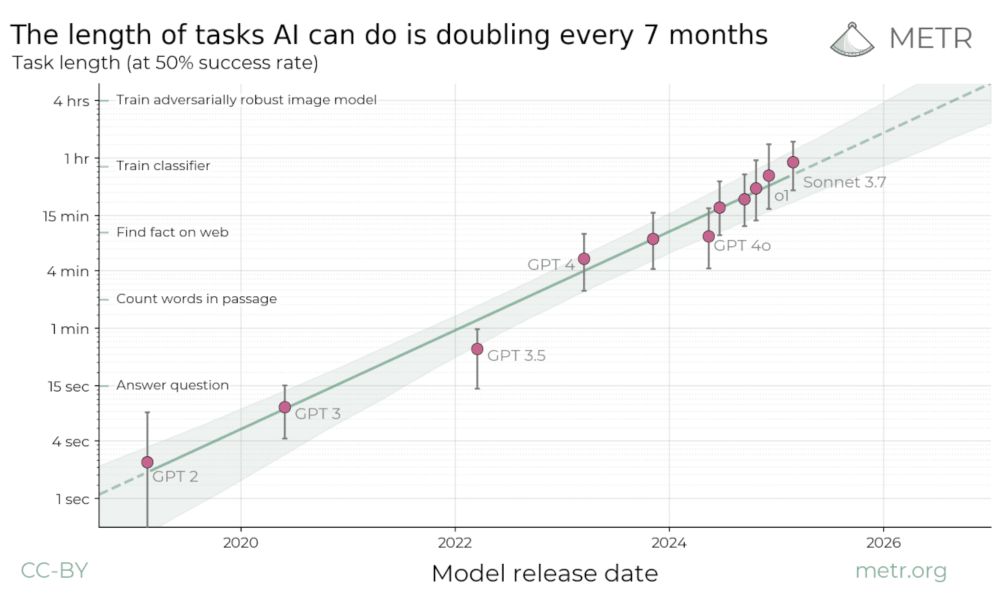

The graph also incorrectly showed a [0,0] CI.

We’ve updated the blog post to show a new figure, which more accurately conveys what we observed.
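To illustrate why a [0,0] interval is misleading when zero successes are observed in a small sample, here is a minimal sketch using the Wilson score interval. The choice of the Wilson method, the function name `wilson_interval`, and the trial count of 18 (borrowed from the task count) are all assumptions made for illustration; the source does not say how the corrected interval was computed or how many attempts were scored.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must be between 0 and trials")

    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (
        z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)) / denom
    )
    # Clamp to [0, 1] to absorb floating-point noise at the boundaries.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical input: 0 passes out of 18 trials (trial count is an assumption).
low, high = wilson_interval(0, 18)
print(f"95% Wilson interval: [{low:.3f}, {high:.3f}]")
```

Under these assumptions the lower bound is 0 but the upper bound is roughly 0.18, so observing zero passes does not rule out a nonzero underlying pass rate.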

- Small sample (18 tasks)

- These repositories may have higher-than-average standards

- Basic agent scaffold

- We used Claude 3.7 to help compare to our developer productivity RCT, but more recent models may have a different algorithmic vs. holistic scoring gap

Speculatively, it doesn’t seem like agents fundamentally lack these capabilities.

- Core functionality errors

- Poor test coverage

- Missing/incorrect documentation

- Linting/formatting violations

- Other quality issues (verbosity, brittleness, poor maintainability)

All agent attempts contain at least 3 of these issues!

We then scored its PRs with human-written tests, where it passed 38% of the time, and manual review, where it never passed.

What separates real coding from SWE benchmark tasks? Was human input holding the AI back?
