andreeadogaru.github.io/Gen3DSR

Reach out if you're interested in discussing our research or exploring international postdoc opportunities @fau.de

Paper: arxiv.org/abs/2412.13244

Paper: ieeexplore.ieee.org/document/107...

Project page: hannahhaensen.github.io/DynaMoN/

Code: github.com/HannahHaense...

The current chart, using our house as an example

Still at 50% in November.

Artwork by Micronaut.

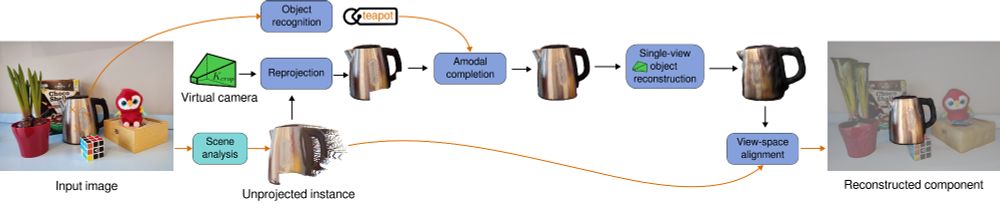

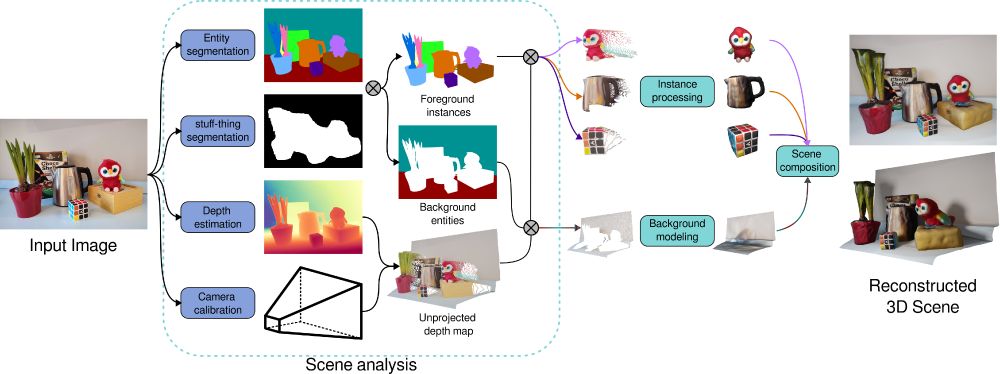

✨ Gen3DSR: Generalizable 3D Scene Reconstruction via Divide and Conquer from a Single View ✨

🌐 Project page: andreeadogaru.github.io/Gen3DSR

📄 Paper: arxiv.org/abs/2404.03421

👩‍💻 Code: github.com/AndreeaDogar...

(1/5)

Novel view synthesis of scanning electron microscopy images and conditional colorization.

📝 arXiv: arxiv.org/abs/2410.21310

🎨 Project page: ronly2460.github.io/ArCSEM

(1/3)

NeRFtrinsic Four: An End-To-End Trainable NeRF Jointly Optimizing Diverse Intrinsic and Extrinsic Camera Parameters has been accepted to #CVIU!!!

Many thanks to my co-authors! Shout-out to Fabian Deuser, @visionbernie.bsky.social, Norbert Oswald and Daniel Roth.

(1/4)

Project page: mert-o.github.io/ThermalNeRF/

Paper: arxiv.org/abs/2403.11865

Dataset: zenodo.org/records/1106...

1/7
