More at human-ai-collaboration-lab.kellogg.northwestern.edu/ic2s2

See you there!

#IC2S2

It's also a framework that can generalize to multimedia.

Consider this: what do you notice about her legs at the 16s mark?

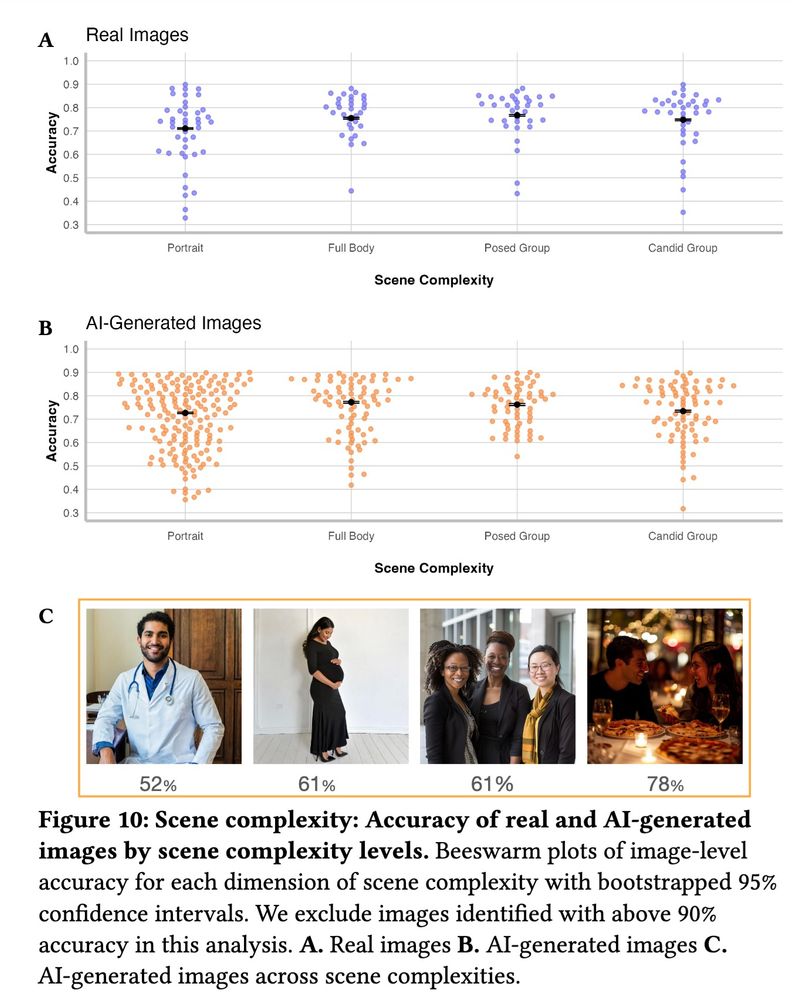

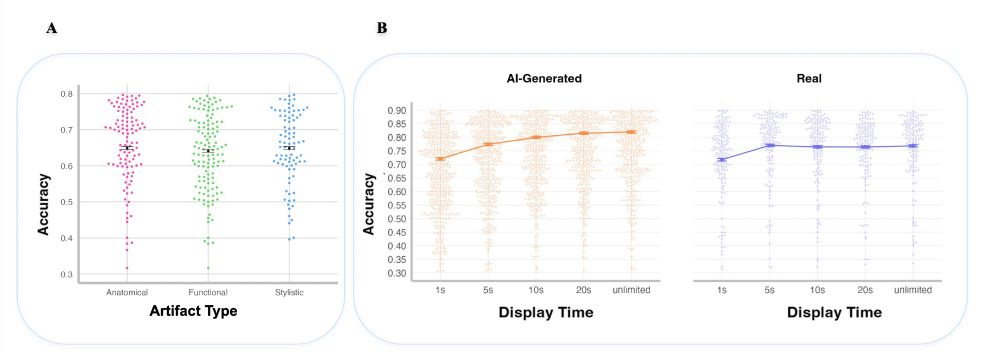

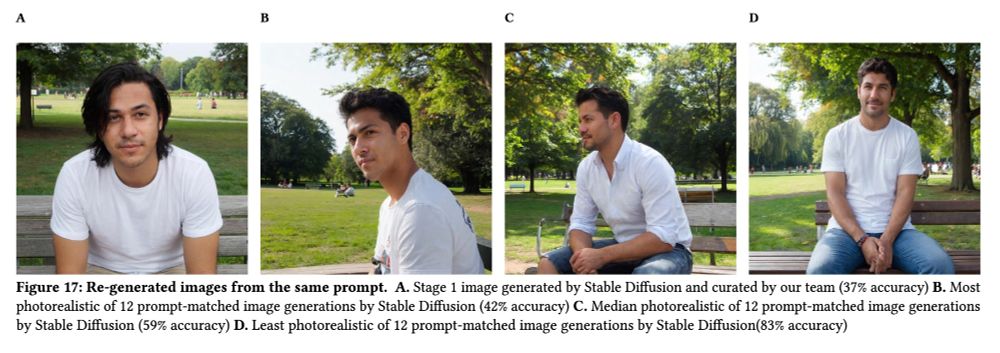

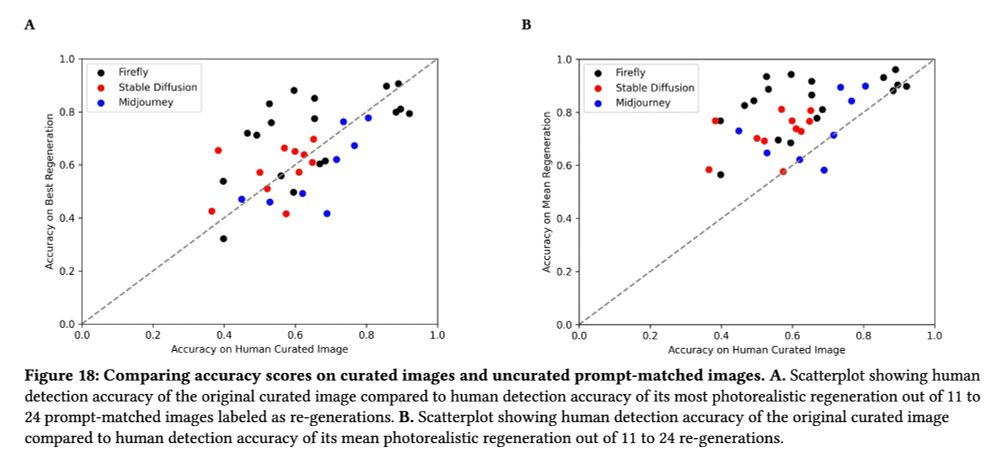

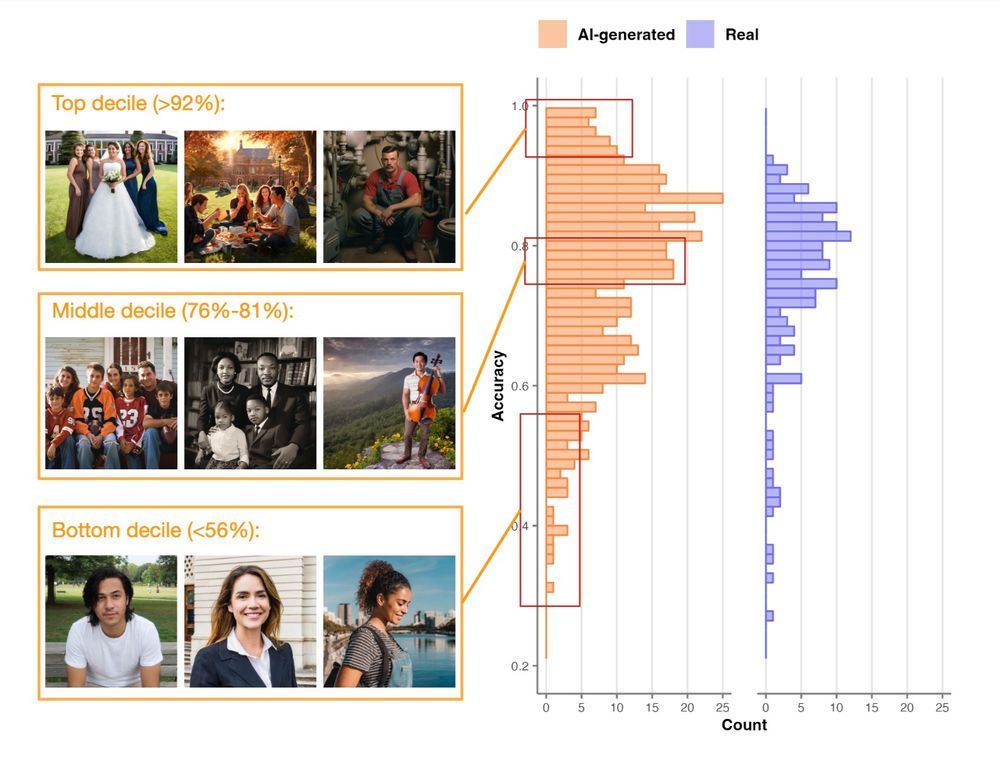

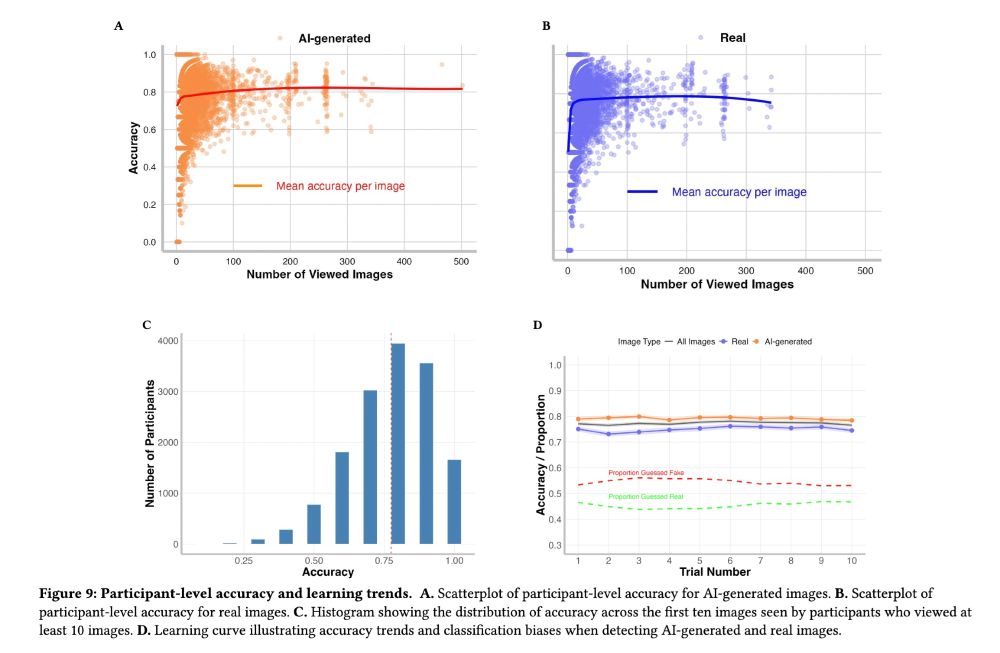

Photorealism varies from image to image and person to person

83% of AI-generated images are identified as AI better than random chance would predict

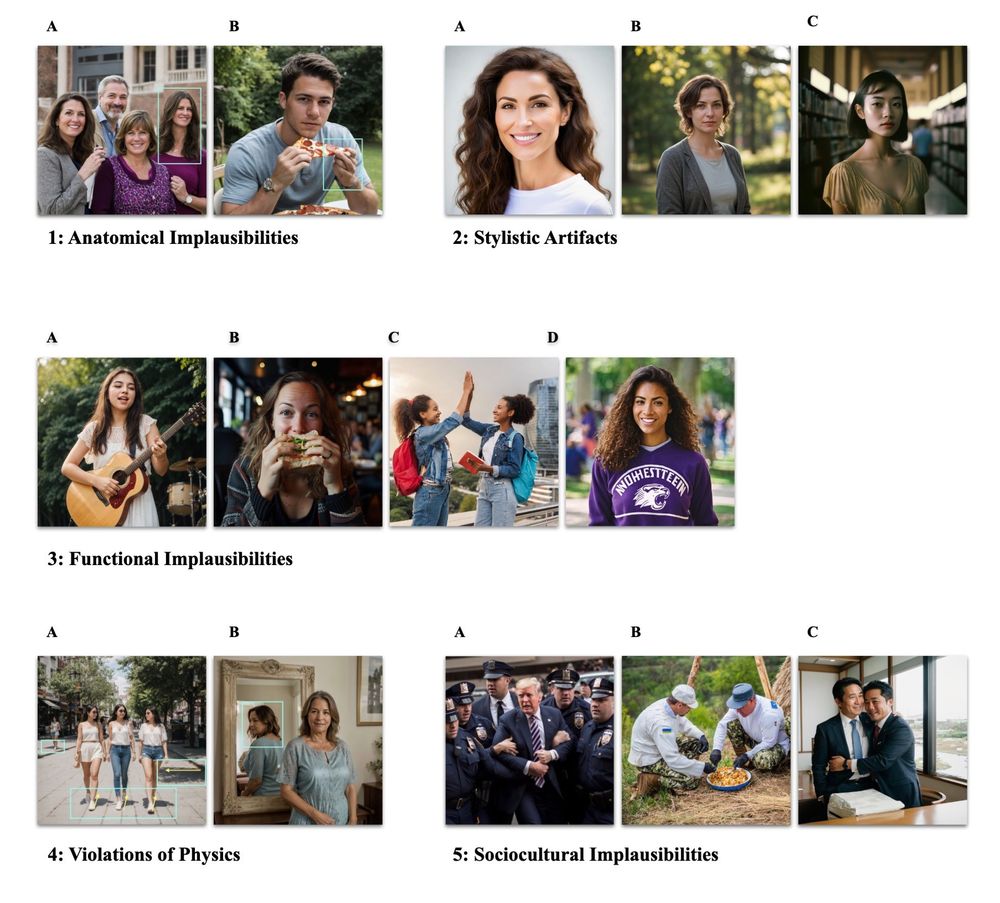

Large-scale experiment with 750k observations addressing:

(1) How photorealistic are today's AI-generated images?

(2) What features of images influence people's ability to distinguish real/fake?

(3) How should we categorize artifacts?

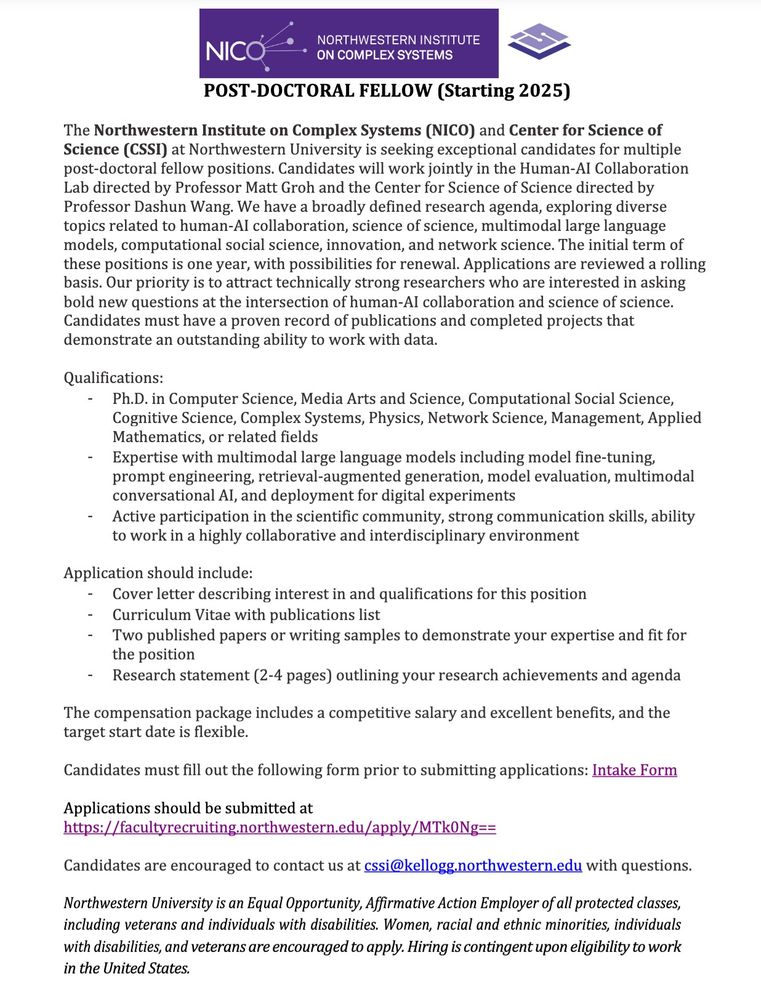

Dashun Wang and I are seeking a creative, technical, interdisciplinary researcher for a joint postdoc fellowship between our labs.

If you're passionate about Human-AI Collaboration and Science of Science, this may be for you! 🚀

Please share widely!

So many fantastic discussions as we witnessed the frontier of AI shift even further into hyperdrive✨

Props to students for all the hard work and big thanks to teaching assistants and guest speakers 🙏

And, why does the amodal completion illusion lead us to see a super long reindeer in the image on the right?

This week @chazfirestone.bsky.social joined the NU CogSci seminar series to address these fundamental questions

Re-reading this before joining a panel of lawyers to speak on deepfakes

go.activecalendar.com/FordhamUnive...

Lack of domain expertise & lack of knowledge of AI's capabilities and limitations -> falling for & even preferring simulacra

If you're excited about computational social science, LLMs, digital experiments, real-world problem solving, this could be a great fit

Please reshare!

Deets 👇

User error seems to be the culprit

If users don't know how to interact with the technology (however easy it may seem), then the experiment misses out on what would happen if participants had basic knowledge of LLMs
