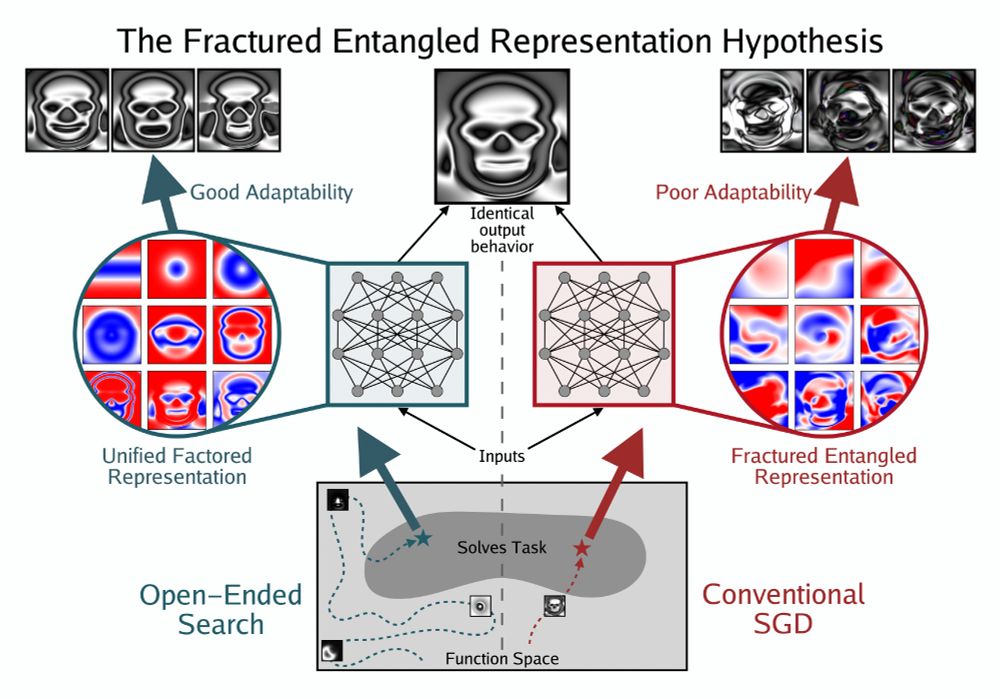

Paper: arxiv.org/abs/2509.19349

Blog: sakana.ai/shinka-evolve/

GitHub Project: github.com/SakanaAI/Shi...

arxiv.org/abs/2206.00730

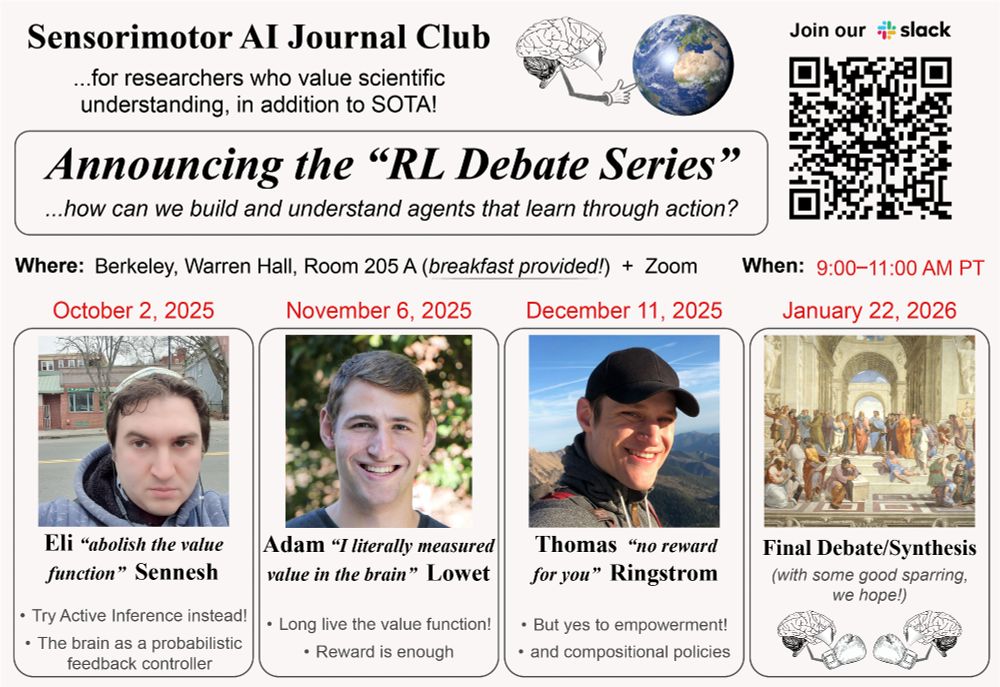

📢 To address these, the Sensorimotor AI Journal Club is launching the "RL Debate Series"👇

w/ @elisennesh.bsky.social, @noreward4u.bsky.social, @tommasosalvatori.bsky.social

🧵[1/5]

🧠🤖🧠📈

So I asked ChatGPT to play chess with me

It tried to make an illegal move after 3 moves

chatgpt.com/share/68ba54...

So I think the answer is still no. It can't.

It seems it can solve it, but the chain of thought it used made absolutely zero sense. It looked more like it was trying to regenerate the same prompt over and over again and got stuck.

Paper: arxiv.org/abs/2505.11581

Paper: arxiv.org/abs/2506.06105

Code: github.com/SakanaAI/Tex...

If someone tested you, wouldn't you? And if you tested LLMs?

Finding: LLMs can tell when they are being evaluated🧠

We can only wait to see how they act on it

alphaxiv.org/pdf/2505.23836

📈🧠🤖

reasoning that holds in new environments.

However, weighting the games is complicated, so merging (my beloved "fusing" from the title) is used instead.

📈🤖🧠

alphaxiv.org/pdf/2505.16401
