My experience is that LLMs make using TLA+ much easier. Last week, for instance, I wrote up a bug report that used a TLA+ spec to outline the old, buggy behavior. It made the dynamics much clearer.

jack-vanlightly.com/...

If you fix the number of iterations, RANSAC becomes an argmax over a fixed set of hypotheses. You can then turn the inlier count into a policy for hypothesis selection and train it with policy gradient (DSAC, CVPR 2017).

github.com/vislearn/DSA...
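A minimal sketch of that idea, under loud assumptions: a toy 2D line-fitting problem, a plain inlier count as the hypothesis score, and a softmax with a single learnable sharpness `alpha` standing in for the network parameters DSAC actually trains. All names here (`selection_policy`, `reinforce_grad`, `make_problem`, etc.) are hypothetical, and this is not the code from the DSAC repository. As `alpha` grows, the softmax collapses to RANSAC's argmax; for finite `alpha`, the expected task loss is differentiable, and its gradient can be estimated by sampling hypotheses (the score-function / REINFORCE estimator).

```python
import math
import random

def fit_line(p, q):
    """Minimal-sample solver: normalized line a*x + b*y + c = 0 through two points."""
    (x1, y1), (x2, y2) = p, q
    a, b = y2 - y1, x1 - x2
    norm = math.hypot(a, b)
    a, b = a / norm, b / norm
    return a, b, -(a * x1 + b * y1)

def inlier_count(line, points, threshold=0.1):
    # RANSAC score: number of points within `threshold` of the line.
    a, b, c = line
    return sum(1 for x, y in points if abs(a * x + b * y + c) < threshold)

def line_loss(line, gt):
    # Hypothetical task loss: 1 - |cos| of the angle between line normals (0 = same orientation).
    return 1.0 - abs(line[0] * gt[0] + line[1] * gt[1])

def selection_policy(scores, alpha):
    """Softmax over scores; alpha -> infinity recovers RANSAC's hard argmax."""
    logits = [alpha * s for s in scores]
    m = max(logits)  # subtract the max logit for numerical stability
    w = [math.exp(t - m) for t in logits]
    z = sum(w)
    return [wi / z for wi in w]

def expected_loss(scores, losses, alpha):
    p = selection_policy(scores, alpha)
    return sum(pi * li for pi, li in zip(p, losses))

def analytic_grad(scores, losses, alpha):
    # d log p_j / d alpha = s_j - sum_k p_k s_k, so
    # dE/d alpha = sum_j p_j * loss_j * (s_j - s_bar).
    p = selection_policy(scores, alpha)
    s_bar = sum(pi * si for pi, si in zip(p, scores))
    return sum(pi * li * (si - s_bar) for pi, li, si in zip(p, losses, scores))

def reinforce_grad(scores, losses, alpha, rng, num_samples=1000):
    # Policy-gradient estimate: sample j ~ p, average loss_j * d log p_j / d alpha.
    p = selection_policy(scores, alpha)
    s_bar = sum(pi * si for pi, si in zip(p, scores))
    idx = rng.choices(range(len(scores)), weights=p, k=num_samples)
    return sum(losses[j] * (scores[j] - s_bar) for j in idx) / num_samples

def make_problem(rng, n_inliers=40, n_outliers=20, n_hyps=64):
    # Hypothetical toy data: noisy points on y = 2x + 1 plus uniform outliers.
    xs = [rng.uniform(-1, 1) for _ in range(n_inliers)]
    pts = [(x, 2 * x + 1 + rng.gauss(0, 0.03)) for x in xs]
    pts += [(rng.uniform(-1, 1), rng.uniform(-1, 3)) for _ in range(n_outliers)]
    gt = fit_line((0.0, 1.0), (1.0, 3.0))
    # Fixed number of iterations -> fixed pool of hypotheses from random minimal samples.
    hyps = [fit_line(*rng.sample(pts, 2)) for _ in range(n_hyps)]
    scores = [inlier_count(h, pts) for h in hyps]
    losses = [line_loss(h, gt) for h in hyps]
    return hyps, scores, losses

if __name__ == "__main__":
    rng = random.Random(0)
    hyps, scores, losses = make_problem(rng)
    alpha = 0.0  # alpha = 0 -> uniform choice among hypotheses
    print("initial expected loss:", expected_loss(scores, losses, alpha))
    for _ in range(200):
        # Only alpha is learned here; DSAC instead backpropagates into a network.
        alpha -= 0.05 * reinforce_grad(scores, losses, alpha, rng)
    print("learned alpha:", alpha)
    print("final expected loss:", expected_loss(scores, losses, alpha))
    best = max(range(len(scores)), key=scores.__getitem__)
    print("argmax (plain RANSAC) loss:", losses[best])
```

The analytic gradient is there only to make the sampled estimator checkable on this toy; in a real pipeline the gradient would flow into whatever produces the hypotheses and scores, not just a temperature.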

Generative Adversarial Nets

Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio

Sequence to Sequence Learning with Neural Networks

Ilya Sutskever, Oriol Vinyals, Quoc V. Le

We got this idea after their cool work on improving Plug and Play with FM: arxiv.org/abs/2410.02423

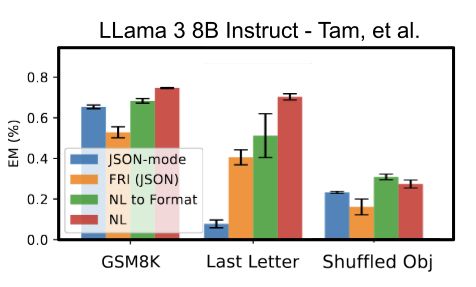

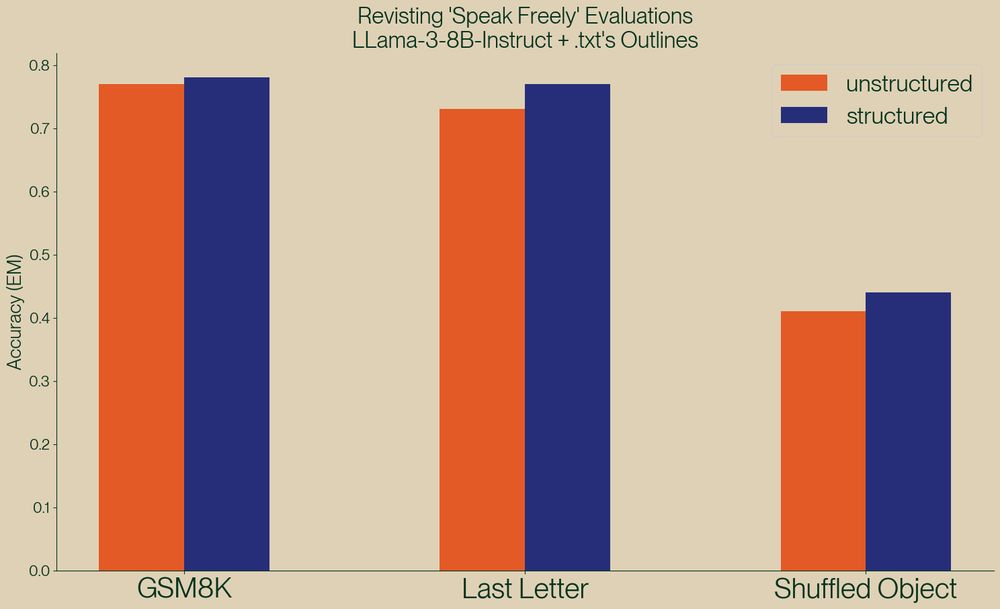

Well, we've taken a look and found a serious issue in this paper, and shown, once again, that structured generation *improves* evaluation performance!