www.youtube.com/watch?v=XLZ0...

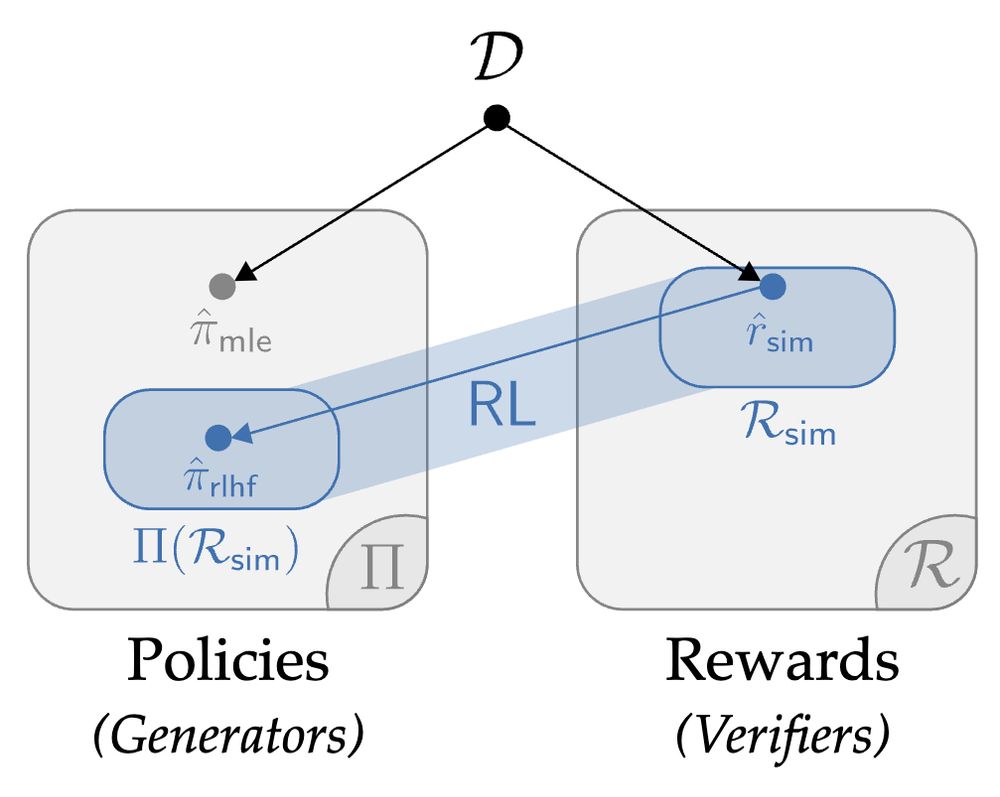

1. efficient training (i.e., limiting backprop through time)

2. expressive model classes (e.g. flow matching)

3. inference-time scaling (sequential and parallel)

go.bsky.app/2Gibu1a

Happy to meet old and new friends and talk about all aspects of RL: data, environment structure, and reward! 😀

In the Wednesday 11am-2pm poster session, I will present HyPO, which gets the best of both worlds of offline and online RLHF: neurips.cc/virtual/2024...

Also, I'm on the industry job market and looking forward to connecting 😁!

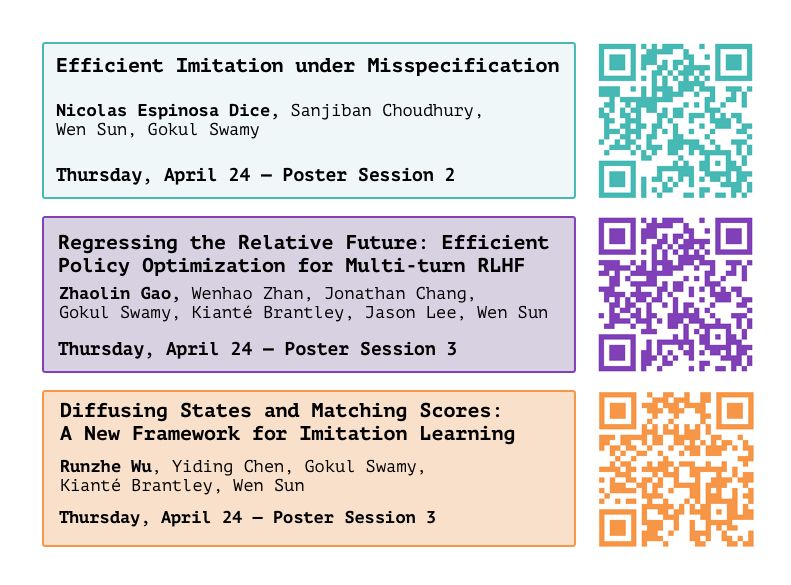

arxiv.org/abs/2406.04219, arxiv.org/abs/2406.01462, arxiv.org/abs/2404.16767.