Get started: run pip install -U vllm, then add the --quantization ptpc_fp8 flag when launching vLLM.

Full details: blog.vllm.ai/2025/02/24/p...

[1/2]
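
As a rough sketch of what "add --quantization ptpc_fp8" looks like in practice, the snippet below only assembles a vllm serve command line and prints it; it does not launch anything. The helper name build_serve_command and the model ID are placeholders chosen for illustration and are not from the original post.

```python
import shlex
from typing import List


def build_serve_command(model: str, quantization: str = "ptpc_fp8") -> List[str]:
    """Assemble (but do not run) a `vllm serve` command with a quantization flag."""
    return ["vllm", "serve", model, "--quantization", quantization]


# Placeholder model ID, used only for illustration (an assumption, not from the post).
cmd = build_serve_command("meta-llama/Llama-3.1-8B-Instruct")

# Print a shell-safe version of the command for copy and paste.
print(shlex.join(cmd))
```

Whether a given model and GPU support this quantization mode depends on the installed vLLM build, so treat the printed command as a starting point to verify against the blog post linked above.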

20% throughput boost & 60% memory reduction for models like Llama, Gemma & Qwen with just one line of code! Works on AMD!

www.linkedin.com/blog/enginee...

Check out the guide on 🤗 Hugging Face for how to leverage this high throughput embedding inference engine!

huggingface.co/blog/michael...

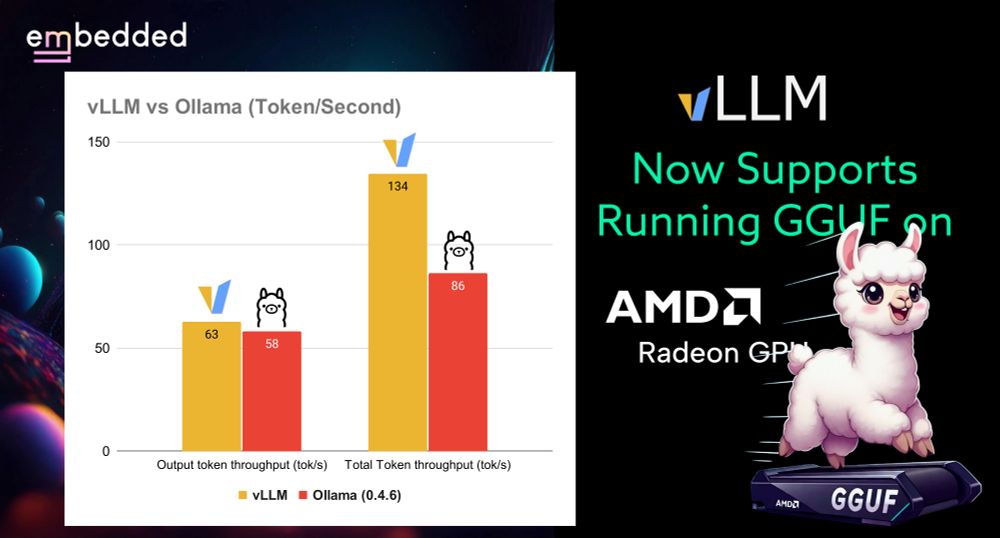

Check it out: embeddedllm.com/blog/vllm-no...

What's your experience with vLLM on AMD? Any features you want to see next?

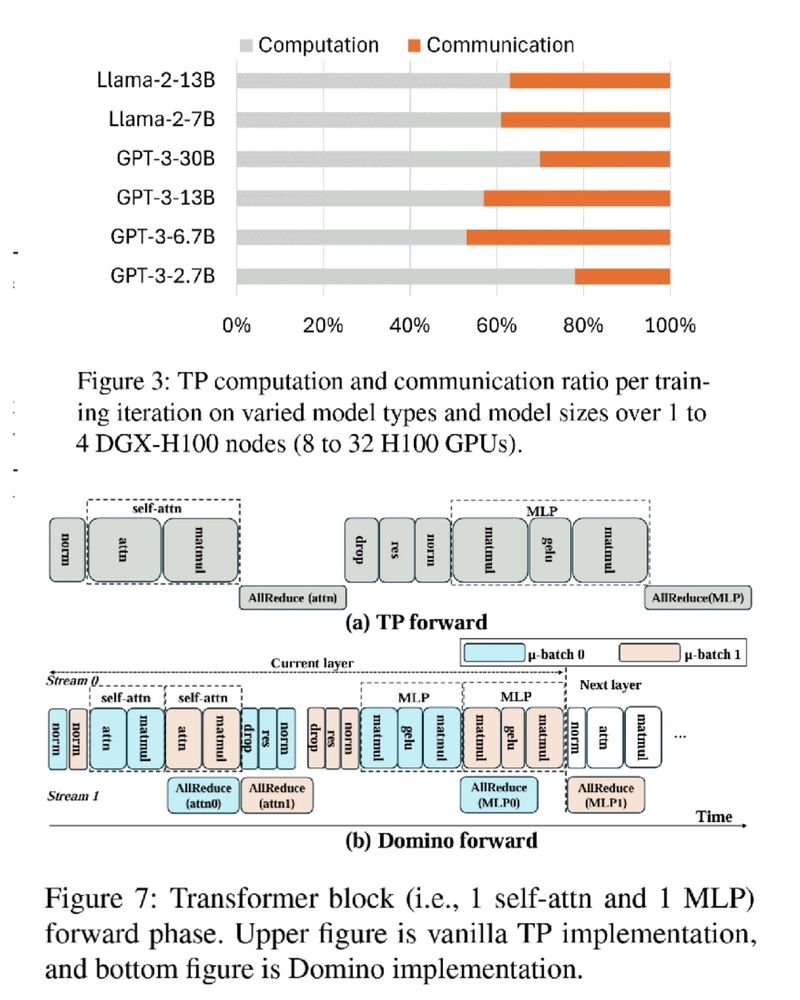

DeepSpeed Domino, with a new tensor parallelism engine, minimizes communication overhead for faster LLM training. 🚀

✅ Near-complete communication hiding

✅ Multi-node scalable solution

Blog: github.com/microsoft/De...

@hotaisle.bsky.social

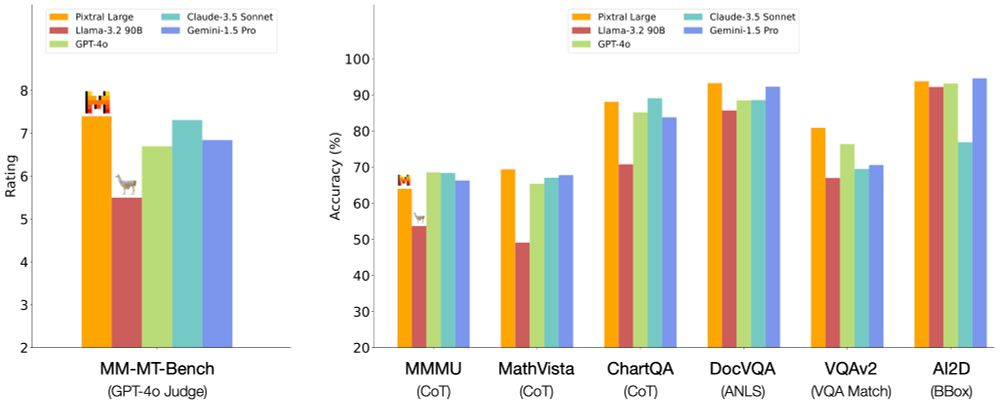

Run Pixtral Large with multiple input images from day 0 using vLLM.

Install vLLM:

pip install -U vllm

Run Pixtral Large:

vllm serve mistralai/Pixtral-Large-Instruct-2411 --tokenizer_mode mistral --limit_mm_per_prompt 'image=10' --tensor-parallel-size 8
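
Once the server is up, a single prompt can carry several images. The sketch below builds a chat-completions request body in the OpenAI-compatible format that vLLM's server accepts, with one image_url entry per image. The helper name build_multi_image_request, the example URLs, and the MAX_IMAGES constant (mirroring image=10 in the command above) are assumptions for illustration; nothing is sent over the network.

```python
import json
from typing import Dict, List

# Mirrors --limit_mm_per_prompt 'image=10' from the serve command (assumed to match).
MAX_IMAGES = 10


def build_multi_image_request(
    prompt: str,
    image_urls: List[str],
    model: str = "mistralai/Pixtral-Large-Instruct-2411",
) -> Dict:
    """Build a chat-completions request body with one text part and N image parts."""
    if len(image_urls) > MAX_IMAGES:
        raise ValueError(f"at most {MAX_IMAGES} images per prompt, got {len(image_urls)}")
    # One user message whose content is a list of typed parts: text first, then images.
    content = [{"type": "text", "text": prompt}]
    content += [{"type": "image_url", "image_url": {"url": url}} for url in image_urls]
    return {"model": model, "messages": [{"role": "user", "content": content}]}


# Hypothetical image URLs, used only to show the shape of the request.
payload = build_multi_image_request(
    "Compare these two charts.",
    ["https://example.com/a.png", "https://example.com/b.png"],
)
print(json.dumps(payload, indent=2))
```

The resulting JSON would typically be sent as a POST to the server's /v1/chat/completions endpoint; the host and port depend on how vllm serve was started.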

🤯 Expanded model support? ✅

💪 Intel Gaudi integration? ✅

🚀 Major engine & torch.compile boosts? ✅

🔗 github.com/vllm-project...
