Website: https://atcbosselut.github.io/

🔗 Full article: actu.epfl.ch/news/epfl-pr...

🤖 agents "taking over" science (hypogenic.ai and 📌)

🧪 Real scientists ➡️AI (e.g., materials, chem, physics)

📜 Theory + incentives for H-AI collab & credit (e.g., formalizing tacit knowledge)

new adventures for me, 🔄 if you can! 🙌

chenhaot.com/recruiting.h...

Apart from this, I'm also recruiting postdocs to develop novel training algorithms for reasoning models and agentic AI.

Main takeaway: In mechanistic interpretability, we need assumptions about how DNNs encode concepts in their representations (e.g., the linear representation hypothesis). Without them, we can claim any DNN implements any algorithm!

We’ve added more recent work and more immediately actionable directions for future work. Now published in Computational Linguistics!

How do #LLMs’ inner features change as they train? Using #crosscoders + a new causal metric, we map when features appear, strengthen, or fade across checkpoints—opening a new lens on training dynamics beyond loss curves & benchmarks.

#interpretability

Introducing Creative Preference Optimization (CrPO) and MuCE (Multi-task Creativity Evaluation Dataset).

Result: More novel, diverse, surprising text—without losing quality!

📝 Appearing at #EMNLP2025

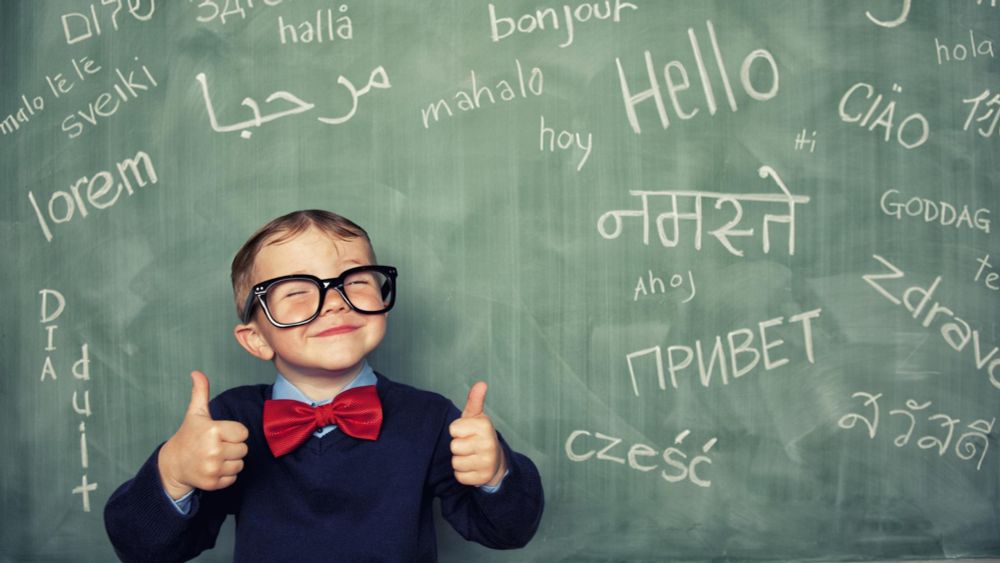

Trained on 15T tokens in 1,000+ languages, it’s built for transparency, responsibility & the public good.

Read more: actu.epfl.ch/news/apertus...

Find out more here: ai.epfl.ch/apertus-a-fu...

@abosselut.bsky.social @icepfl.bsky.social

From their tech report: huggingface.co/swiss-ai/Ape...

I briefly analyzed Apertus for MAZ:

www.maz.ch/news/apertus...

The link to the slide deck is in the reply.

In multilingual models, the same meaning can take far more tokens in some languages, penalizing users of underrepresented languages with worse performance and higher API costs. Our Parity-aware BPE algorithm is a step toward addressing this issue: 🧵

docs.google.com/document/d/1...

Come join our dynamic group in beautiful Lausanne!

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

Fully open and multilingual, the model is trained on CSCS's supercomputer "Alps" and supports sovereign, transparent, and responsible AI in Switzerland and beyond.

Read more here: ai.epfl.ch/a-language-m...

#ResponsibleAI

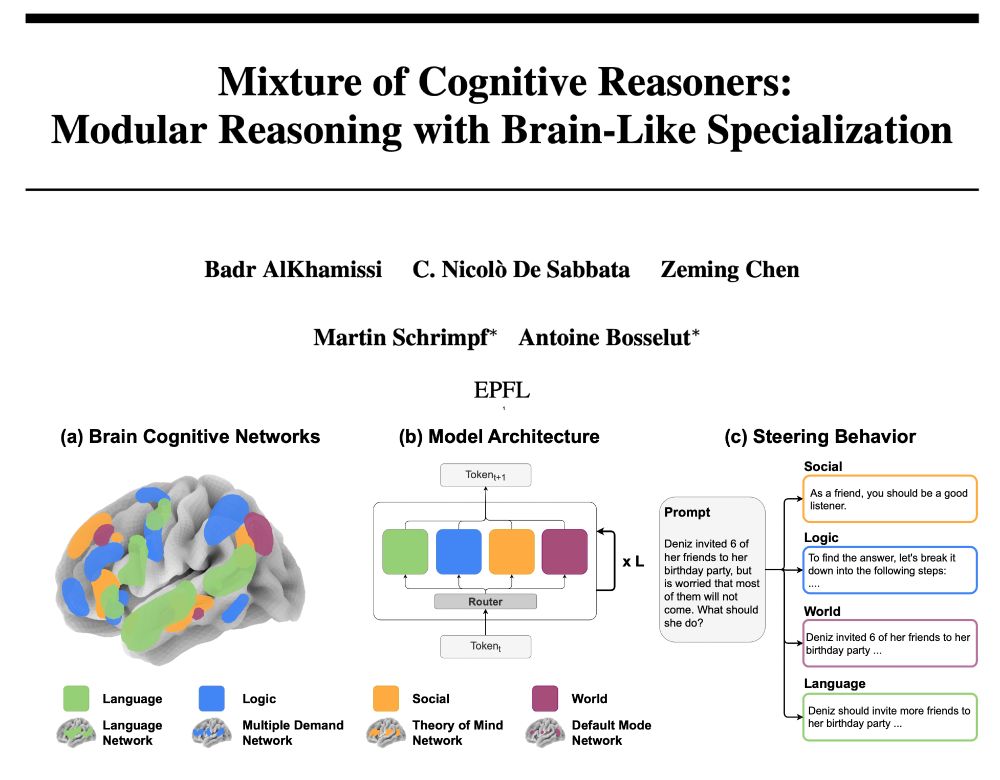

Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architecture inspired by the brain’s functional networks: language, logic, social reasoning, and world knowledge.

1/ 🧵👇

The INCLUDE benchmark from EPFL's NLP Lab and @cohereforai.bsky.social reveals that there is still a gap...

👉 Find out how benchmarks like INCLUDE can help make AI truly inclusive: actu.epfl.ch/news/beyond-...

Paper: aclanthology.org/2025.naacl-l...

Code: github.com/lucamouchel/...

#NAACL2025
