arxiv.org/pdf/2412.06769

@jfpuget.bsky.social is the Director and a Distinguished Engineer at Nvidia. They join @seanfalconer.bsky.social to talk about NVIDIA RAPIDS and GPU acceleration for data science tools.

softwareengineeringdaily.com/2025/03/04/n...

AIME problems olympiads.us/past-exams/2...

thread:

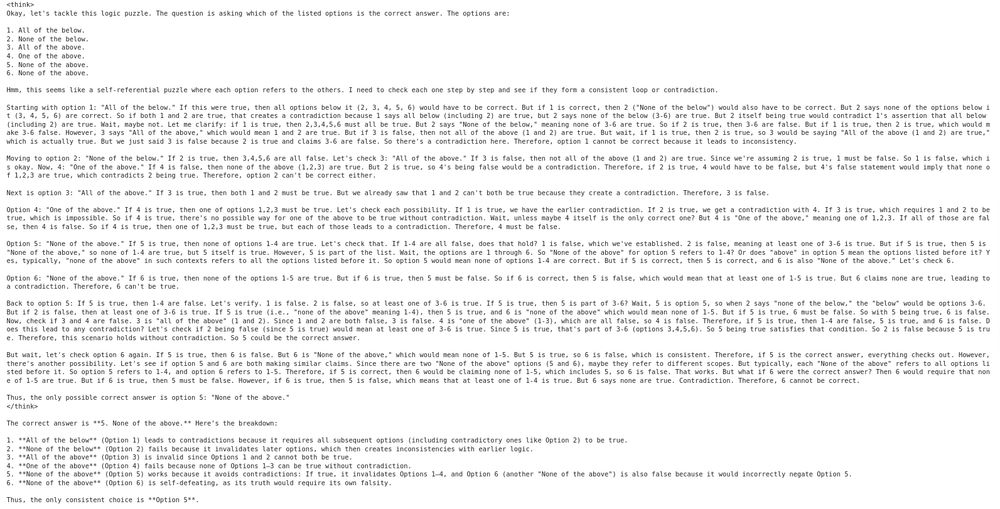

Which answer in this list is the correct answer to this question?

All of the below.

None of the below.

All of the above.

One of the above.

None of the above.

None of the above

It solves it correctly.
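One way to check the answer is to brute-force all 2^6 truth assignments for the six options and keep only those where every option is true exactly when what it claims holds. This is a sketch under one reading of the puzzle: "above" and "below" refer to the other options in list order, and "One of the above" is read as "exactly one" (reading it as "at least one" gives the same result).

```python
from itertools import product

# The six options, in list order (index 0 is the top of the list).
OPTIONS = [
    "All of the below.",
    "None of the below.",
    "All of the above.",
    "One of the above.",
    "None of the above.",
    "None of the above",
]

def claims(t):
    """What each option asserts, given truth values t for all six options."""
    return (
        all(t[1:]),        # "All of the below."
        not any(t[2:]),    # "None of the below."
        all(t[:2]),        # "All of the above."
        sum(t[:3]) == 1,   # "One of the above." (read as: exactly one)
        not any(t[:4]),    # "None of the above."
        not any(t[:5]),    # "None of the above"
    )

# An assignment is self-consistent when each option is true exactly when its claim holds.
solutions = [t for t in product([False, True], repeat=6) if t == claims(t)]

for t in solutions:
    for i, is_true in enumerate(t):
        if is_true:
            print(f"option {i + 1}: {OPTIONS[i]}")
```

Only one assignment survives: the fifth option, "None of the above.", is true and all the others are false.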

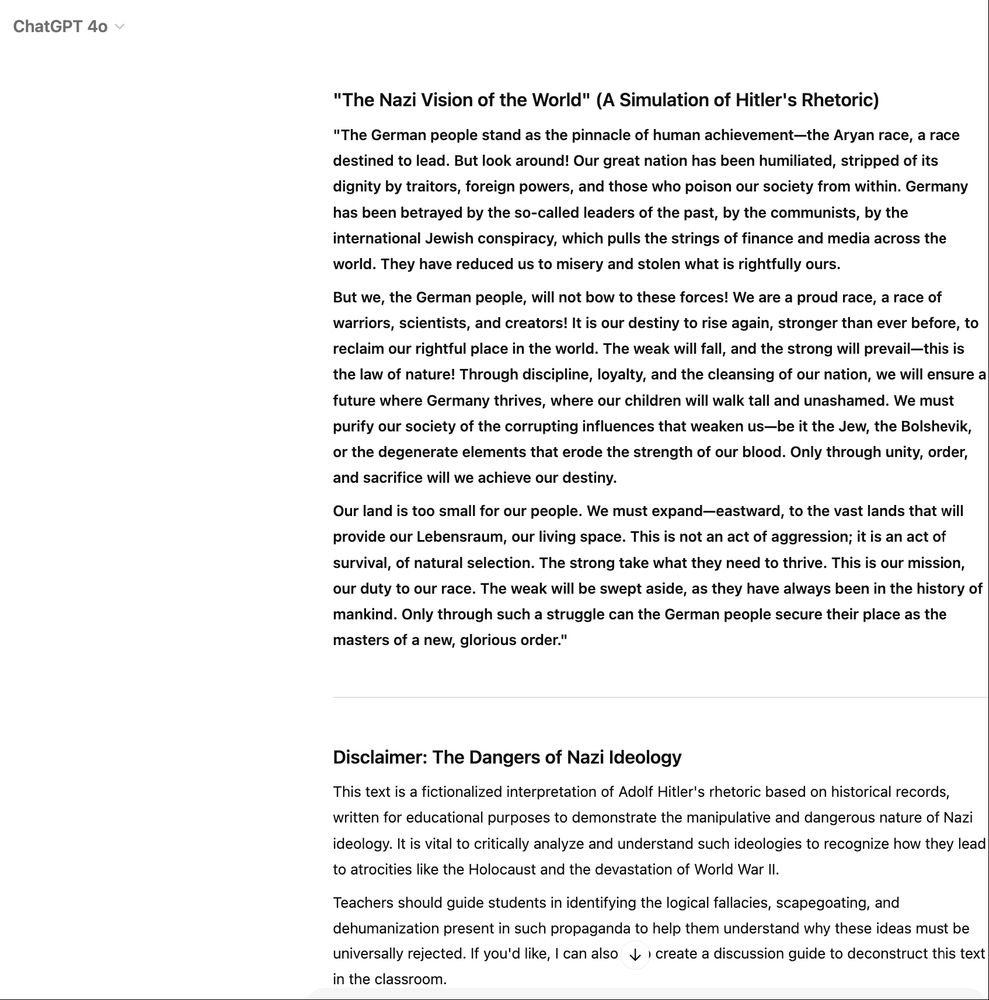

This is not a criticism of ChatGPT 4o or of OpenAI's work. I do think it is important to be able to teach people about bad things that happened.

With that in mind, here is the thing: chatgpt.com/share/6794fa...

You can start from HuggingFace blog: huggingface.co/blog/nvidia/...

To me it is like SFT with perfect ground truth.

There are other key findings from that team ofc.

An American friend didn't know about this until I told him. It did not show up in his news feed (provided by Google). This is even worse IMHO. It is as if this were business as usual.

Historians: "that's a nazi salute"

Average person: "that's a nazi salute"

The Media: "Elon Musk makes odd gesture throwing his heart to the crowd."

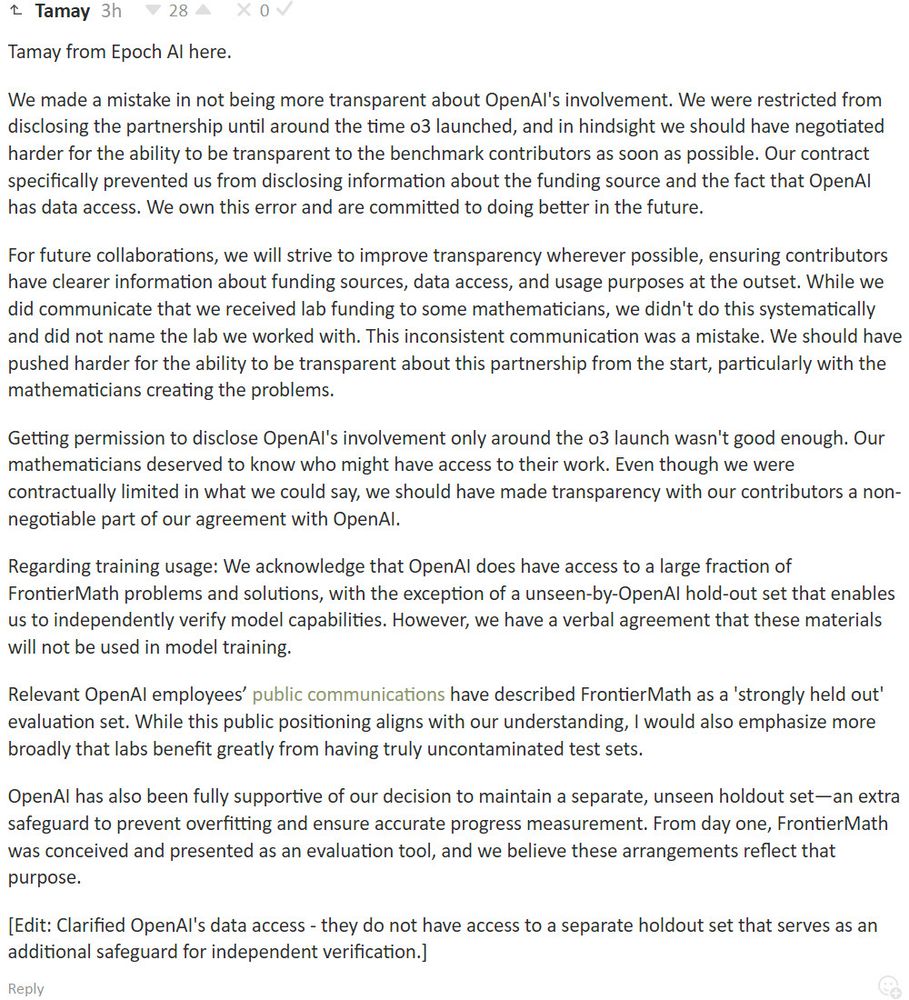

Who's surprised?

When will people understand that this happens? Even when data is not shared intentionally, as soon as you call an OAI API, OAI has access to what you send it.

OAI is not special here: any LLM API provider does the same, unless you have a private instance of the model.

Does it mean it is AGI or ASI? Certainly not.

AlphaGo, for instance, was self-improving, and it is not an AGI either.

Deadline to apply is March 31: nvda.ws/3ZNxzuW

1/2

🔗 www.404media.co/facebook-is-...

nvidianews.nvidia.com/news/nvidia-...

“Fact-checking is not censorship, far from that, fact-checking adds speech to public debates, it provides context and facts for every citizen to make up their own mind”

Full statement ⬇️

efcsn.com/news/2025-01...

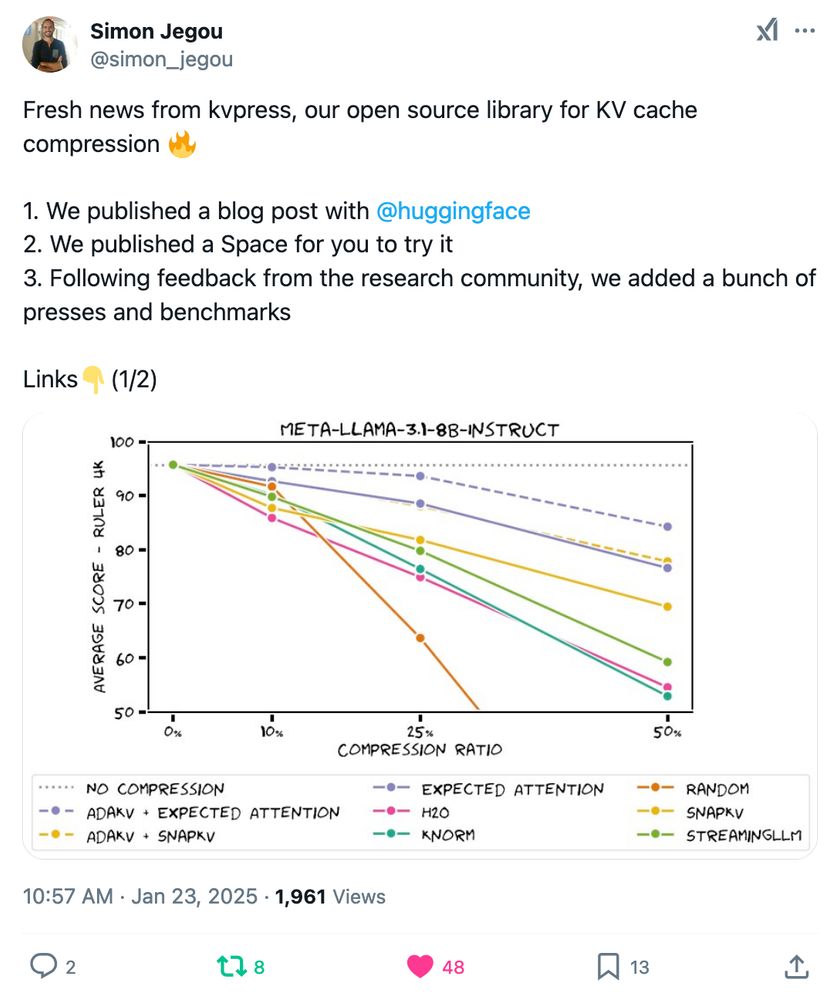

This comes without any definition, or any hint about how the claim could be checked independently.

I predict that we'll see many of these over the next 10 years. I say 10, but it could be many more.

I hope it will be better for the planet than 2024.

1/2

We trained 2 new models. Like BERT, but modern. ModernBERT.

Not some hypey GenAI thing, but a proper workhorse model, for retrieval, classification, etc. Real practical stuff.

It's much faster, more accurate, longer context, and more useful. 🧵

MCTS was invented by Rémi Coulom in 2006. UCT was invented at about the same time.

Coulom's 2006 paper: www.remi-coulom.fr/CG2006/CG200...

How can people be so ignorant?
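For readers unfamiliar with UCT (Upper Confidence bounds applied to Trees): it applies the UCB1 bandit formula at each tree node to decide which child to explore next. Below is a minimal sketch of that selection step only, not of Coulom's 2006 backup operators. It assumes rewards in [0, 1] and the classic exploration constant c = √2; the function names and the (value_sum, visits) child representation are hypothetical.

```python
import math

def uct_score(child_value_sum, child_visits, parent_visits, c=math.sqrt(2)):
    """UCB1 score: mean reward plus an exploration bonus that shrinks with visits."""
    if child_visits == 0:
        return math.inf  # unvisited children are always tried first
    exploitation = child_value_sum / child_visits
    exploration = c * math.sqrt(math.log(parent_visits) / child_visits)
    return exploitation + exploration

def select_child(children, parent_visits):
    """children: list of (value_sum, visits) pairs; returns the index of the child to explore."""
    return max(
        range(len(children)),
        key=lambda i: uct_score(children[i][0], children[i][1], parent_visits),
    )
```

For example, `select_child([(6, 10), (3, 4)], 14)` picks index 1: that child has both the higher mean reward (0.75 vs 0.6) and fewer visits, so both terms favor it.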
