lindsey

@cogqueries.bsky.social

Reposted by lindsey

The first talk in the Yale Law Library’s Critical Legal AI Literacies: Emily Bender on “Large Language Models and the Lawyer’s Search for Meaning” library.law.yale.edu/news/critica...

Critical Legal AI Literacies: Emily Bender on “Large Language Models and the Lawyer’s Search for Meaning” | Lillian Goldman Law Library

The Lillian Goldman Law Library, through the generous support of the Oscar M.

library.law.yale.edu

October 10, 2025 at 6:34 PM

While LLMs dominate the spotlight, there's a growing body of work showing that small, specialized models can rival massive generalized ones.

These Tiny Recursive Models (TRMs), at only 7M parameters, hit 45% on ARC-AGI-1, beating DeepSeek R1, o3-mini, and Gemini 2.5 Pro with <0.01% of their size.

October 10, 2025 at 12:37 AM

Reposted by lindsey

I don't think the average person is going to learn much by accessing a paywalled scientific paper.

But the current system keeps out journalists, science communicators, policy researchers, and fact checkers from reading into a topic as well.

In general I think it's hard to combat scientific misinformation when some of the best research is locked behind an academic paywall, while lots of nonsense gets published free for everyone to read in predatory journals.

September 29, 2025 at 6:17 PM

Reposted by lindsey

The length of tasks that can be completed at 50% success rate by AI continues to increase

September 29, 2025 at 11:13 PM

Reposted by lindsey

How often do LLMs snitch? Recreating Theo’s SnitchBench with LLM https://simonwillison.net/2025/May/31/snitchbench-with-llm/ #AI #evals #Claude (interesting)

May 31, 2025 at 11:59 PM

Reposted by lindsey

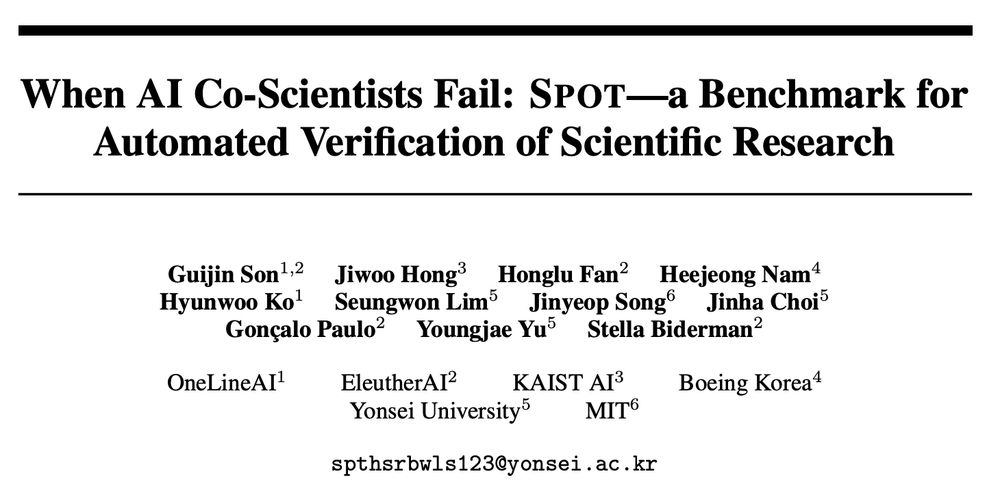

People keep plugging AI "Co-Scientists," so what happens when you ask them to do an important task like finding errors in papers?

We built SPOT, a dataset of STEM manuscripts across 10 fields annotated with real errors to find out.

(tl;dr not even close to usable) #NLProc

arxiv.org/abs/2505.11855

May 23, 2025 at 4:22 PM

Reposted by lindsey

Open-sourcing circuit tracing tools | Discussion

Open-sourcing circuit-tracing tools

Anthropic is an AI safety and research company that's working to build reliable, interpretable, and steerable AI systems.

www.anthropic.com

May 29, 2025 at 8:40 PM

AI scientists are here! Agents generated the hypotheses, proposed the experiments, analyzed the data, and produced the figures. Humans carried out the actual lab work. There's been some debate about whether the findings in this particular study were truly novel, but it's exciting nonetheless.

Demonstrating end-to-end scientific discovery with Robin: a multi-agent system | FutureHouse https://www.futurehouse.org/research-announcements/demonstrating-end-to-end-scientific-discovery-with-robin-a-multi-agent-system?_bhlid=8f1249274acd4f992442a7576a18ccd8170aa737 #AI #science #agents

May 29, 2025 at 7:59 AM

Reposted by lindsey

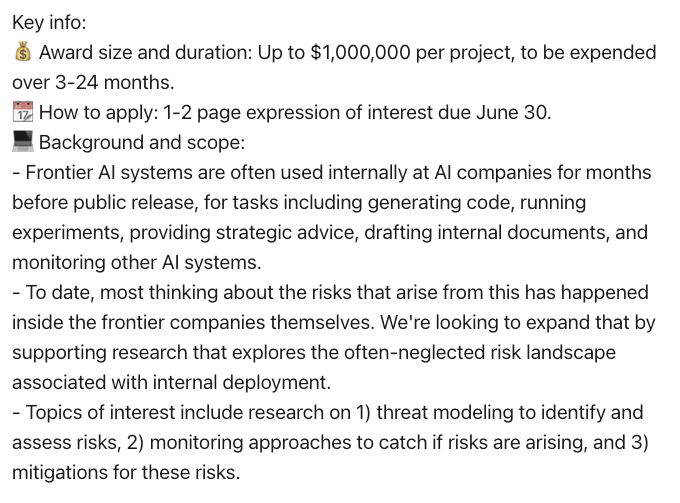

💡Funding opportunity—share with your AI research networks💡

Internal deployments of frontier AI models are an underexplored source of risk. My program at @csetgeorgetown.bsky.social just opened a call for research ideas—EOIs due Jun 30.

Full details ➡️ cset.georgetown.edu/wp-content/u...

Summary ⬇️

May 19, 2025 at 4:59 PM

Reposted by lindsey

I think a lot about what Carl Sagan said in one of his final interviews.

May 4, 2025 at 6:21 AM

Reposted by lindsey

Possibly useful if you’re the victim of a cancelled grant! www.spencer.org/grant_types/...

Rapid Response Bridge Funding Program

In the face of recent abrupt shifts in federal funding for education research, including large-scale terminations of National Science Foundation (NSF) research grant awards, we have developed a rap...

www.spencer.org

May 3, 2025 at 3:57 PM

Reposted by lindsey

📢 The Internet Archive needs your help.

At a time when information is being rewritten or erased online, a $700 million lawsuit from major record labels threatens to destroy the Wayback Machine.

Tell the labels to drop the 78s lawsuit.

👉 Sign our open letter: www.change.org/p/defend-the...

🧵⬇️

April 17, 2025 at 4:51 PM

Reposted by lindsey

There's a new paper out that offers a 68-page critique of the way the popular Chatbot Arena LLM leaderboard can potentially be gamed by large AI labs with deep pockets. Here's my attempt at adding some extra context to the issues described in the paper.

simonwillison.net/2025/Apr/30/...

Understanding the recent criticism of the Chatbot Arena

The Chatbot Arena has become the go-to place for vibes-based evaluation of LLMs over the past two years. The project, originating at UC Berkeley, is home to a large community …

simonwillison.net

April 30, 2025 at 10:57 PM

Reposted by lindsey

Qwen 3 offers a case study in how to effectively release a model simonwillison.net/2025/Apr/29/...

Qwen 3 offers a case study in how to effectively release a model

Alibaba’s Qwen team released the hotly anticipated Qwen 3 model family today. The Qwen models are already some of the best open weight models—Apache 2.0 licensed and with a variety …

simonwillison.net

April 29, 2025 at 12:37 AM

Reposted by lindsey

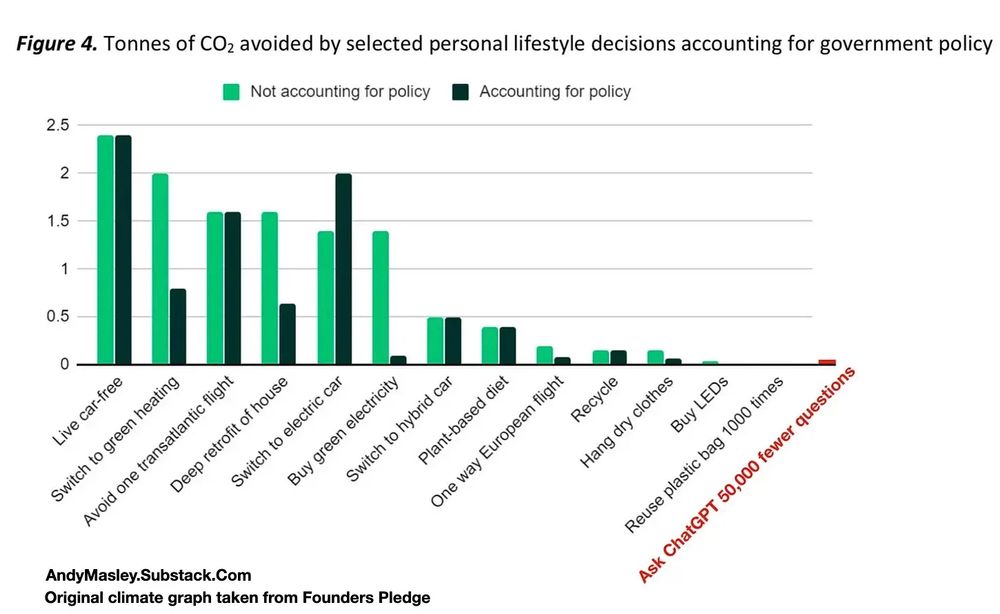

Issues around AI are important and will become only more important which is why this meme around "LLMs are destroying the planet" is worth combating. If you start your arguments with untrue things, people will ignore you.

andymasley.substack.com/p/a-cheat-sh...

April 29, 2025 at 4:23 PM

Reposted by lindsey

This is one of the worst violations of research ethics I've ever seen. Manipulating people in online communities using deception, without consent, is not "low risk" and, as evidenced by the discourse in this Reddit post, resulted in harm.

Great thread from Sarah, and I have additional thoughts. 🧵

The mods of r/ChangeMyView shared the sub was the subject of a study to test the persuasiveness of LLMs & that they didn't consent. There’s a lot that went wrong, so here’s a 🧵 unpacking it, along with some ideas for how to do research with online communities ethically. tinyurl.com/59tpt988

From the changemyview community on Reddit

Explore this post and more from the changemyview community

tinyurl.com

April 26, 2025 at 10:25 PM

Reposted by lindsey

Some of the anti-AI stuff feels a bit like when people would say "don't use Wikipedia as a source." It's just like anything else, a piece of information that you weigh against multiple sources and your own understanding of its likely failure modes

April 26, 2025 at 1:23 PM

Reposted by lindsey

We've updated our data on government spending 📊

You can explore how much countries spend relative to the size of their economies, how this has changed over time, and how spending is split across governments' priorities like health, education, and more:

➡️ ourworldindata.org/government-s...

Government Spending

What do governments spend their financial resources on?

ourworldindata.org

April 24, 2025 at 5:06 PM

Reposted by lindsey

Had a really fun time being able to talk to/vent with two people I love, but professionally, and recorded!

Hopefully useful for anyone trying to understand what "AI agents" are, what they could be, and how they're being hyped. And for anyone who could use a bit of levity in all the AAAAAAH!

Mystery AI Hype Theater 3000 Episode 54: “AI” Agents, A Single Point of Failure in which @mmitchell.bsky.social comes back to the pod to sift through agentic nonsense with me and @alexhanna.bsky.social

www.buzzsprout.com/2126417/epis...

Thx to @whatulysses.bsky.social for production!

April 18, 2025 at 4:00 PM

Reposted by lindsey

As a scientist, I have so many notes on how to expand this study to be much more rigorous. Yet, this approach is well within the norms of what people are actually doing when measuring AI bias in industry.

So: What the heck is Meta measuring? What are they seeing? 9/

April 22, 2025 at 1:28 AM

Reposted by lindsey

bell hooks ngl ate when she wrote, “Sometimes people try to destroy you, precisely because they recognize your power — not because they don’t see it, but because they see it and they don’t want it to exist.”

April 19, 2025 at 12:42 AM

Reposted by lindsey

Always love a good, truly alien-looking robot. This is for inspection, and can unfold to manipulate its environment and open doors.

April 19, 2025 at 11:20 AM

Reposted by lindsey

Check out this new series of online workshops about the Cyberpony Express!

March 22, 2025 at 4:26 PM

Reposted by lindsey

The first batch of survey data from @esa.int's Euclid mission dropped earlier today.

This is a portion of the Deep Field Fornax, a focused look at the region of space in the Fornax constellation.

Based on the press release, I estimate that there are more than TWO MILLION galaxies in this image.

March 19, 2025 at 11:14 PM

Reposted by lindsey

Hugging Face submits open-source blueprint, challenging Big Tech in White House AI policy fight https://venturebeat.com/ai/hugging-face-submits-open-source-blueprint-challenging-big-tech-in-white-house-ai-policy-fight/ #AI #HuggingFace

March 19, 2025 at 11:51 PM
