- Efficient evaluation (Nov 5, 16:30, poster session 3)

- MT difficulty (Nov 7, 12:30, findings 3)

- COMET-poly (Nov 8, 11:00, WMT)

(DM to meet 🌿 )

I am not secretive about having applied to 4 similar fellowships during my PhD before and not succeeding. Still, refining my research statement (part of the application) helped me tremendously in finding out the...

inf.ethz.ch/news-and-eve...

MME focuses on resources, metrics & methodologies for evaluating multilingual systems! multilingual-multicultural-evaluation.github.io

📅 Workshop Mar 24–29, 2026

🗓️ Submit by Dec 19, 2025

- Standard testsets are too easy (Figure 1).

- We can make testsets that are not easy (Figure 2). 😎

The following tasks are now open for participants (deadline July 31st but participation has never been easier 🙂 ):

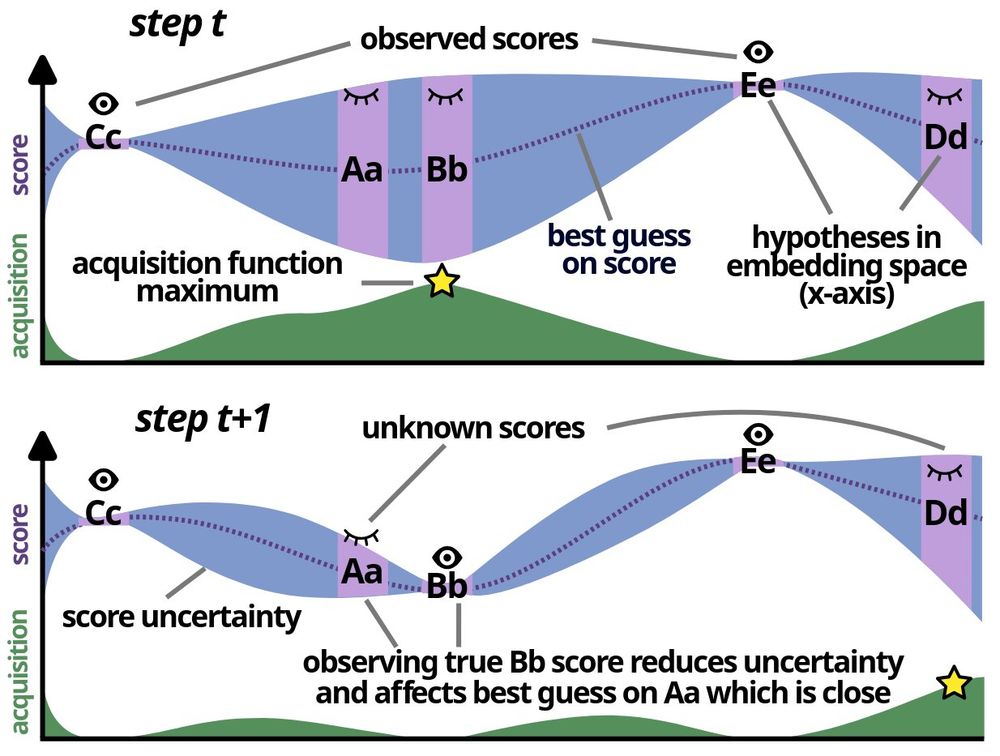

We can do better. "How to Select Datapoints for Efficient Human Evaluation of NLG Models?" shows how.🕵️

(random is still a devilishly good baseline)

This year:

👉5 language pairs: EN->{ES, RU, DE, ZH},

👉2 tracks - sentence-level and doc-level translation,

👉authentic data from 2 domains: finance and IT!

www2.statmt.org/wmt25/termin...

Don't miss the opportunity - we only do this once every two years 😏

If you're near Mountain View, let's talk evaluation. 📏

(still requires a manual bbl)

1️⃣ Unsup. WQE shows promise (esp. uncertainty-based ones), interp approaches under-explored for MT

2️⃣ Calibration sets can help to ensure fair evaluations.

3️⃣ Use multiple annotators for robust rankings.

More info ➡️ arxiv.org/abs/2505.23183 8/8

We compare uncertainty- and interp-based WQE metrics across 12 directions, with some surprising findings!

🧵 1/

(this is a joke)

Fret no more and come tomorrow at 11:00 to Hall 3 #NAACL2025.

See you tomorrow at 9:00 in Hall 3 #NAACL2025.

@crystinaz.bsky.social

@oxxoskeets.bsky.social

@dayeonki.bsky.social @onadegibert.bsky.social

And how can span annotation help us with evaluating texts?

Find out in our new paper: llm-span-annotators.github.io

Arxiv: arxiv.org/abs/2504.08697
