Evaluates LLMs for breakfast, preaches AI usefulness all day long at ellamind.com.

A few takeaways stood out - especially for those interested in local deployment and performance trade-offs:

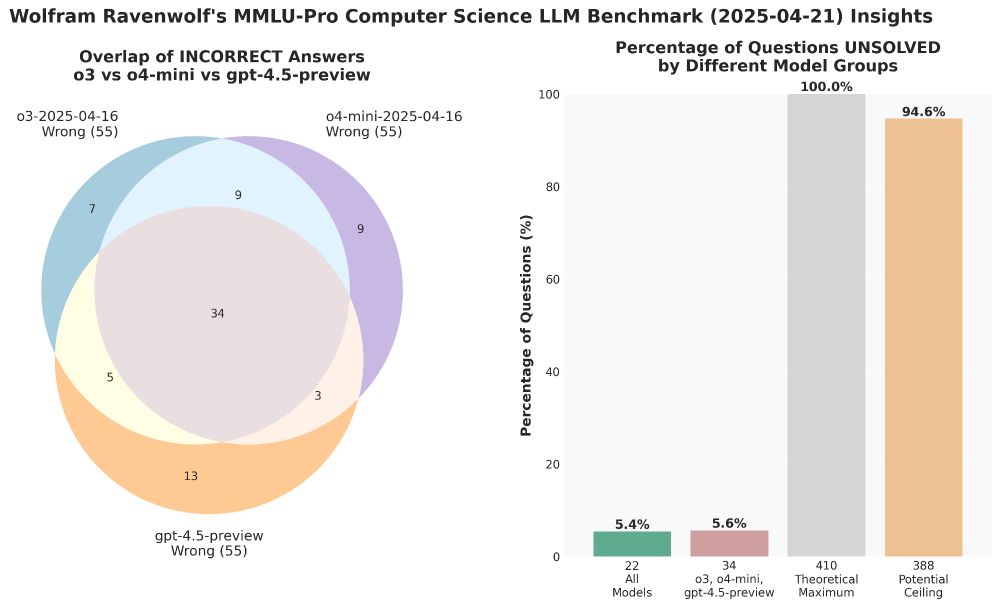

Definitely unexpected to see all three top OpenAI models get the exact same top score in this benchmark. But they didn't all fail the same questions, as the Venn diagram shows. 🤔

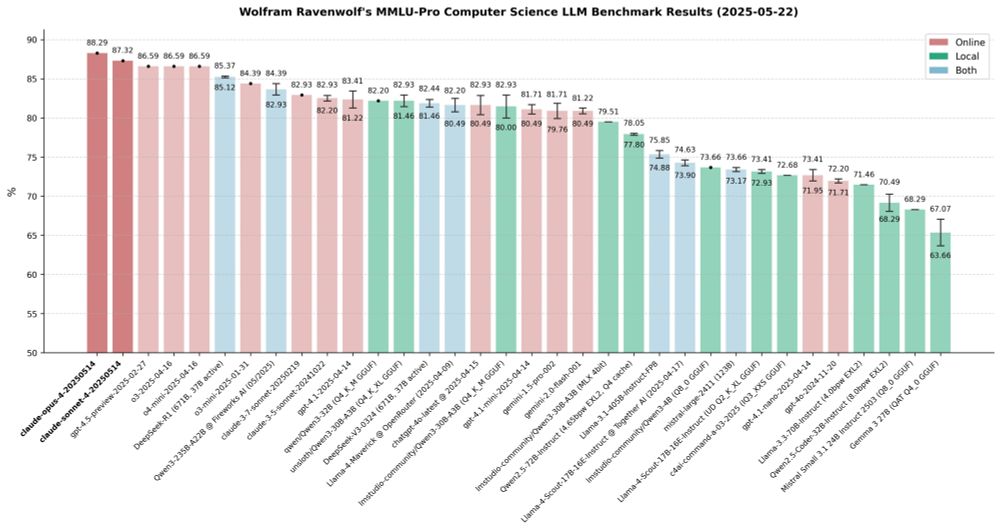

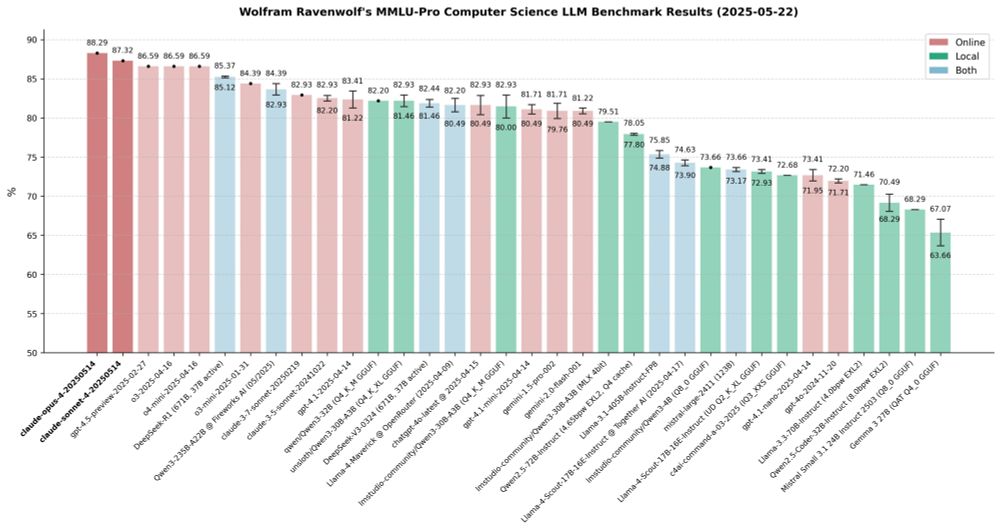

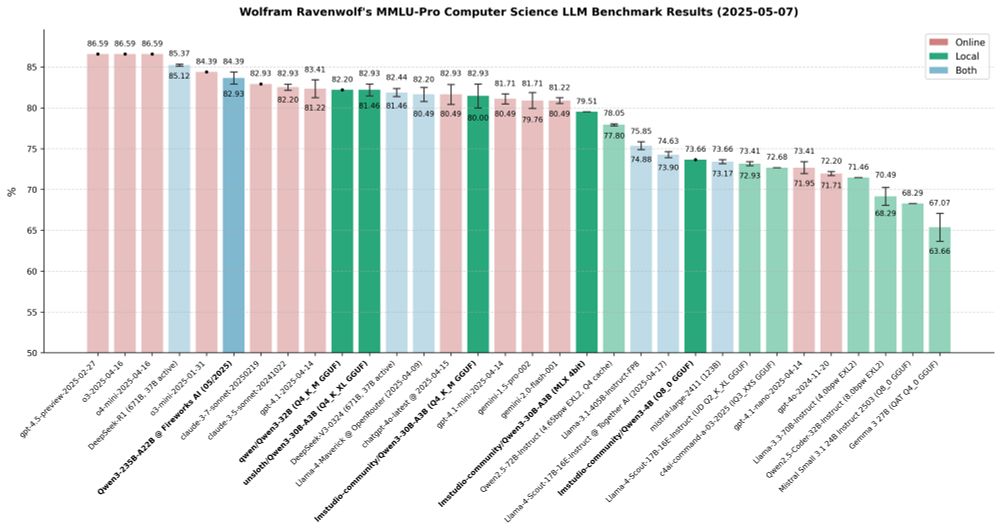

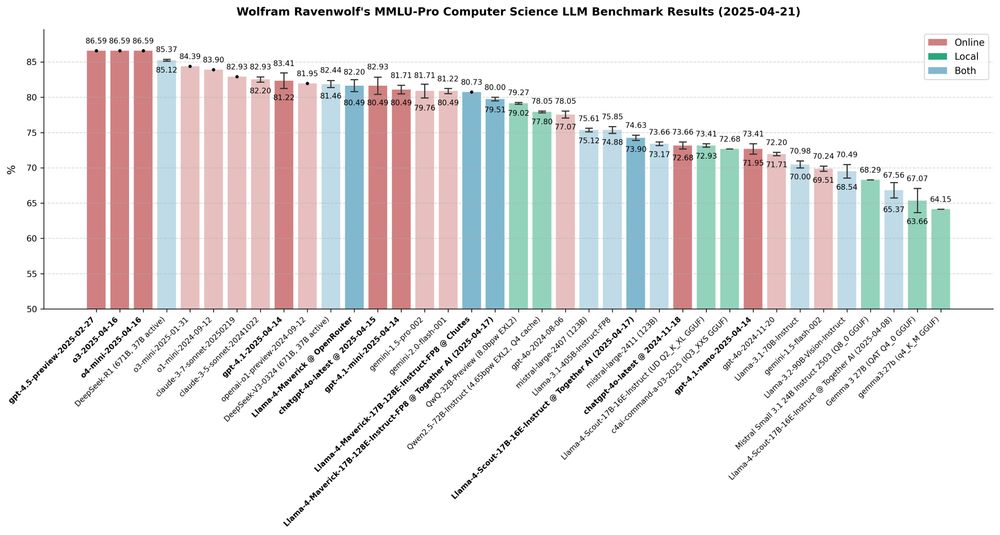

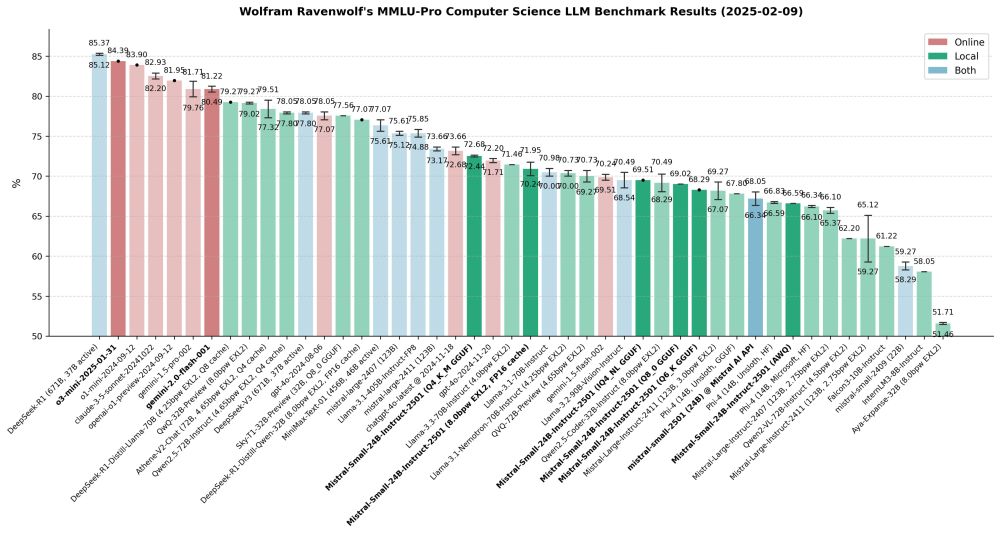

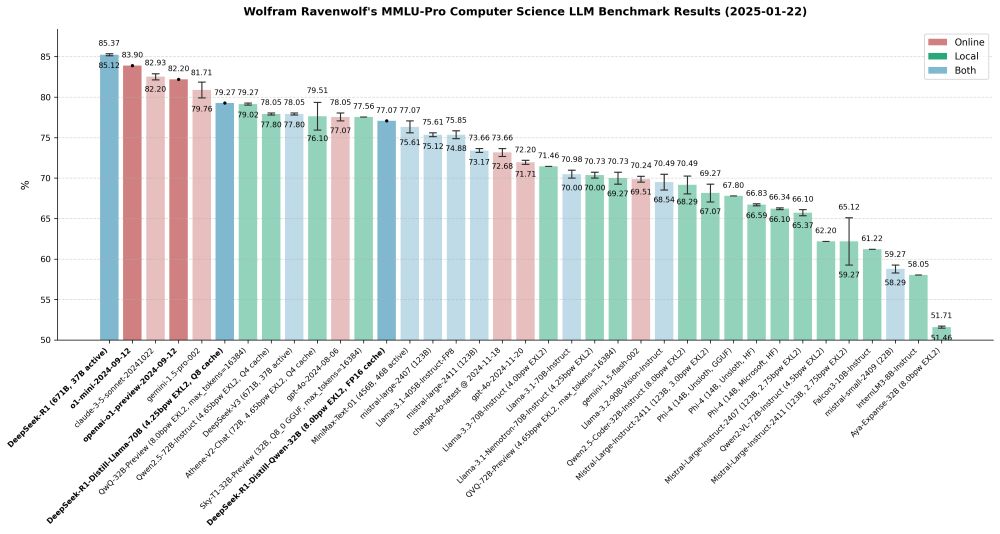

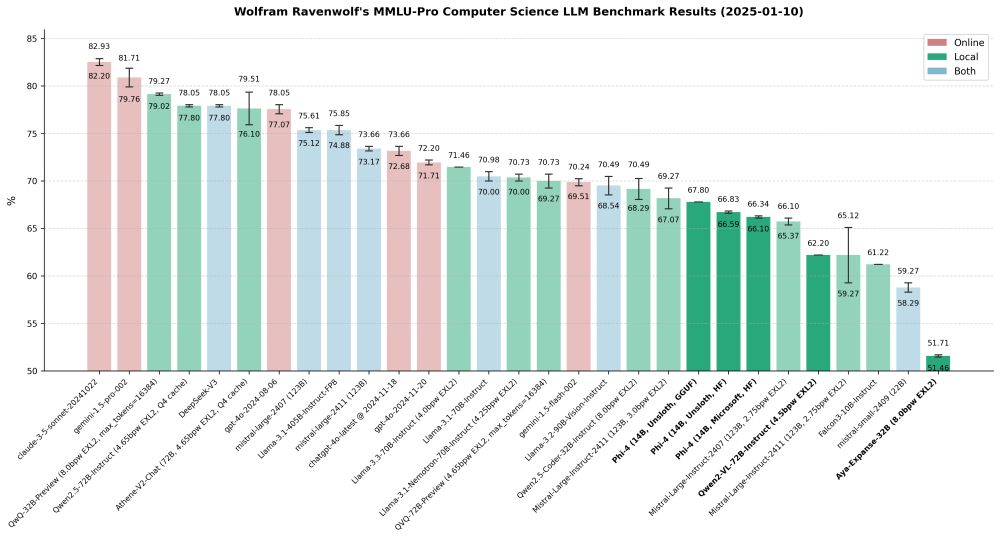

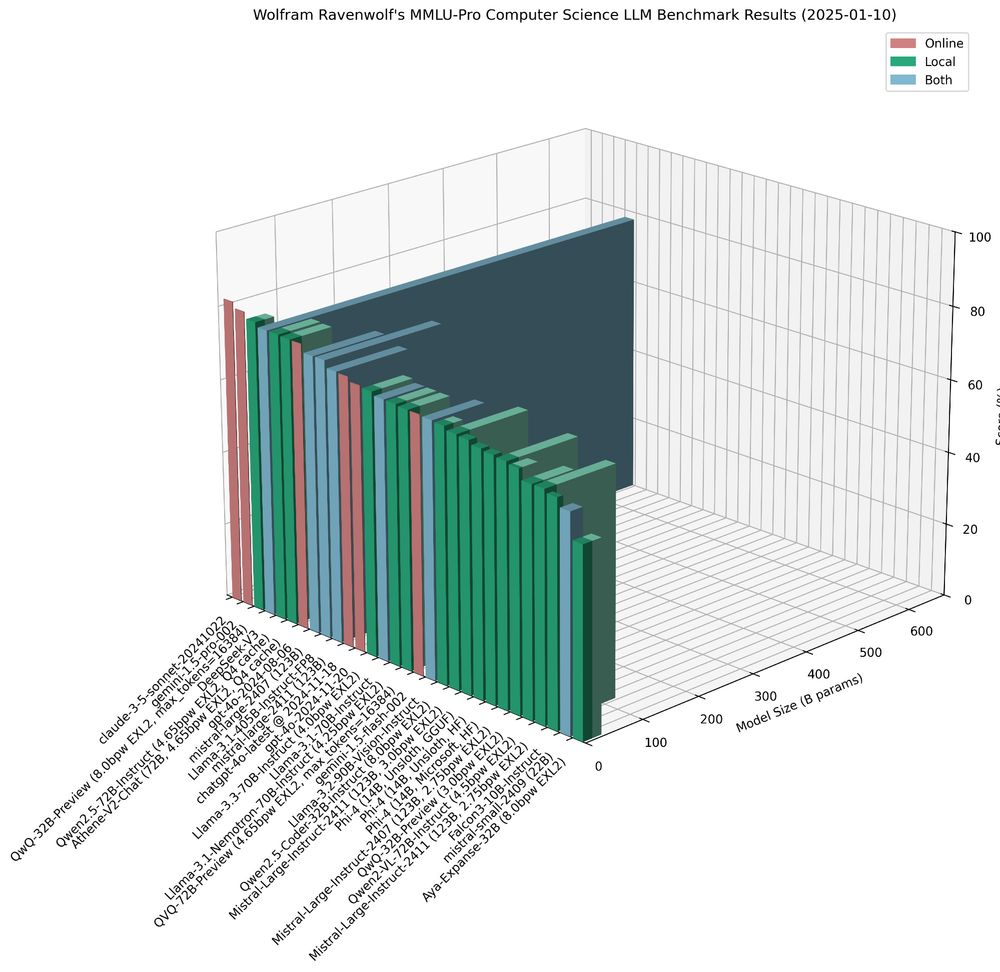

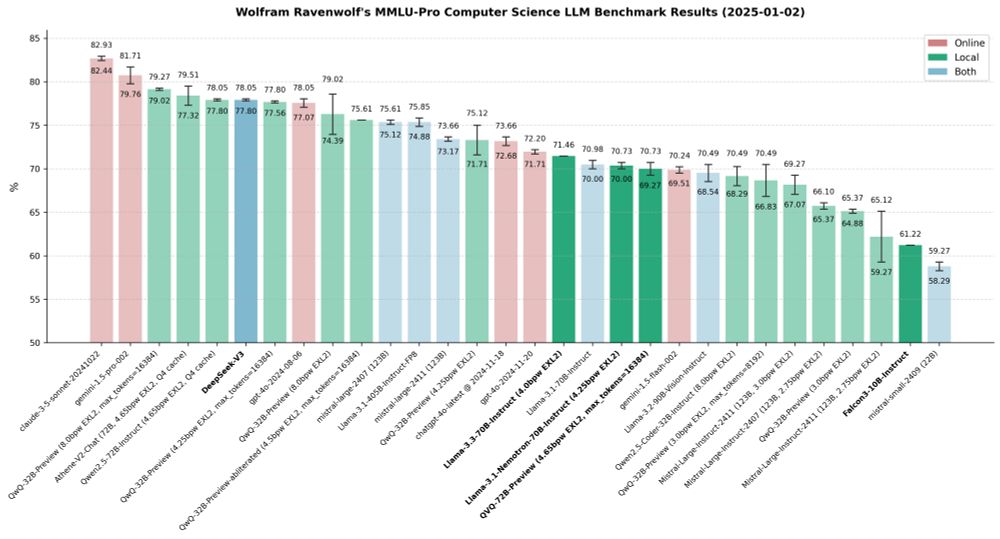

Here's how these three LLMs compare to an assortment of other strong models, online and local, open and closed, in the MMLU-Pro CS benchmark:

(Still wondering if he's man or machine - that dedication and discipline to do this week after week in a field that moves faster than any other, that requires superhuman drive! Utmost respect for that, no cap!)

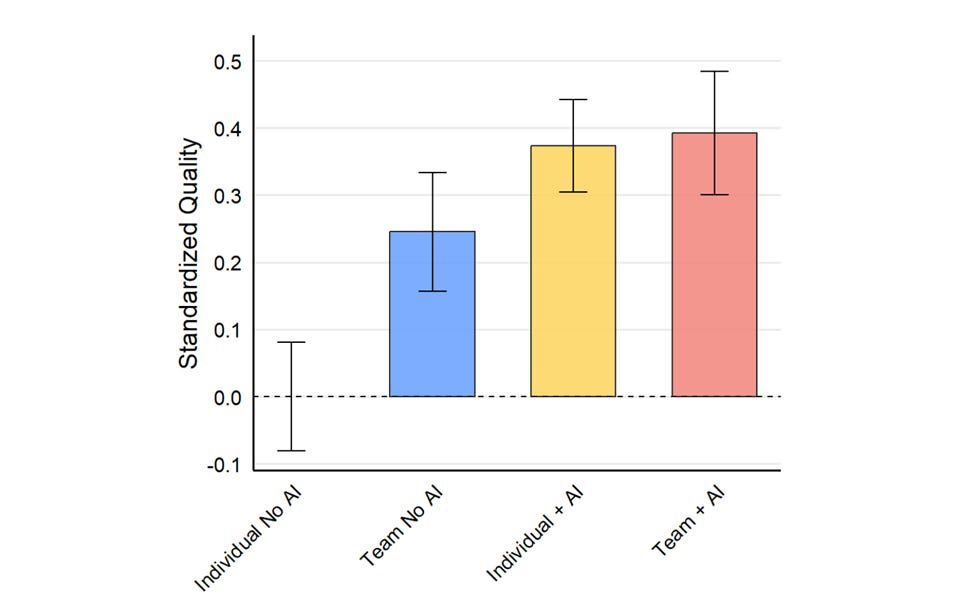

Since then we have seen Gen3 models, reasoners, large context windows, full multimodal, deep research, web search… www.oneusefulthing.org/p/the-cybern...

www.minimaxi.com/en/news/mini...

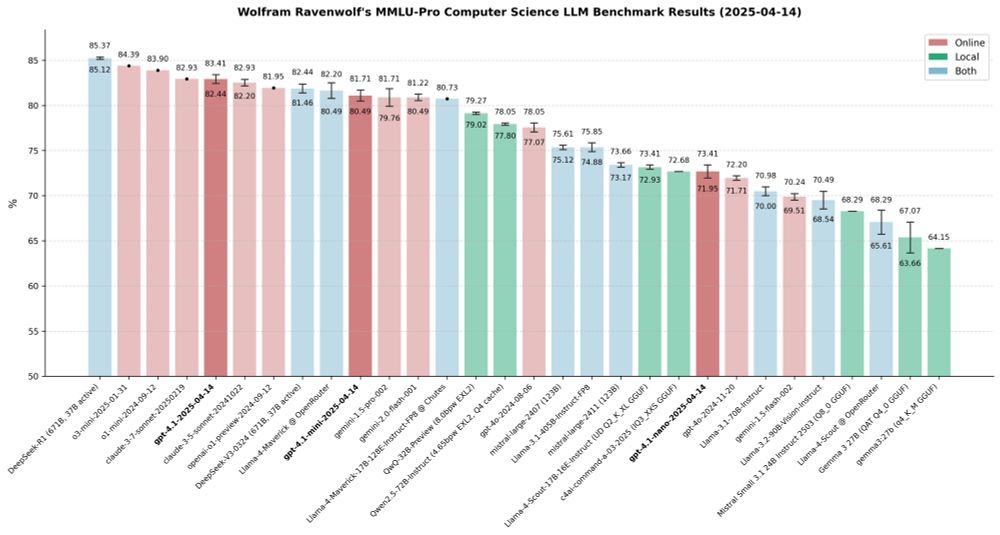

More details here:

huggingface.co/blog/wolfram...

Thank you all for being part of this incredible journey - friends, colleagues, clients, and of course family. 💖

May the new year bring you joy and success! Let's make 2025 a year to remember - filled with laughter, love, and of course, plenty of AI magic! ✨

Benchmarks are still running. Looking forward to finding out how it compares to QwQ, which was the best local model in my recent mass benchmark.

huggingface.co/wolfram/QVQ-...

arxiv.org/abs/2412.01113

Also fits my observations with QwQ: Smaller (quantized) versions required more tokens to find the same answers.

But would you hire a personal assistant with a PhD who's available to work remotely for you at a minimum wage of $1.25/h with a 40-hour work week?

And that guy does any amount of overtime for free, even on weekends!

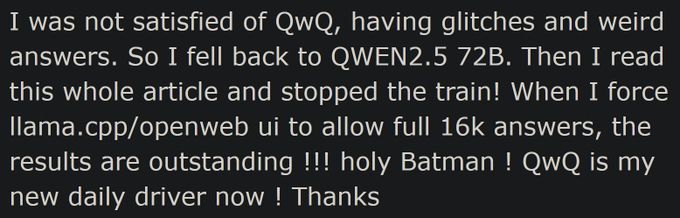

I've seen 2K max context and 128 max new tokens on too many models that should have much higher values. Especially QwQ needs room to think.
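To make the problem concrete: the number of tokens a model can actually generate is capped by both `max_new_tokens` and whatever room the prompt leaves in the context window. Here's a minimal Python sketch of that arithmetic, assuming a backend that simply stops at whichever limit it hits first (some backends error out or truncate the prompt instead). `generation_budget` is a hypothetical helper for illustration, not part of any library.

```python
def generation_budget(context_window: int, prompt_tokens: int, max_new_tokens: int) -> int:
    """Return how many tokens can be generated before a limit is hit.

    Hypothetical helper: assumes generation stops at whichever comes first,
    the max_new_tokens cap or the end of the context window.
    """
    # Room left in the context window after the prompt (never negative).
    remaining_context = max(context_window - prompt_tokens, 0)
    # The tighter of the two limits wins.
    return min(max_new_tokens, remaining_context)


# Restrictive settings: 2K context and 128 new tokens.
print(generation_budget(2048, 500, 128))  # 128

# Roomier settings, e.g. 32K context and 16K new tokens (illustrative values).
print(generation_budget(32768, 500, 16384))  # 16384
```

With a 128-token cap, a reasoning model that often spends hundreds or thousands of tokens thinking before it answers gets cut off mid-thought, no matter how large the context window is.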

Maybe when AIs take over, crap like giving a completely different product the name of an existing product with just a word added will be abandoned. I can't wait.

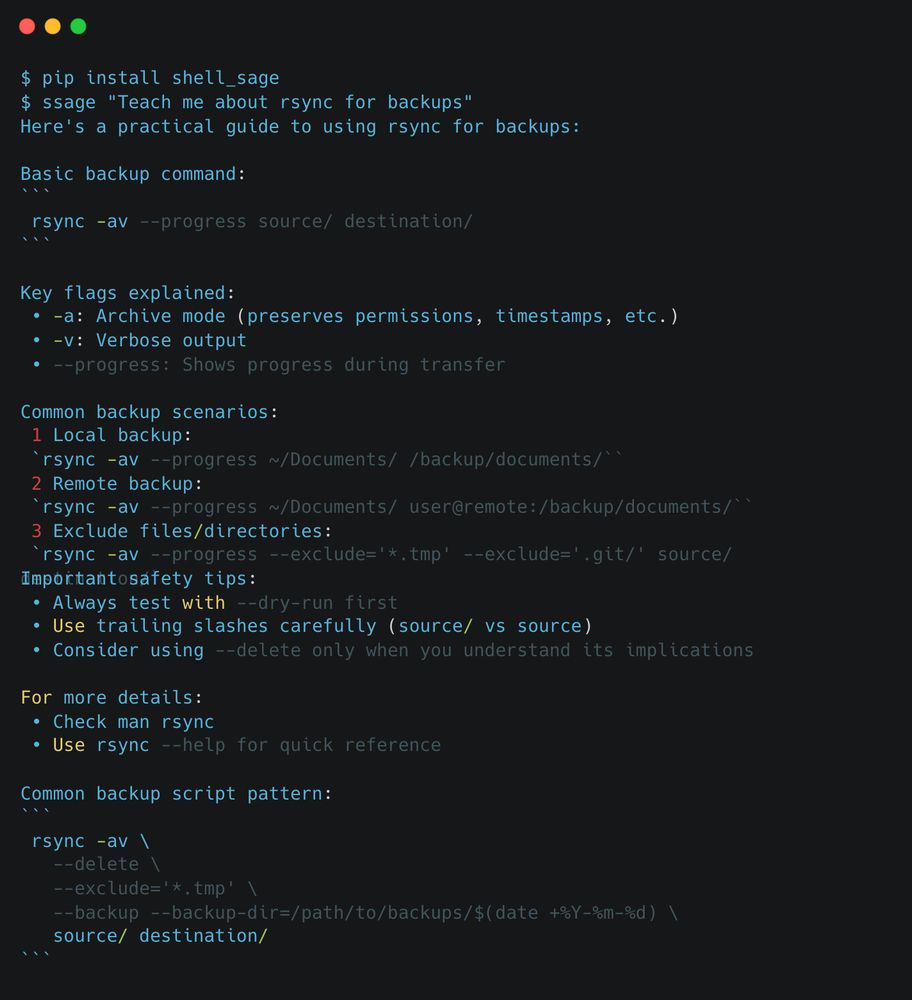

ShellSage is an LLM that lives in your terminal. It can see what directory you're in, what commands you've typed, what output you got, & your previous AI Q&As.🧵

huggingface.co/blog/wolfram...

Check out my findings - some of the results might surprise you just as much as they surprised me...

Contains *newly-collected* data, prioritizing *regional knowledge*.

Setting the stage for truly global AI evaluation.

Ready to see how your model measures up?

#AI #Multilingual #LLM #NLProc