- High-res photos of all 6 faces

- Annual ring annotations

- Photos of slanted cuts for validation

- CT scans revealing the true interior structure (for future use)

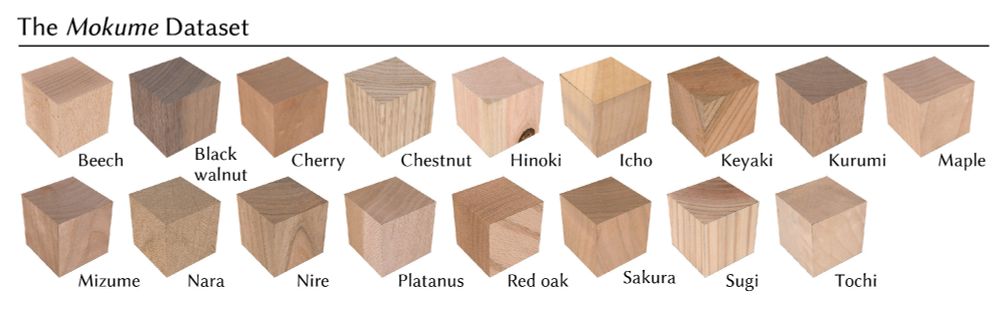

The patterns depend on tree species, growth conditions, and where and how the wood was cut from the tree.

At SIGGRAPH'25 (Thursday!), Maria Larsson will present *Mokume*: a dataset of 190 diverse wood samples and a pipeline that solves this inverse texturing challenge. 🧵👇

It generalizes deterministic surface evolution methods (e.g., NvDiffrec) and elegantly handles discontinuities. Future applications include physically based rendering and tomography.

Each point along a ray is treated as a surface candidate, independently optimized to match that ray's reference color.
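
To make the per-point idea concrete, here is a toy sketch in plain Python. It is not the authors' implementation: the function name `optimize_candidates`, the squared-error loss, and the step size are all assumptions chosen for illustration. The point it tries to show is only that each sample along a ray gets its own loss against the ray's reference color, with no term coupling one sample to another.

```python
def optimize_candidates(candidate_colors, reference_color, lr=0.1, steps=100):
    """Toy illustration (hypothetical, not the published method).

    candidate_colors: list of [r, g, b] guesses, one per sample point on a ray.
    reference_color:  [r, g, b] observed for that ray.
    Returns the optimized per-candidate colors.
    """
    # Copy the input so the caller's lists are left untouched.
    colors = [[float(v) for v in c] for c in candidate_colors]
    for _ in range(steps):
        for c in colors:
            for k in range(3):
                # Gradient of (c[k] - ref[k])^2 with respect to c[k].
                # It depends only on this candidate, never on its neighbors.
                grad = 2.0 * (c[k] - reference_color[k])
                c[k] -= lr * grad
    return colors


if __name__ == "__main__":
    ray_samples = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [0.2, 0.9, 0.4]]
    target = [0.6, 0.3, 0.1]
    for color in optimize_candidates(ray_samples, target):
        print([round(v, 4) for v in color])
```

In a volume-style reconstruction the samples along a ray would instead be blended into one color before the loss is computed, so their gradients would be coupled; the sketch deliberately leaves that blending out.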

This patch shows the necessary changes to Instant NGP, which was originally designed for volume reconstruction.
