vishaal27.github.io

🖇️ 128 Papers

💬 8 Orals

🖋️ 564 Authors

✅ 40 Reviewers

🔊 7 Invited Speakers

👕 100 T-Shirts

🔥 Organizers: Paul Vicol, Mengye Ren, Renjie Liao, Naila Murray, Wei-Chiu Ma, Beidi Chen

#NeurIPS2024 #AdaptiveFoundationModels

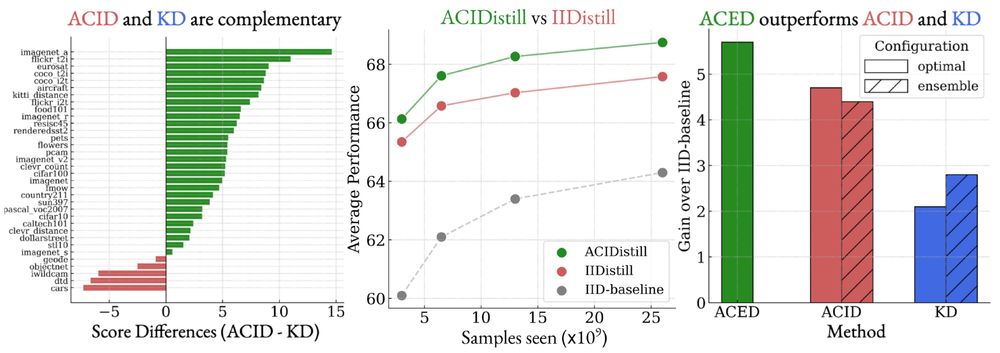

Super excited about this direction of strong pretraining for smol models!

We construct a stable & reliable set of evaluations (StableEval), inspired by inverse-variance weighting, to prune out unreliable evals!
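
The post does not spell out StableEval's exact selection rule. Below is a minimal sketch of the underlying idea, assuming each eval is run several times (e.g. across seeds), that an eval counts as unreliable when its score variance exceeds a cutoff, and that the surviving evals are combined with inverse-variance weights w_i = 1/σ_i². The function names, the `max_variance` cutoff and the eval names are hypothetical, not the authors' code.

```python
import statistics

def inverse_variance_weights(variances, eps=1e-12):
    """Normalized inverse-variance weights w_i = (1/var_i) / sum_j (1/var_j).

    eps guards against division by zero for evals with zero observed variance.
    """
    raw = [1.0 / max(v, eps) for v in variances]
    total = sum(raw)
    return [w / total for w in raw]

def weighted_mean(values, variances):
    """Inverse-variance-weighted average: noisier evals contribute less."""
    weights = inverse_variance_weights(variances)
    return sum(w * x for w, x in zip(weights, values))

def prune_unstable_evals(scores_per_eval, max_variance):
    """Keep only evals whose sample variance across repeated runs is <= max_variance.

    scores_per_eval: dict mapping a (hypothetical) eval name to a list of
    scores from repeated runs; each list needs at least two scores.
    max_variance: hypothetical cutoff, not taken from the source.
    """
    return {
        name: scores
        for name, scores in scores_per_eval.items()
        if statistics.variance(scores) <= max_variance
    }

if __name__ == "__main__":
    runs = {
        "eval_a": [0.60, 0.61, 0.59],  # low variance across runs -> kept
        "eval_b": [0.30, 0.50, 0.40],  # high variance across runs -> pruned
        "eval_c": [0.70, 0.72, 0.71],  # low variance across runs -> kept
    }
    stable = prune_unstable_evals(runs, max_variance=0.001)
    print(sorted(stable))  # prints ['eval_a', 'eval_c']
    means = [statistics.mean(s) for s in stable.values()]
    variances = [statistics.variance(s) for s in stable.values()]
    print(weighted_mean(means, variances))
```

Dropping high-variance evals outright, rather than only down-weighting them, keeps the aggregate from being dominated by benchmarks whose rankings flip between runs; the weighting step then handles the remaining differences in noise.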

Outperforming strong baselines including Apple's MobileCLIP, TinyCLIP and @datologyai.com CLIP models!

Further, ACID significantly outperforms knowledge distillation (KD) as we scale up the reference/teacher sizes.