vishaal27.github.io

arxiv.org/abs/2411.18674

Smol models are all the rage these days & knowledge distillation (KD) is key for model compression!

We show how data curation can effectively distill to yield SoTA FLOP-efficient {C/Sig}LIPs!!

🧵👇

Since DeepSeek-R1 introduced reasoning-based RL, datasets like Open-R1 & OpenThoughts emerged for fine-tuning & GRPO. Our deep dive found major flaws: 25% of OpenThoughts had to be removed during data curation.

Here's why 👇🧵

It turns out, Bayesian reasoning has some surprising answers - no cognitive biases needed! Let's explore this fascinating paradox quickly ☺️

🚀 Speakers: @rsalakhu.bsky.social, @sedielem.bsky.social, Kate Saenko, Matthias Bethge / @vishaalurao.bsky.social, Minjoon Seo, Bing Liu, Tianqi Chen

🌐Posters: adaptive-foundation-models.org/papers

🎬 neurips.cc/virtual/2024...

🧵Recap!

🖇️ 128 Papers

💬 8 Orals

🖋️ 564 Authors

✅ 40 Reviewers

🔊 7 Invited Speakers

👕 100 T-Shirts

🔥 Organizers: Paul Vicol, Mengye Ren, Renjie Liao, Naila Murray, Wei-Chiu Ma, Beidi Chen

#NeurIPS2024 #AdaptiveFoundationModels

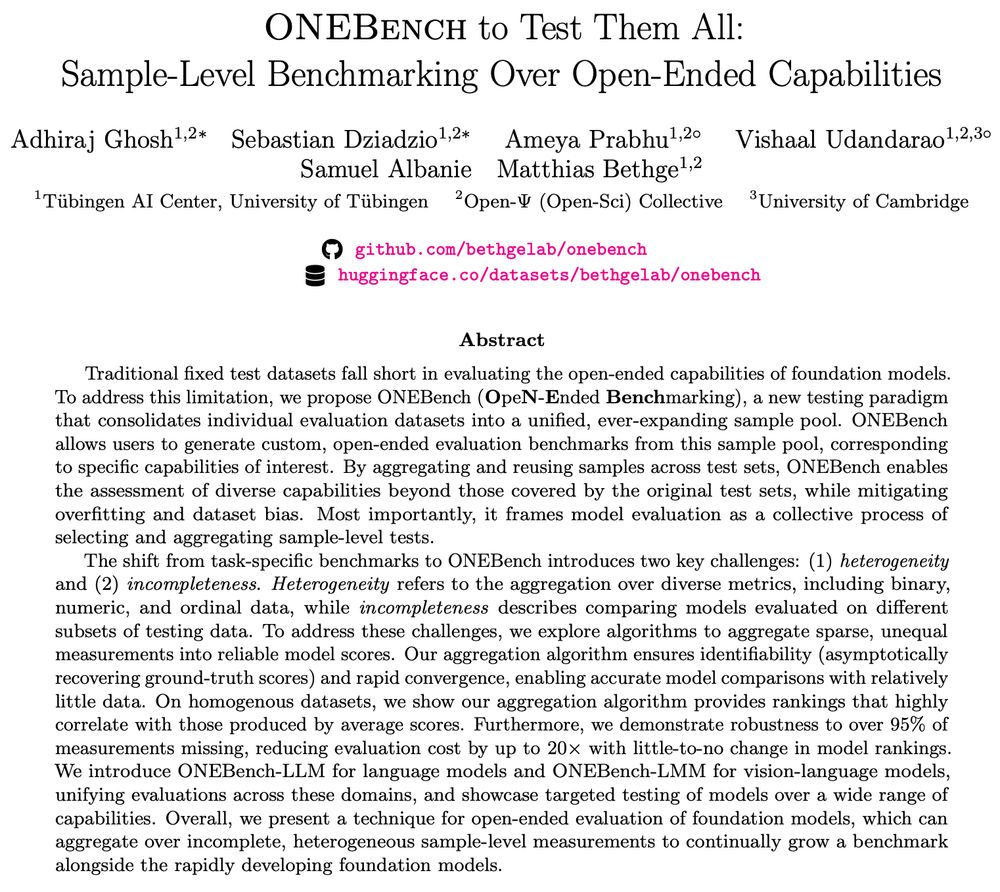

Check out ✨ONEBench✨, where we show how sample-level evaluation is the solution.

🔎 arxiv.org/abs/2412.06745

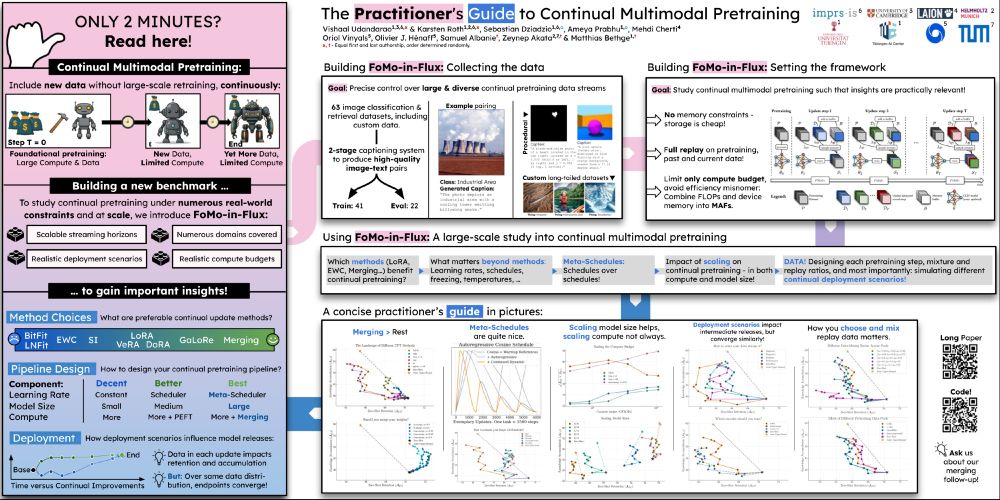

So I'm really happy to present our large-scale study at #NeurIPS2024!

Come drop by to talk about all that and more!

Intervening only on training data, our pipeline can train models faster (7.7x less compute), better (+8.5% performance), and smaller (models half the size outperform by >5%)!

www.datologyai.com/post/technic...

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining for my first Bluesky entry!

🧵 A short and hopefully informative thread:

@uwnlp.bsky.social & Ai2

With open models & 45M-paper datastores, it outperforms proprietary systems & matches human experts.

Try out our demo!

openscholar.allen.ai

go.bsky.app/NFbVzrA
