--> https://valeoai.github.io/ <--

by Loïck Chambon (loickch.github.io), @paulcouairon.bsky.social, @eloizablocki.bsky.social, @alexandreboulch.bsky.social, @nicolasthome.bsky.social, @matthieucord.bsky.social

Collab with @mlia-isir.bsky.social

The repo contains:

✅ Pretrained model

✅ Example notebooks

✅ Evaluation and training codes

Check it out & ⭐ the repo: github.com/valeoai/NAF

If you are using bilinear interpolation anywhere, NAF acts as a strict drop-in replacement.

Just swap it in. No retraining required. It’s literally free points for your metrics.📈
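
For reference, here is the baseline that NAF is meant to replace: plain bilinear upsampling of a low-resolution feature map. This is a minimal pure-Python sketch on a single channel, assuming the align-corners convention; real pipelines would call a framework op such as PyTorch's `F.interpolate`. The function name `bilinear_upsample` is illustrative only. Note that the blending weights depend only on pixel positions and never on image content.

```python
def bilinear_upsample(grid, out_h, out_w):
    """Upsample a 2D list `grid` (H x W) to (out_h x out_w), corners aligned."""
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        # Map the output row to a fractional input row (align-corners convention).
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        wy = y - y0
        row = []
        for j in range(out_w):
            # Same mapping for columns.
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            wx = x - x0
            # Blend the four nearest low-res values with position-only weights.
            top = (1 - wx) * grid[y0][x0] + wx * grid[y0][x1]
            bottom = (1 - wx) * grid[y1][x0] + wx * grid[y1][x1]
            row.append((1 - wy) * top + wy * bottom)
        out.append(row)
    return out
```

Every output value is a fixed blend of its four nearest low-res neighbors, so object boundaries get smeared. That is the gap an image-guided upsampler such as NAF, with its lightweight image encoder, targets.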

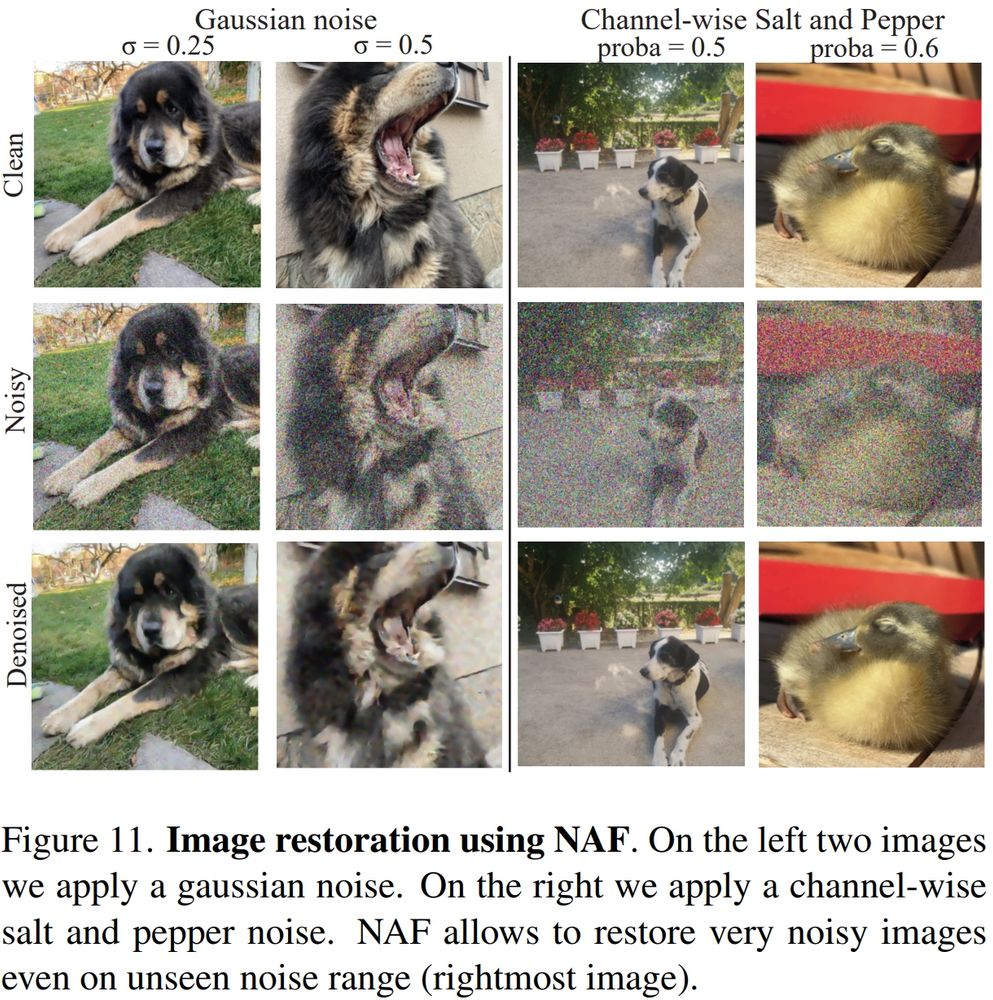

Not just zero-shot feature upsampling: it shines on image restoration too, delivering sharp, high-quality results across multiple applications. 🖼️

Under the hood, NAF learns an Inverse Discrete Fourier Transform, revealing a link between feature upsampling, classical filtering, and Fourier theory.

✨ Feature upsampling is no longer a black box
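
To make the Fourier connection concrete: an inverse DFT rebuilds each sample as a weighted sum of all frequency coefficients, with fixed complex weights exp(2πi·k·n/N)/N. The sketch below is the textbook 1D DFT/IDFT pair in pure Python, written with an explicit weight matrix so the "weighted sum" view is visible. It is not NAF's learned version; the thread only states that NAF learns an IDFT, and how its weights are parameterized is not shown here.

```python
import cmath


def dft(x):
    """Forward DFT: X[k] = sum_n x[n] * exp(-2πi·k·n/N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]


def idft_weights(N):
    """W[n][k] = exp(2πi·k·n/N) / N: fixed weights that ignore the input content."""
    return [[cmath.exp(2j * cmath.pi * k * n / N) / N for k in range(N)]
            for n in range(N)]


def idft(X):
    """Inverse DFT as a matrix-vector product: each sample reweights all coefficients."""
    W = idft_weights(len(X))
    return [sum(w * c for w, c in zip(row, X)) for row in W]
```

A round trip `idft(dft(signal))` recovers the signal up to floating-point error. Under this view, "learning an IDFT" amounts to learning those reweighting coefficients instead of fixing them.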

🧬 Lightweight image encoder (600k params)

🔁 Rotary Position Embeddings (RoPE)

🔍 Cross-Scale Neighborhood Attention

First fully learnable VFM-agnostic reweighting!✅
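
The thread names the components but not how they are wired. Below is a hypothetical sketch of one plausible reading of "cross-scale neighborhood attention": a single high-res query attends only to a small window of low-res keys around its projected location, and the output is a softmax-weighted average (a reweighting) of the low-res VFM features. Because it only mixes existing feature vectors, the operation never needs to know what the channels mean, which is one way such an upsampler could stay VFM-agnostic. The function name, shapes, window radius, and scaled dot-product scoring are all assumptions; RoPE and the image encoder that would produce queries and keys are omitted.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_scale_neighborhood_attention(query, keys, values, hr_pos, scale, radius=1):
    """Hypothetical sketch: one high-res query attends to a (2r+1)^2 low-res window.

    query:  list[float] of length d (high-res query vector)
    keys:   h x w grid of length-d key vectors (low-res)
    values: h x w grid of length-c value vectors (low-res VFM features)
    hr_pos: (row, col) of the query in the high-res grid
    scale:  integer upsampling factor between the two grids
    """
    h, w = len(keys), len(keys[0])
    cy, cx = hr_pos[0] // scale, hr_pos[1] // scale  # low-res cell under the query
    d = len(query)
    # Gather the neighborhood, clipping the window at the image borders.
    neighbors = [(y, x)
                 for y in range(cy - radius, cy + radius + 1)
                 for x in range(cx - radius, cx + radius + 1)
                 if 0 <= y < h and 0 <= x < w]
    # Scaled dot-product scores between the query and each neighboring key.
    scores = [sum(q * k for q, k in zip(query, keys[y][x])) / math.sqrt(d)
              for y, x in neighbors]
    weights = softmax(scores)
    # Output = convex combination of the low-res feature vectors.
    c = len(values[0][0])
    out = [0.0] * c
    for a, (y, x) in zip(weights, neighbors):
        for ch in range(c):
            out[ch] += a * values[y][x][ch]
    return out, weights
```

With identical keys the weights are uniform and the result reduces to a box filter over the window; learned, content-dependent keys are what let the weights follow edges instead.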

It beats both VFM-specific upsamplers (FeatUp, JAFAR) and VFM-agnostic methods (JBU, AnyUp) across downstream tasks:

- 🥇Semantic Segmentation

- 🥇Depth Estimation

- 🥇Open-Vocabulary

- 🥇Video Propagation, etc.

Even for massive models like DINOv3-7B!

by: @bjoernmichele.bsky.social @alexandreboulch.bsky.social @gillespuy.bsky.social @tuanhungvu.bsky.social, R. Marlet, @ncourty.bsky.social

📄 bsky.app/profile/bjoe...

Code: ✅

We'll present MuDDoS at BMVC: a method that boosts multimodal distillation for 3D semantic segmentation under domain shift.

📍 BMVC

🕚 Monday, Poster Session 1: Multimodal Learning (11:00–12:30)

📌 Hadfield Hall #859

by: S. de Moreau, Y. Almehio, @abursuc.bsky.social, H. El-Idrissi, B. Stanciulescu, @fabienmoutarde

tl;dr: a light enhancement method for better depth estimation in low-light conditions

📄 arxiv.org/abs/2409.08031

Code: ✅

by: E. Kirby, @mickaelchen.bsky.social, R. Marlet, N. Samet

tl;dr: a diffusion-based method that generates lidar point clouds of dataset objects, with extensive control over the generation

📄 arxiv.org/abs/2412.07385

Code: ✅

tl;dr: a new method for understanding and controlling how MLLMs adapt during fine-tuning

by: P. Khayatan, M. Shukor, J. Parekh, A. Dapogny, @matthieucord.bsky.social

📄: arxiv.org/abs/2501.03012

tl;dr: a simple trick to boost open-vocabulary semantic segmentation by identifying class expert prompt templates

by: Y. Benigmim, M. Fahes, @tuanhungvu.bsky.social, @abursuc.bsky.social, R. de Charette

📄: arxiv.org/abs/2504.10487

tl;dr: self-supervised learning of temporally consistent representations from video w/ motion cues

by: M. Salehi, S. Venkataramanan, I. Simion, E. Gavves, @cgmsnoek.bsky.social, Y. Asano

📄: arxiv.org/abs/2506.08694

tl;dr: a module for 3D occupancy learning that enforces 2D-3D consistency through differentiable Gaussian rendering

by: L. Chambon, @eloizablocki.bsky.social, @alexandreboulch.bsky.social, M. Chen, M. Cord

📄: arxiv.org/abs/2502.05040

by: @ssirko.bsky.social, @vobeckya.bsky.social, @abursuc.bsky.social, N. Thome, @spyrosgidaris.bsky.social

📄: arxiv.org/abs/2506.18463

bsky.app/profile/ssir...

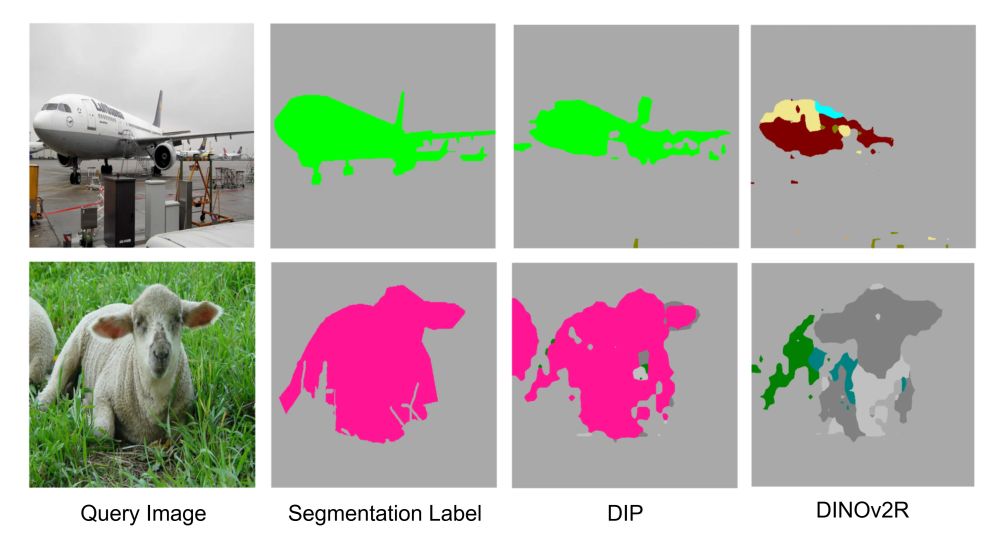

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!
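
Dense in-context scene understanding is commonly evaluated by retrieval: each patch feature of a query image takes the label of its most similar patches in a small labeled support set, so better dense features directly mean better masks. The sketch below shows that generic protocol (cosine similarity plus k-nearest-neighbor voting) in pure Python. It is an assumption about the kind of evaluation involved, not DIP's method or its exact benchmark, and `knn_label_transfer` is a hypothetical name.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity; assumes both feature vectors are nonzero."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def knn_label_transfer(query_feats, support_feats, support_labels, k=1):
    """Give each query patch the majority label of its k most similar support patches."""
    preds = []
    for q in query_feats:
        # Rank support patches by similarity to this query patch.
        ranked = sorted(range(len(support_feats)),
                        key=lambda i: cosine(q, support_feats[i]),
                        reverse=True)
        votes = Counter(support_labels[i] for i in ranked[:k])
        preds.append(votes.most_common(1)[0][0])
    return preds
```

No decoder is trained here, which is why such a protocol isolates the quality of the pretrained dense features.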
