Website: bjoernmichele.com

We'll present MuDDoS at BMVC: a method that boosts multimodal distillation for 3D semantic segmentation under domain shift.

📍 BMVC

🕚 Monday, Poster Session 1: Multimodal Learning (11:00–12:30)

📌 Hadfield Hall #859

📝 Paper: bmva-archive.org.uk/bmvc/2025/a...

💻 Code: github.com/valeoai/muddos

This is joint work with my great co-authors @alexandreboulch.bsky.social, @gillespuy.bsky.social, @tuanhungvu.bsky.social, Renaud Marlet, and @ncourty.bsky.social.

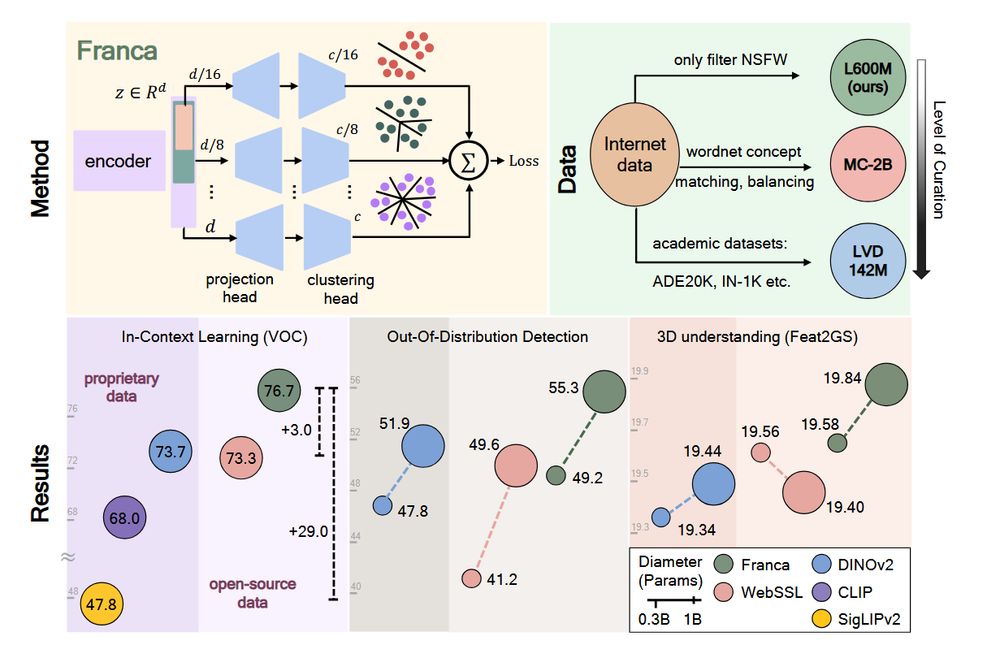

1️⃣ The LiDAR backbone architecture has a major impact on cross-domain generalization.

2️⃣ A single pretrained backbone can generalize to many domain shifts.

3️⃣ Freezing the pretrained backbone + training only a small MLP head gives the best results.

All the info is here: nkeriven.github.io/malaga/

We’ll present 5 papers about:

💡 self-supervised & representation learning

🌍 3D occupancy & multi-sensor perception

🧩 open-vocabulary segmentation

🧠 multimodal LLMs & explainability

valeoai.github.io/posts/iccv-2...

First conference as a PhD student, really excited to meet new people.

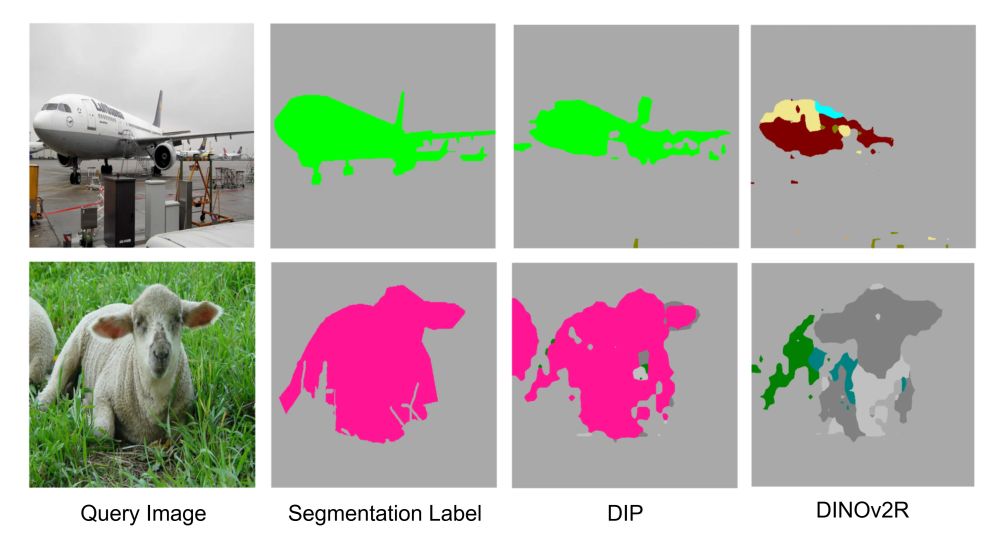

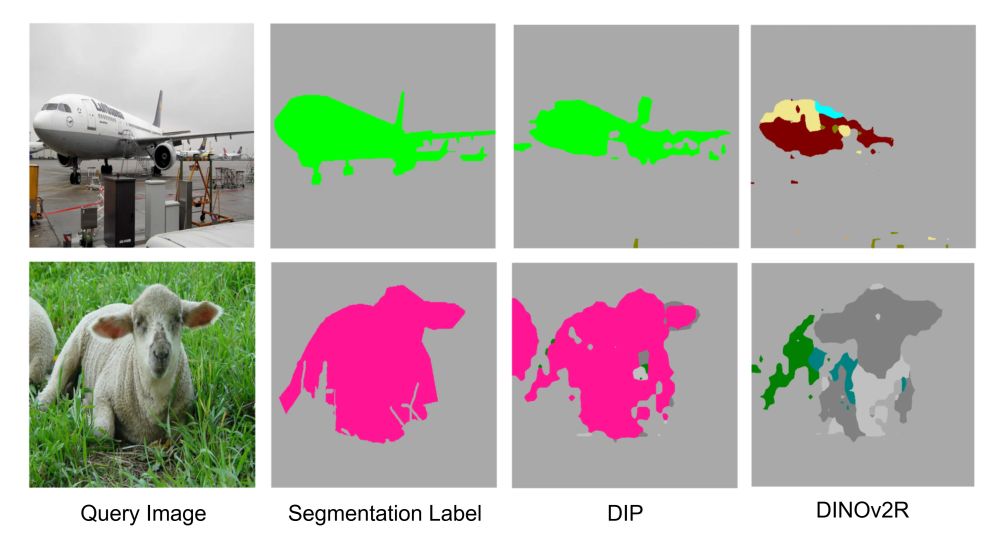

Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding

Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

programme below (or tinyurl.com/asbn5b5d) 🧵1/9

His thesis «Learning Actionable LiDAR Representations w/o Annotations» covers the papers BEVContrast (learning self-sup LiDAR features), SLidR, ScaLR (distillation), UNIT and Alpine (solving tasks w/o labels).

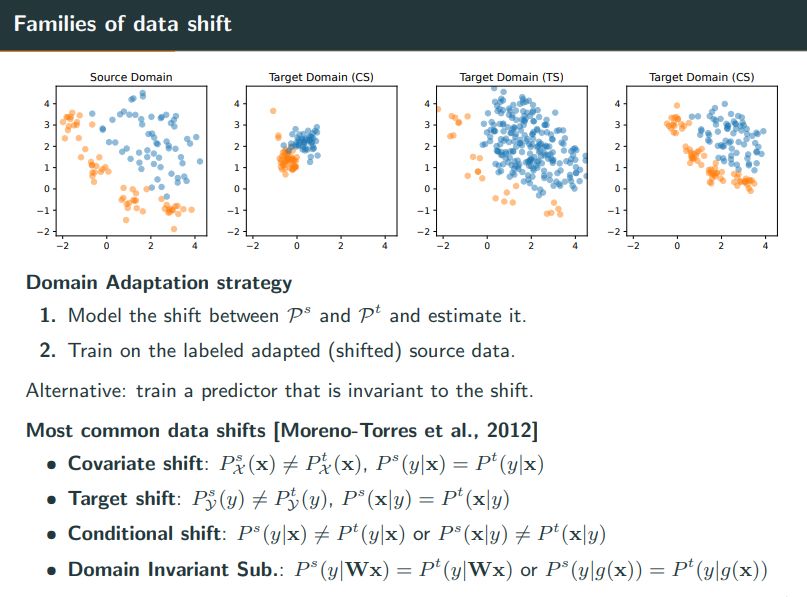

Today, @bjoernmichele.bsky.social is defending his PhD on "Domain Adaptation for 3D Data"

Best of luck! 🚀

Andrei Bursuc @abursuc.bsky.social

Anh-Quan Cao @anhquancao.bsky.social

Renaud Marlet

Eloi Zablocki @eloizablocki.bsky.social

@iccv.bsky.social

iccv.thecvf.com/Conferences/...

Papers popped up on different platforms, but mainly on ResearchGate with ~80 papers in just 3 weeks.

[1/]

colab.research.google.com/drive/16GJyb...

Comments welcome!
