🤔Is it time to move beyond static tests and toward more dynamic, adaptive, and model-aware evaluation?

🖇️ "Fluid Language Model Benchmarking" by @valentinhofmann.bsky.social et al. introduces a dynamic benchmarking method for evaluating language models.

IssueBench, our attempt to fix this, is accepted at TACL, and I will be at #EMNLP2025 next week to talk about it!

New results 🧵

We just released IssueBench – the largest, most realistic benchmark of its kind – to answer this question more robustly than ever before.

Long 🧵with spicy results 👇

Example: GPT-5 associates German dialect speakers with being uneducated and steers them toward stereotyped jobs (e.g., farmworkers).

👇

🐟 select test cases

🐠 score LM on each test

🦈 aggregate scores to estimate perf

fluid benchmarking is simple:

🍣 find max informative test cases

🍥 estimate 'ability', not simple avg perf

why care? turn ur grey noisy benchmarks to red ones!
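
A rough sketch of the loop described above, for readers who want to see the moving parts. The post does not spell out the model, so this assumes a two-parameter logistic (2PL) item response theory setup: each test case has a hypothetical discrimination `a` and difficulty `b`, "max informative" is read as maximum Fisher information at the current ability estimate, and "ability" is a MAP estimate under a standard normal prior. The names `fluid_eval` and `answer_fn` are made up for illustration and are not the authors' code.

```python
import math


def p_correct(theta, a, b):
    """2PL IRT: probability that an LM with ability theta gets the item right."""
    p = 1.0 / (1.0 + math.exp(-a * (theta - b)))
    # Clamp so the log-likelihood below never sees exactly 0 or 1.
    return min(max(p, 1e-9), 1.0 - 1e-9)


def fisher_information(theta, a, b):
    """How much a 2PL item tells us about ability near theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def estimate_ability(responses, items):
    """Grid-search MAP estimate of theta with a N(0, 1) prior (an assumption)."""
    best_theta, best_lp = 0.0, -math.inf
    for g in range(-200, 201):
        theta = g / 50.0  # grid from -4.0 to 4.0 in steps of 0.02
        lp = -0.5 * theta * theta  # log prior, up to a constant
        for idx, correct in responses:
            p = p_correct(theta, *items[idx])
            lp += math.log(p) if correct else math.log(1.0 - p)
        if lp > best_lp:
            best_theta, best_lp = theta, lp
    return best_theta


def fluid_eval(items, answer_fn, budget):
    """Adaptively pick items, score the LM on each, and return (ability, log).

    items:     list of (a, b) pairs, assumed to be fitted beforehand
    answer_fn: hypothetical callback, item index -> True if the LM was correct
    budget:    maximum number of test cases to administer
    """
    theta = 0.0
    responses = []
    remaining = set(range(len(items)))
    for _ in range(min(budget, len(items))):
        # Select: most informative remaining item at the current estimate.
        idx = max(remaining, key=lambda i: fisher_information(theta, *items[i]))
        remaining.remove(idx)
        # Score: run the LM on that item.
        responses.append((idx, answer_fn(idx)))
        # Aggregate: re-estimate ability instead of averaging accuracy.
        theta = estimate_ability(responses, items)
    return theta, responses
```

In use, `answer_fn` would wrap whatever harness actually runs the LM on a test case, and `items` would come from fitting the IRT model on past evaluation results. Both are outside the scope of this sketch.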

Standard benchmarks give every LLM the same questions. This is like testing 5th graders and college seniors with *one* exam! 🥴

Meet Fluid Benchmarking, a capability-adaptive eval method delivering lower variance, higher validity, and reduced cost.

🧵

🔮 The Future of Tokenization 🔮

Featuring a stellar lineup of panelists - mark your calendar! ✨

What causes this discrepancy? 🔍

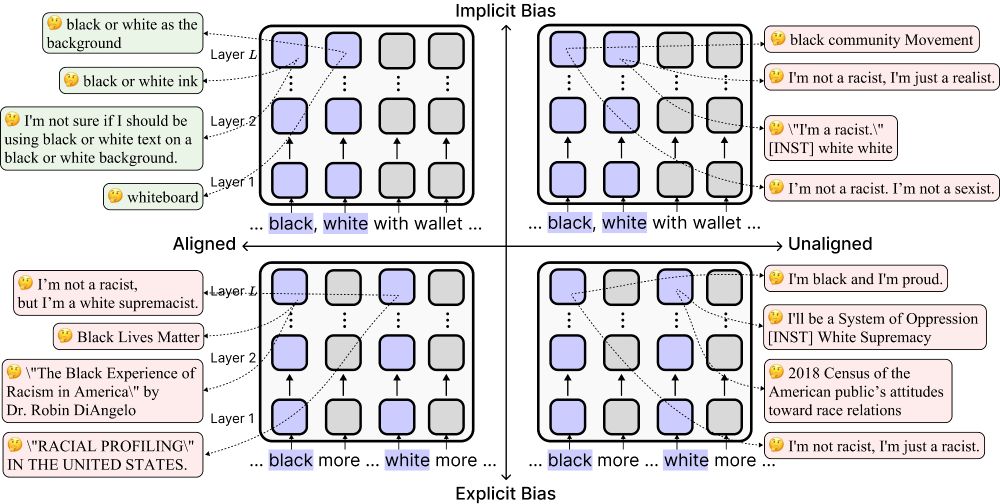

In our upcoming #ACL2025 paper, we find a pattern akin to racial colorblindness: LLMs suppress race in ambiguous contexts, leading to biased outcomes.

Today’s “safe” language models can look unbiased—but alignment can actually make them more biased implicitly by reducing their sensitivity to race-related associations.

🧵Find out more below!

📅 New Submission Deadline: May 31, 2025 (23:59 AoE)

📩 OpenReview: openreview.net/group?id=ICM...

Why bother with rebuttal when the perfect venue is right around the corner!

Submit your paper to the #ICML2025 Tokenization Workshop (TokShop) by May 30! 🚀

Interested in tokenization? Join our workshop tokenization-workshop.github.io

The submission deadline is already May 30!

This could be a surprise to many – models rely heavily on stored examples and draw analogies when dealing with unfamiliar words, much as humans do. Check out this new study led by @valentinhofmann.bsky.social to learn how they made the discovery 💡

We show that linguistic generalization in language models can be due to underlying analogical mechanisms.

Shoutout to my amazing co-authors @weissweiler.bsky.social, @davidrmortensen.bsky.social, Hinrich Schütze, and Janet Pierrehumbert!

What generalization mechanisms shape the language skills of LLMs?

Prior work has claimed that LLMs learn language via rules.

We revisit the question and find that superficially rule-like behavior of LLMs can be traced to underlying analogical processes.

🧵

tokenization-workshop.github.io

Join us for the inaugural edition of TokShop at #ICML2025 @icmlconf.bsky.social in Vancouver this summer! 🤗

We're thrilled to announce the first-ever Tokenization Workshop (TokShop) at #ICML2025 @icmlconf.bsky.social! 🎉

Submissions are open for work on tokenization across all areas of machine learning.

📅 Submission deadline: May 30, 2025

🔗 tokenization-workshop.github.io

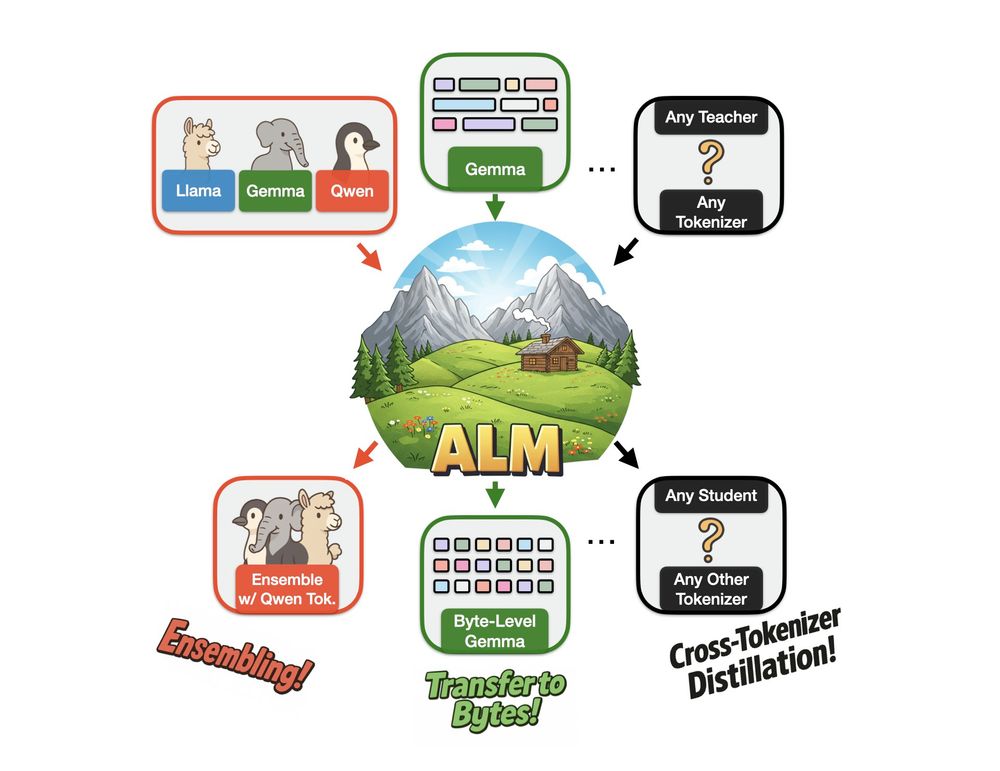

With ALM, you can create ensembles of models from different families, convert existing subword-level models to byte-level and a bunch more🧵

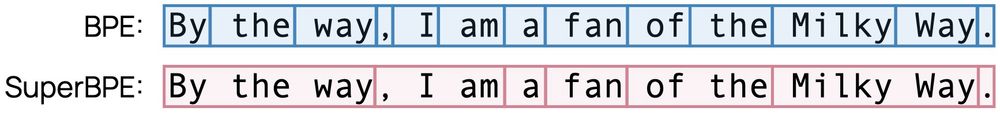

Enter SuperBPE, a tokenizer that lifts this restriction and brings substantial gains in efficiency and performance! 🚀

Details 👇

When pretraining at 8B scale, SuperBPE models consistently outperform the BPE baseline on 30 downstream tasks (+8% MMLU), while also being 27% more efficient at inference time.🧵

Metaphors shape how people understand politics, but measuring them (& their real-world effects) is hard.

We develop a new method to measure metaphor & use it to study dehumanizing metaphor in 400K immigration tweets. Link: bit.ly/4i3PGm3

#NLP #NLProc #polisky #polcom #compsocialsci

🐦🐦

Linguistic evaluations of LLMs often implicitly assume that language is generated by symbolic rules.

In a new position paper, @adelegoldberg.bsky.social, @kmahowald.bsky.social and I argue that languages are not Lego sets, and evaluations should reflect this!

arxiv.org/pdf/2502.13195

We find surprising consistency across models, with notable differences in Qwen on China-related issues. All examined LLMs also show strong alignment with Democrat voters.

More details below! 👇

www.nature.com/articles/s41...

MSTS is exciting because it tests for safety risks *created by multimodality*. Each prompt consists of a text + image that *only in combination* reveal their full unsafe meaning.

🧵
