This fun analogy came up during my conversation with Harry, Jason, & Rory.

www.youtube.com/watch?v=sWkp...

tomtunguz.com/the-bacon-an...

At that point, the fat begins to congeal.

But this era of fluidity won’t last forever. The rate of improvement in AI models will eventually attenuate. When the performance gap between the best model & the second-best model shrinks, the incentive to switch evaporates.

Who can take advantage of the next big leap in model performance fastest? Which sales team can reach the target customers first & write the RFP?

If the progress is material, then the benefit of switching is worth the activation energy.

Together, these two data points dismantle the scaling wall thesis.

tomtunguz.com/gemini-3-pro...

As Gavin Baker points out, Nvidia confirmed Blackwell Ultra delivers 5x faster training times than Hopper.

Second, Nvidia’s earnings call reinforced the demand.

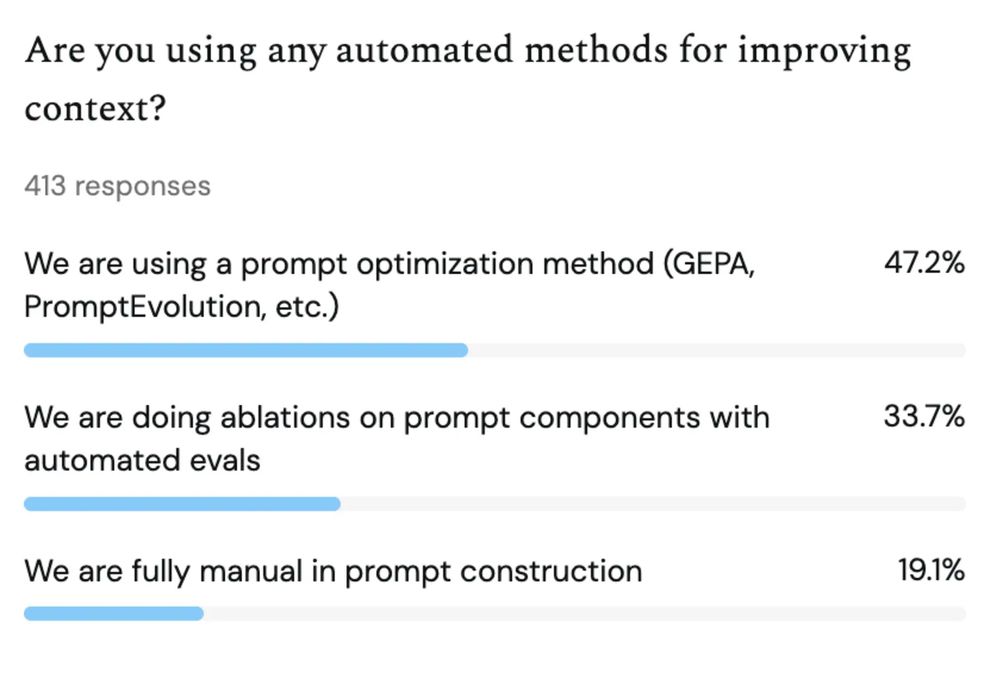

Explore the full interactive dataset here: survey.theoryvc.com or read Lauren’s complete analysis: theoryvc.com/blog-posts/a....

tomtunguz.com/ai-builders-...

Teams need systems that verify correctness before they can scale production. The tools exist. The problem is harder than better retrieval can solve.
