You can also find me on Threads: @sung.kim.mw

The SSA framework for long-context inference achieves state-of-the-art results by explicitly encouraging sparser attention distributions, outperforming existing methods in perplexity across very large context windows.

Paper: arxiv.org/abs/2511.20102

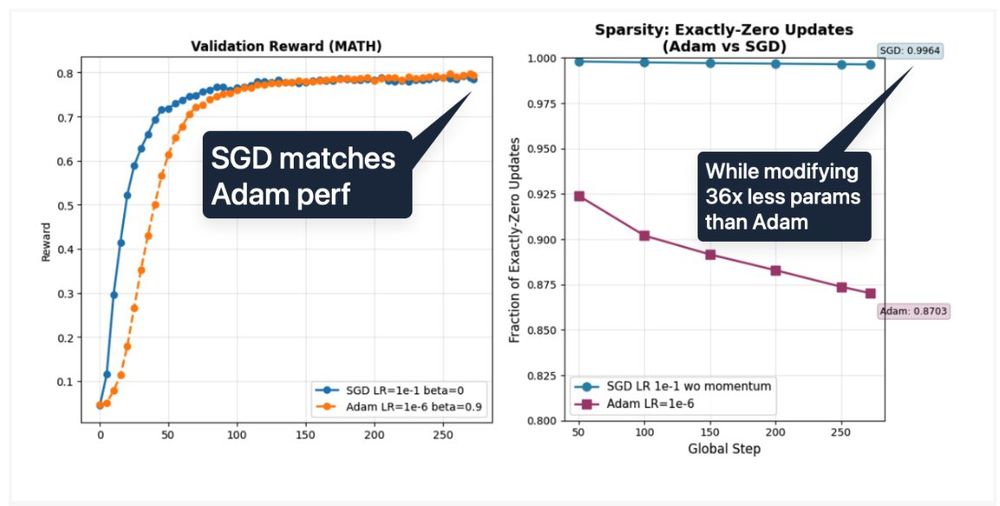

They found that vanilla SGD is

1. As performant as AdamW,

2. Naturally 36x more parameter-efficient (much more so than a rank-1 LoRA).

"Who is Adam? SGD Might Be All We Need For RLVR In LLMs"

www.notion.so/sagnikm/Who-...

They have optimized and fine-tuned Whisper models to handle arbitrary audio chunks, compressed them with ANNA, and added streaming inference support for both Apple's M-series processors and NVIDIA GPUs (e.g., L40).

- Dimension-robust orthogonalization via adaptive Newton iterations with size-aware coefficients

- Optimization-robust updates using proximal methods that dampen harmful outliers while preserving useful gradient
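
For readers unfamiliar with Newton-style orthogonalization, here is a minimal sketch of the classic fixed-coefficient cubic Newton-Schulz iteration. It is not the adaptive, size-aware variant the post describes; the function name `newton_schulz_orthogonalize`, the step count, and the example matrix are all hypothetical choices for illustration.

```python
import numpy as np

def newton_schulz_orthogonalize(G, steps=15):
    """Approximate the orthogonal polar factor of a full-rank matrix G.

    Classic cubic iteration X <- 1.5*X - 0.5*(X X^T) X with fixed
    coefficients (an assumption; the post's method adapts them).
    """
    # Frobenius normalization keeps every singular value at or below 1,
    # inside the convergence region of the iteration.
    X = G / (np.linalg.norm(G) + 1e-12)
    # Work on the wide orientation so X @ X.T is the smaller Gram matrix.
    transposed = X.shape[0] > X.shape[1]
    if transposed:
        X = X.T
    for _ in range(steps):
        X = 1.5 * X - 0.5 * (X @ X.T) @ X
    return X.T if transposed else X

# Hypothetical 2x3 "gradient" matrix with full row rank.
G = np.array([[2.0, 1.0, 0.0],
              [1.0, 3.0, 1.0]])
Q = newton_schulz_orthogonalize(G)
gram = Q @ Q.T  # close to the 2x2 identity once the iteration converges
```

Each step pushes the singular values of `X` toward 1 while leaving the singular vectors unchanged, which is why the result has orthonormal rows.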

How to effectively and efficiently learn a low-dimensional distribution of data in a high-dimensional space and then transform the distribution to a compact and structured representation?

CLIP is a popular method for learning multimodal latent spaces with well-organized semantics. Despite its wide range of applications, CLIP's latent space is known to fail at handling complex visual-textual interactions.

At the core of the attention mechanism in LLMs are three matrices: Query, Key, and Value. These matrices are how transformers actually pay attention to different parts of the input.
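
As a rough illustration of how Query, Key, and Value interact, here is a minimal single-head scaled dot-product attention sketch in NumPy. The shapes, the random weights, and the names `attention`, `W_q`, `W_k`, and `W_v` are assumptions made for the example; real LLMs add multiple heads, causal masking, and learned projections.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product attention over token embeddings X."""
    Q = X @ W_q  # what each token is looking for
    K = X @ W_k  # what each token offers for matching
    V = X @ W_v  # the content that gets mixed together
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # pairwise token similarity
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))  # 4 tokens, embedding size 8 (illustrative)
W_q, W_k, W_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = attention(X, W_q, W_k, W_v)
```

Row `i` of `weights` says how much token `i` attends to every other token, and `out` is the corresponding weighted mix of the Value vectors.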

Parallel decoding is a fight to increase speed while maintaining fluency and diversity, especially with the proliferation of diffusion language models.

danielmisrael.github.io/posts/2025/1...

kimi.com/slides

You could even argue that crypto is helping drive gold’s price higher.

Fully open source, local semantic code search for Claude Code that works. osgrep -v2 is live!

36% faster answers, 23% cheaper, 70% win rate

Project, paper, supplement, and code (???): light.princeton.edu/publication/...

1️⃣ Prediction: learns to "ride" the teacher's vector field, constructing the generative path.

2️⃣ Correction: stabilizes the trajectory by actively rectifying compounding errors.

Repo: github.com/Tongyi-MAI/Z-Image

ModelScope: modelscope.ai/models/Tongy...

HuggingFace: huggingface.co/Tongyi-MAI/Z...

Z-Image gallery: modelscope.cn/studios/Tong...

Through systematic optimization, it proves that top-tier performance is achievable without relying on enormous model sizes, delivering strong results in photorealistic generation and bilingual

Homepage: research.nvidia.com/labs/lpr/Too...

Model: huggingface.co/nvidia/Orche...

Data: huggingface.co/datasets/nvi...

Code: github.com/NVlabs/ToolO...

Findings:

👉 Just prompting the agent workflow won’t cut it. It’s not how you build the best agent.

👉 Without learning, workflows plateau fast.

⚡ 1.55× faster async rollout dispatch

🛠 Lightweight tool + task integration

🔄 Backend-agnostic (SkyRL-train / VeRL / Tinker)

Repo: github.com/NovaSky-AI/S...

Paper: arxiv.org/abs/2511.16108

Paper: www.arxiv.org/abs/2511.089...

Model: ???

ageron.github.io/homlp/HOMLP_...
