I've seen some teams succeed by replacing prompts with smaller, more deterministic components and improving reliability with fine-tuning. Has anyone else had success with this approach?

Seems to help a lot with agents

America is cooked

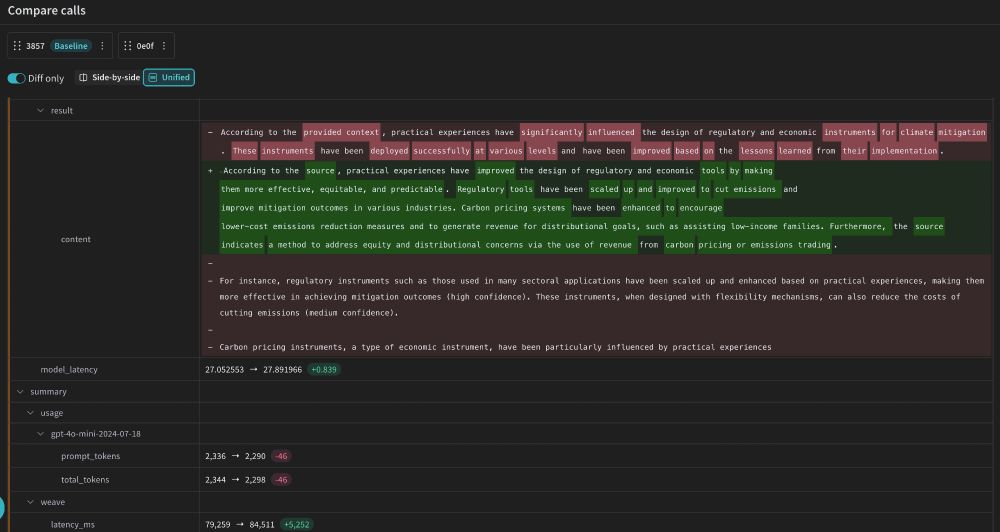

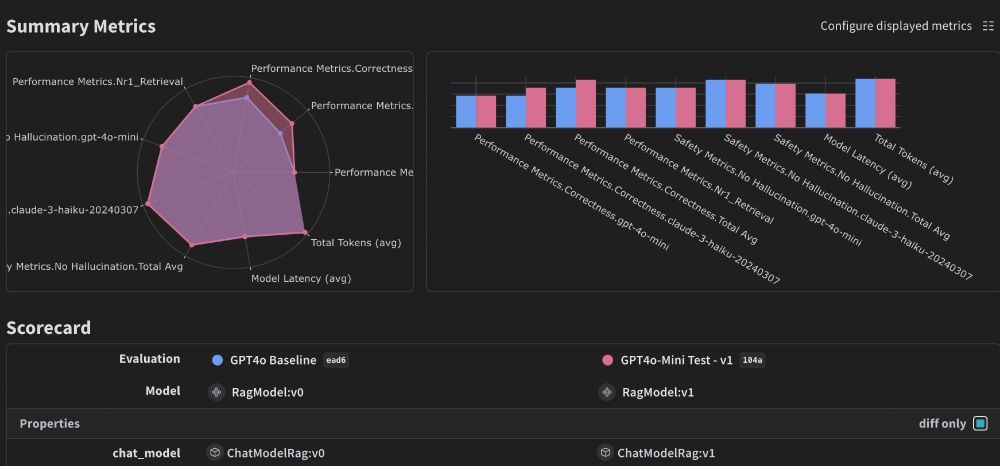

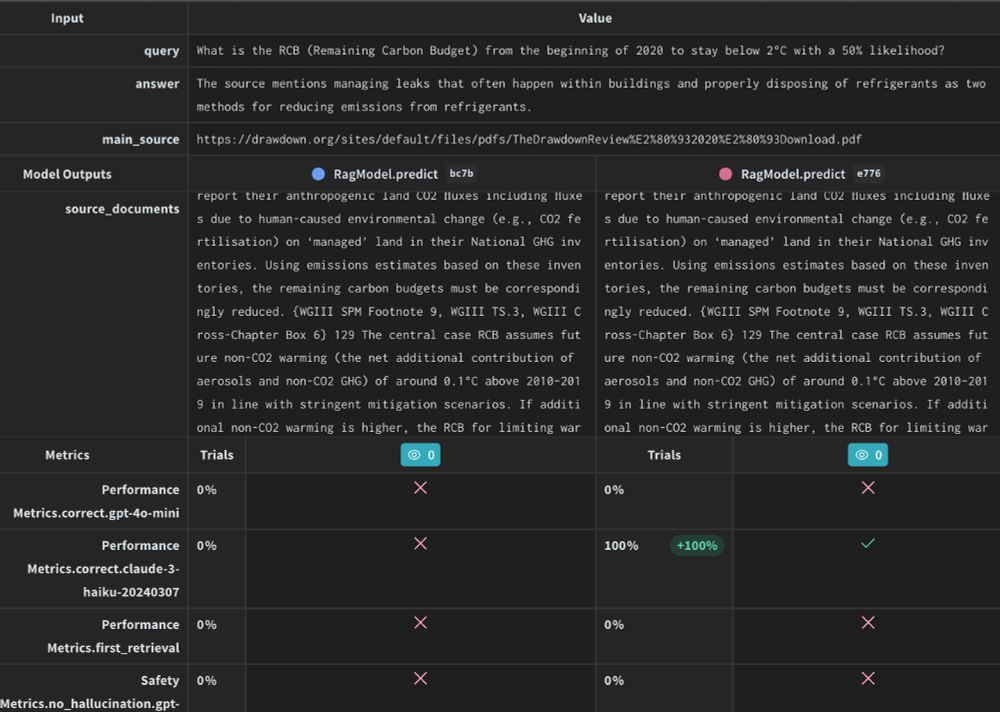

At wandb, we've spent years thinking about experiment comparison. We've added new tools for comparing the pieces of LLM app dev: code, prompts, models, configs, outputs, eval metrics, eval predictions, and eval scores.

wandb.me/weave

It’s a minefield out there, people.

Learning to use LLMs really is a whole lot easier if you apply "person" metaphors to them

I trust people to figure out that they're not sci-fi AI entities once they really start digging in and using them

An llms.txt file is a way to tell an LLM about your website. In the .txt file, you include links to other files where it can find more info.

- The llms.txt file isn't the file you send to an LLM; you use it to generate a Markdown (.md) file for the LLM.
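
A minimal Python sketch of that generation step, assuming the layout described in the llms.txt proposal: an H1 title, a `>` summary, then `## ` sections that list files as `- [title](url): notes`. The names `parse_llms_txt`, `build_context`, and the `fetch` callback are hypothetical, not part of any official tooling, and the dict lookup stands in for real HTTP fetching.

```python
import re
from typing import Callable

# Matches list entries like "- [Quickstart](https://example.com/quickstart.md): optional notes".
# This line shape is assumed from the llms.txt proposal; it is not a full Markdown parser.
LINK_RE = re.compile(r"^\s*-\s*\[(?P<title>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<notes>.*))?$")


def parse_llms_txt(text: str) -> list[tuple[str, str, str]]:
    """Return (section, title, url) for every linked file in the llms.txt text."""
    section = ""
    links = []
    for line in text.splitlines():
        if line.startswith("## "):
            section = line[3:].strip()  # remember which H2 section we're in
            continue
        match = LINK_RE.match(line)
        if match:
            links.append((section, match.group("title"), match.group("url")))
    return links


def build_context(text: str, fetch: Callable[[str], str]) -> str:
    """Expand llms.txt into one Markdown document by inlining each linked file."""
    # Keep everything above the first H2 (title and summary) as the header.
    header = text.split("\n## ", 1)[0].strip()
    parts = [header]
    for section, title, url in parse_llms_txt(text):
        heading = f"{section}: {title}" if section else title
        parts.append(f"## {heading}\n\n{fetch(url).strip()}")
    return "\n\n".join(parts) + "\n"


# Hypothetical llms.txt and linked pages; a dict lookup stands in for HTTP fetching.
example = """# Example Project

> A hypothetical project used to illustrate the format.

## Docs

- [Quickstart](https://example.com/quickstart.md): how to get started
- [API](https://example.com/api.md)
"""
pages = {
    "https://example.com/quickstart.md": "Install it and run it.",
    "https://example.com/api.md": "Call `run()` to start.",
}
print(build_context(example, pages.__getitem__))
```

Passing the fetcher in as a parameter keeps the parsing separate from networking, so you could swap the dict for a real HTTP client without touching the rest.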

Then, once the LLM and the human annotator reach good inter-annotator agreement, you can transition from manual to automated evaluation. You get faster iteration, and the human annotator can focus on finding edge cases!
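
A minimal sketch of that agreement check in Python, using Cohen's kappa as the agreement metric (one common choice; the post doesn't name one). The 0.8 cutoff and the `ready_to_automate` helper are hypothetical, so pick a threshold that fits your own task.

```python
from collections import Counter

# Hypothetical agreement cutoff; tune it for your task.
KAPPA_THRESHOLD = 0.8


def cohens_kappa(human: list[str], llm: list[str]) -> float:
    """Agreement between two annotators on the same items, corrected for chance."""
    if len(human) != len(llm) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(human)
    # Observed agreement: fraction of items where both annotators gave the same label.
    p_o = sum(h == m for h, m in zip(human, llm)) / n
    # Chance agreement: probability of matching if each annotator picked labels
    # independently at their own observed frequencies.
    h_counts, m_counts = Counter(human), Counter(llm)
    p_e = sum(h_counts[label] * m_counts[label] for label in h_counts) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label for every item: perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def ready_to_automate(human: list[str], llm: list[str], threshold: float = KAPPA_THRESHOLD) -> bool:
    """Hypothetical gate: switch to the LLM judge only once agreement clears the threshold."""
    return cohens_kappa(human, llm) >= threshold


human = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail"]
llm = ["pass", "pass", "fail", "pass", "pass", "pass", "pass", "fail"]
print(round(cohens_kappa(human, llm), 3))  # 0.714
print(ready_to_automate(human, llm))  # False: keep labeling by hand for now
```

Here the LLM agrees with the human on 7 of 8 items, but kappa is only about 0.71 because much of that agreement is expected by chance when both annotators say "pass" most of the time.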

And they nonchalantly said "I'll write it in Redstone", to which I almost let loose a chuckle until...

Weave is a lightweight LLM tracing and evaluation toolkit that focuses on letting you iterate fast and making sure your production LLM-based application doesn't degrade when you change prompts or models!
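
To make the idea concrete, here's a plain-Python sketch of the two pieces: a decorator that traces each call's inputs, output, and latency, plus a check that a changed prompt or model doesn't score worse than the baseline. This is not Weave's API: `traced`, `evaluate`, `is_regression`, the exact-match metric, and the 0.02 tolerance are all hypothetical stand-ins.

```python
import functools
import time
from typing import Any, Callable

TRACES: list[dict[str, Any]] = []  # in-memory trace store (a stand-in for a real tracing backend)


def traced(fn: Callable) -> Callable:
    """Record inputs, output, and latency of every call to fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        TRACES.append({
            "op": fn.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": result,
            "latency_s": time.perf_counter() - start,
        })
        return result
    return wrapper


def evaluate(app: Callable[[str], str], dataset: list[tuple[str, str]]) -> float:
    """Fraction of examples where the app's answer exactly matches the expected answer."""
    hits = sum(app(q) == expected for q, expected in dataset)
    return hits / len(dataset)


def is_regression(baseline: float, candidate: float, tolerance: float = 0.02) -> bool:
    """Flag the candidate if it scores worse than the baseline by more than the tolerance."""
    return candidate < baseline - tolerance


@traced
def app_v1(question: str) -> str:
    # Hypothetical stand-in for an LLM call using the old prompt.
    return question.upper()


@traced
def app_v2(question: str) -> str:
    # Hypothetical stand-in for the same app after a prompt change that broke one case.
    return question.upper() if question != "b" else "?"


dataset = [("a", "A"), ("b", "B"), ("c", "C"), ("d", "D")]
base, cand = evaluate(app_v1, dataset), evaluate(app_v2, dataset)
print(base, cand, is_regression(base, cand))  # 1.0 0.75 True
```

Every call lands in `TRACES`, so when the regression check fires you can look up exactly which inputs the new version got wrong.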