If you are interested in the intersection of linguistics, cognitive science, & AI, I encourage you to apply!

PhD link: rtmccoy.com/prospective_...

Postdoc link: rtmccoy.com/prospective_...

What skills do you need to be a successful researcher?

The list seems long: collaborating, writing, presenting, reviewing, etc.

But I argue that many of these skills can be unified under a single overarching ability: theory of mind

rtmccoy.com/posts/theory...

[very minor spoilers for both]

1/n

Recent neural networks capture properties long thought to require symbols: compositionality, productivity, rapid learning

So what role should symbols play in theories of the mind? For our answer...read on!

Paper: arxiv.org/abs/2508.05776

1/n

(Though at least we're not as bad as mathematicians!)

13/n

12/n

10/n

8/n

7/n

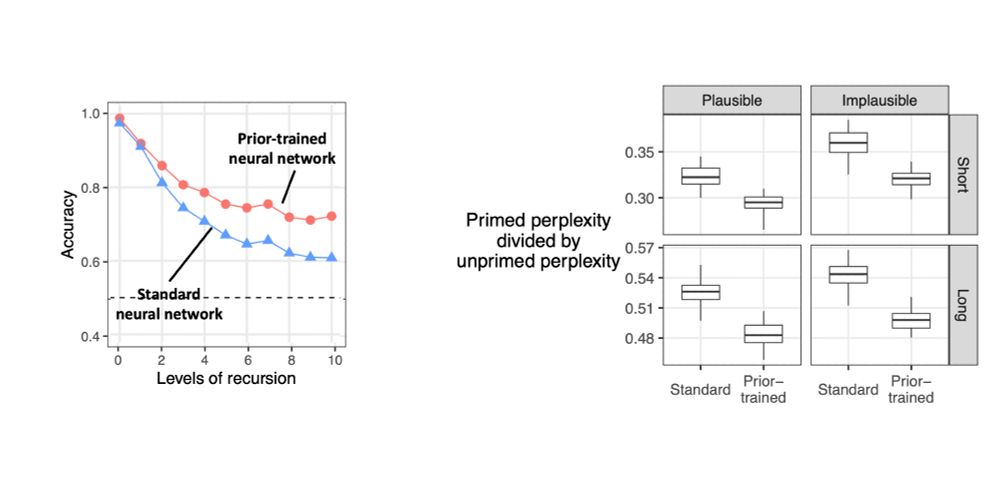

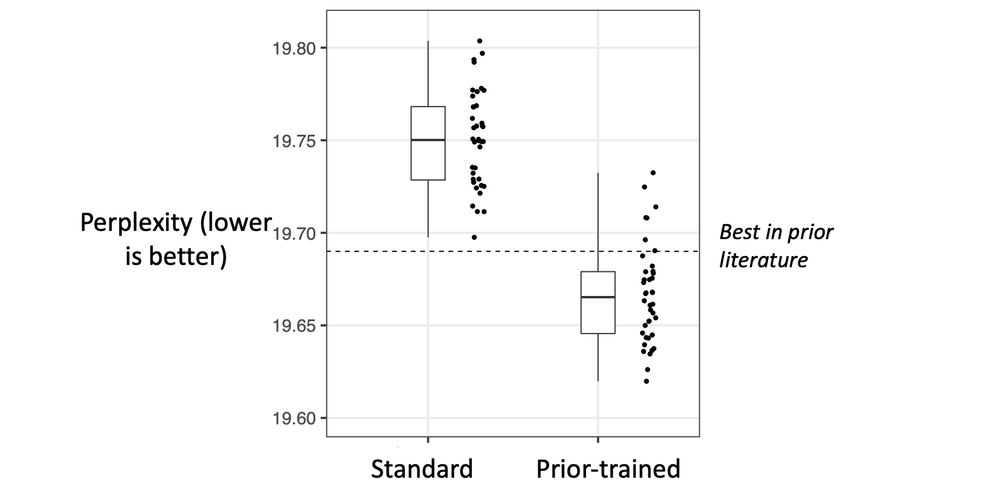

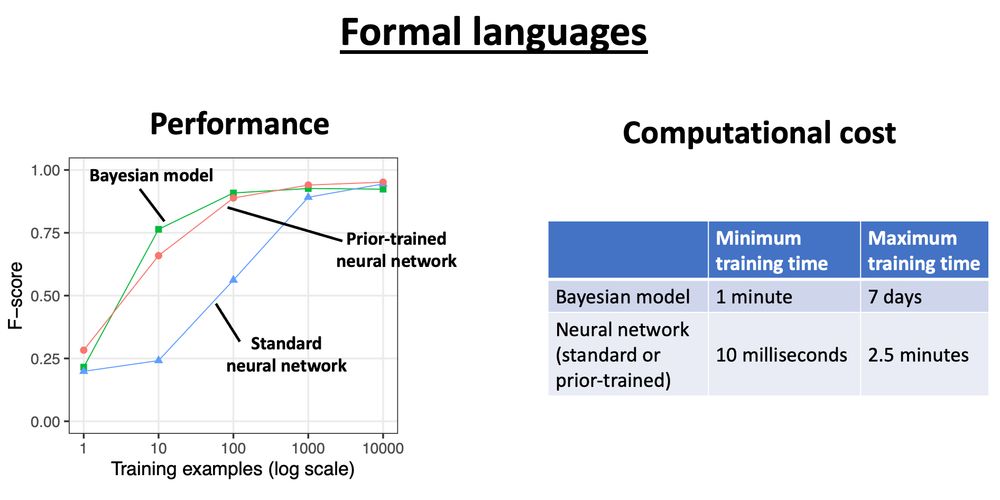

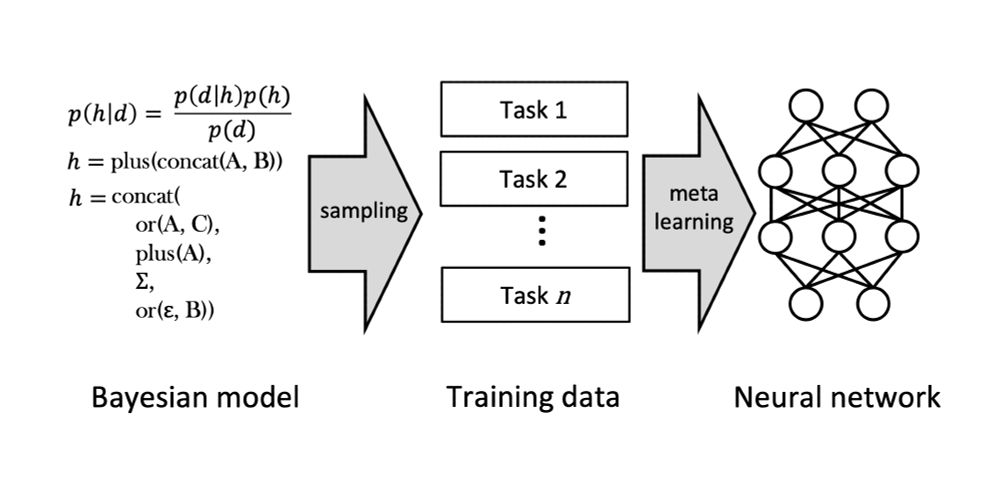

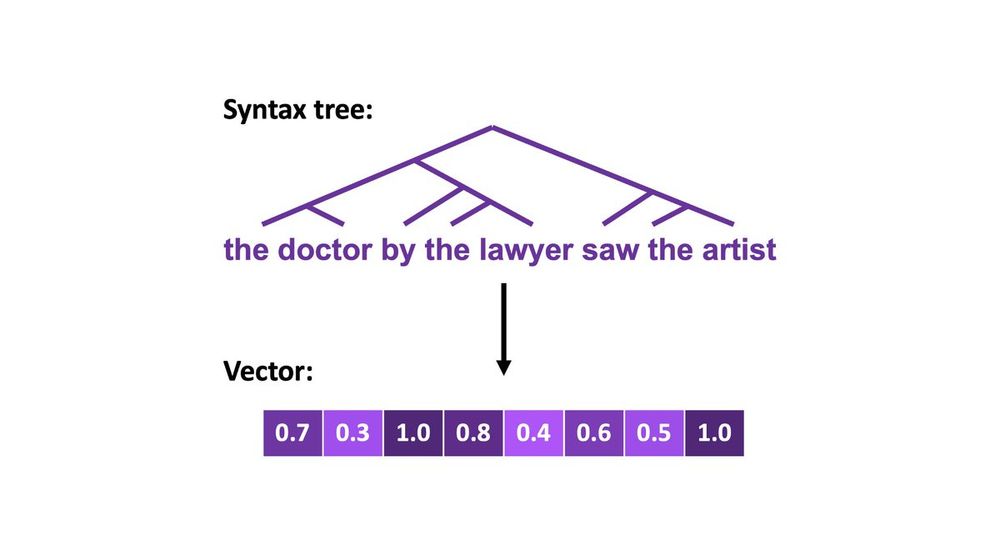

1. Use a Bayesian model to define an inductive bias (a prior)

2. Sample learning tasks from the Bayesian model

3. Have a neural network meta-learn from these sampled tasks, to give it the Bayesian model's prior

5/n
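The three steps above can be sketched on a toy problem. Everything specific here is an assumption for illustration, not the paper's setup: the "Bayesian model" is a Beta(8, 2) prior over a coin's bias, each "task" is a handful of flips from one coin, the "network" is a single logit parameter, and a Reptile-style first-order update stands in for whichever meta-learning algorithm the paper uses (the post does not say).

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_task(rng, alpha=8.0, beta=2.0, n_flips=10):
    """Steps 1-2: draw a coin bias from the (assumed) Beta prior, then sample flips."""
    bias = rng.betavariate(alpha, beta)
    return [1 if rng.random() < bias else 0 for _ in range(n_flips)]

def adapt(theta, flips, inner_lr=0.5, inner_steps=3):
    """Inner loop: a few gradient steps on the mean Bernoulli negative log-likelihood."""
    mean_flip = sum(flips) / len(flips)
    for _ in range(inner_steps):
        grad = sigmoid(theta) - mean_flip  # d(NLL)/d(theta) when p = sigmoid(theta)
        theta -= inner_lr * grad
    return theta

def meta_learn(n_tasks=2000, meta_lr=0.05, seed=0):
    """Step 3: Reptile-style outer loop that moves the initialization
    toward the parameters reached after adapting to each sampled task."""
    rng = random.Random(seed)
    theta0 = 0.0  # initial guess P(heads) = 0.5
    for _ in range(n_tasks):
        flips = sample_task(rng)
        theta0 += meta_lr * (adapt(theta0, flips) - theta0)
    return theta0

theta0 = meta_learn()
print(f"learned initial guess for P(heads): {sigmoid(theta0):.2f}")
```

In this toy the prior mean is 8 / (8 + 2) = 0.8, so the learned starting point should drift from 0.5 toward roughly that value: the prior ends up stored in the initialization rather than written down explicitly.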

3/n

2/n

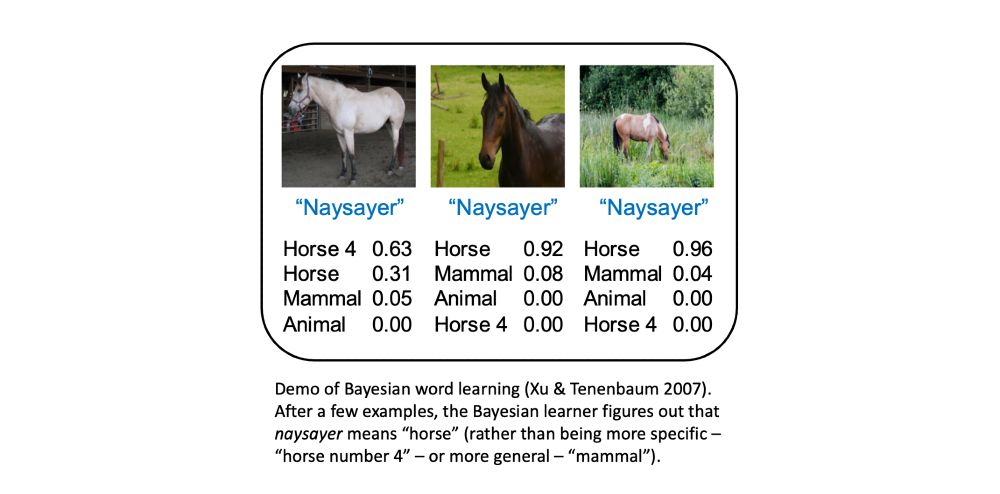

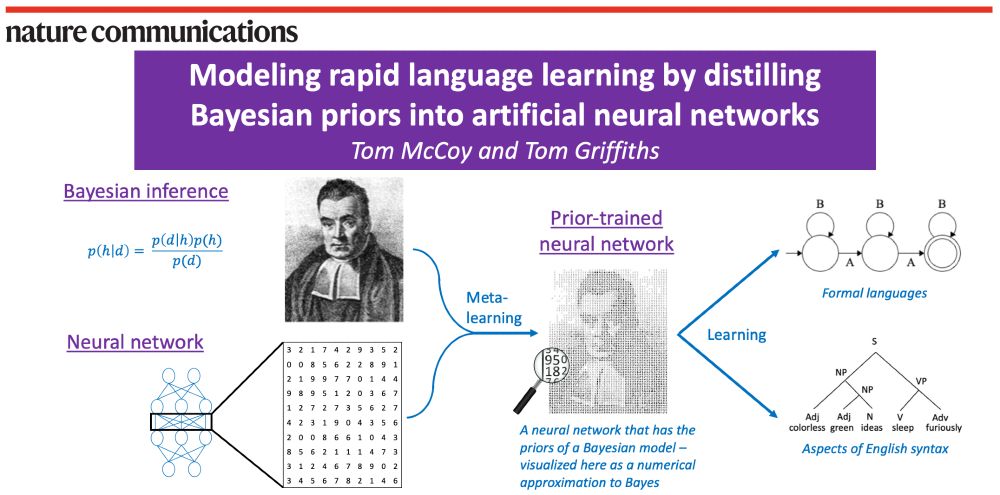

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n

This one has some personal connections, described in the WordPlay article by @samcorbin.bsky.social (contains spoilers): www.nytimes.com/2025/05/06/c...

I hope you enjoy!

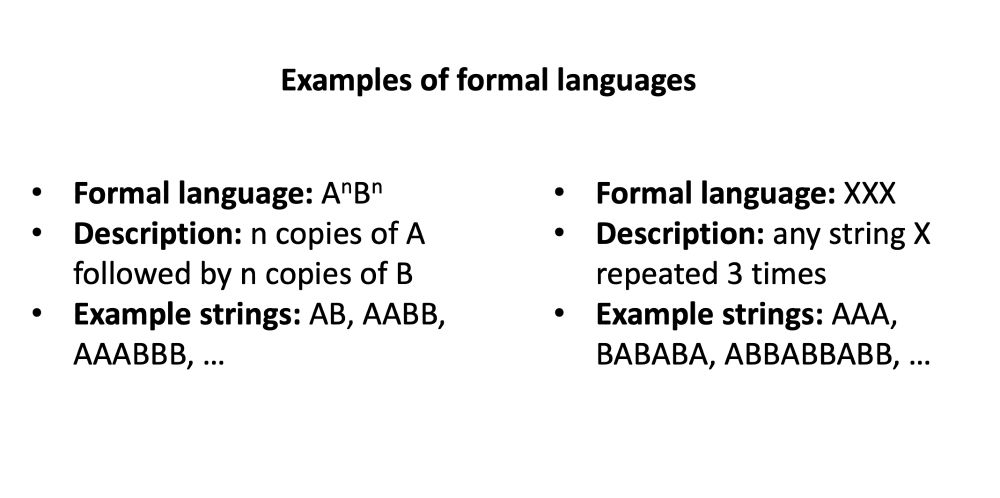

- I trained a Transformer language model on sentences sampled from a PCFG

- The students' task: Given the Transformer, try to infer the PCFG (w/ a leaderboard for who got closest)

Would recommend!

1/n
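For readers unfamiliar with the setup, here is a minimal sketch of the data-generating side: sampling sentences from a PCFG. The grammar below is a made-up toy (the post does not give the one used in class), and the Transformer training step is omitted entirely.

```python
import random

# Hypothetical toy PCFG: each nonterminal maps to (probability, right-hand side) pairs.
# Any symbol that is not a key of GRAMMAR is treated as a terminal word.
GRAMMAR = {
    "S":   [(1.0, ["NP", "VP"])],
    "NP":  [(0.7, ["Det", "N"]), (0.3, ["Det", "N", "PP"])],
    "VP":  [(0.6, ["V", "NP"]), (0.4, ["V"])],
    "PP":  [(1.0, ["P", "NP"])],
    "Det": [(0.5, ["the"]), (0.5, ["a"])],
    "N":   [(0.4, ["dog"]), (0.3, ["cat"]), (0.3, ["linguist"])],
    "V":   [(0.5, ["saw"]), (0.5, ["slept"])],
    "P":   [(1.0, ["near"])],
}

def sample(symbol, rng):
    """Expand `symbol` top-down, choosing each rule according to its probability."""
    if symbol not in GRAMMAR:
        return [symbol]  # terminal: emit the word itself
    weights = [p for p, _ in GRAMMAR[symbol]]
    expansions = [rhs for _, rhs in GRAMMAR[symbol]]
    rhs = rng.choices(expansions, weights=weights, k=1)[0]
    tokens = []
    for child in rhs:
        tokens.extend(sample(child, rng))
    return tokens

rng = random.Random(0)
for _ in range(3):
    print(" ".join(sample("S", rng)))
```

The only recursion is NP → Det N PP → ... NP, taken with probability 0.3, so expansions terminate with probability 1. A corpus of such samples is what the language model would be trained on; inferring the rules and probabilities back from the trained model is the students' task.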

- Choose a linguistic phenomenon in a language other than English

- Give a 3-minute presentation about that phenomenon & how it would pose a challenge for computational models

Would recommend!

1/n

Interestingly, the effects don’t just show up in accuracy (top) but also in how many tokens o1 consumes to perform the task (bottom)!

3/4

2/4

Here's a shameless plug for our work comparing o1 to previous LLMs (extending "Embers of Autoregression"): arxiv.org/abs/2410.01792

- o1 shows big improvements over GPT-4

- But qualitatively it is still sensitive to probability

1/4

Topic: Will scaling current LLMs be sufficient to resolve major open math conjectures?

If you are interested in the intersection of linguistics, cognitive science, and AI, I encourage you to apply!

Postdoc link: rtmccoy.com/prospective_...

PhD link: rtmccoy.com/prospective_...
