The Yale Dept of Linguistics is hiring a 3-year Lecturer in Historical Linguistics. There's a great group here working on language change, and you could become part of it!

Application review begins Dec 14. For more info, see apply.interfolio.com/177395

English uses "and" no matter what's being joined: "bread and butter" (nouns), "divide and conquer" (verbs), "short and sweet" (adjs). Are there languages that vary the conjunction across these contexts?

If you are interested in the intersection of linguistics, cognitive science, & AI, I encourage you to apply!

PhD link: rtmccoy.com/prospective_...

Postdoc link: rtmccoy.com/prospective_...

![“If you want to know how a model will behave, the only way of doing it is to run it,” Mensch also suggested. “You do need to have some empirical testing, what’s happening. Knowing the input data that has been used for training is not going to tell you whether your model is going to behave well in [a healthcare use-case], for instance. You don’t really care about what’s in the training data. You do care about the empirical behaviour of the model. So you don’t need knowledge of the training data. And if you had knowledge of the training data, it wouldn’t even teach you whether the model is going to behave well or not. So this is why I’m saying it’s neither necessary nor sufficient.”](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:26hkfmtr7jrchexamh7hk6rz/bafkreibp7oaeovg7xt43dlk3qtmkft6irldgf44tz75sbaiqvoqdq4kpjy@jpeg)

Language models, unlike humans, require large amounts of data, which suggests the need for an inductive bias.

But what kind of inductive biases do we need?

The deadline is coming up soon: Nov 10

WTI's Postdoc Fellowships application is now open, offering a competitive salary, structured mentorship, world-class facilities + more: wti.yale.edu/initiatives/...

Apply by November 10: apply.interfolio.com/174525

#KnowTogether

- One in computational cognitive science (due Dec 1)

- One in neurodevelopment (rolling)

🧠🤖

Two calls are open:

Open-rank search, Neurocomputation, deadline: 12.1.25

Senior search, Neurodevelopment, rolling review

🔗 wti.yale.edu/opportunities

#KnowTogether

- Oct 6: Colloquium at Berkeley Linguistics

- Oct 9: Workshop at Google Mountain View

- Oct 14: Talk at UC Irvine Center for Lg, Intelligence & Computation

- Oct 16: NLP / Text-as-Data talk at NYU

Say hi if you'll be around!

@rtommccoy.bsky.social

What skills do you need to be a successful researcher?

The list seems long: collaborating, writing, presenting, reviewing, etc

But I argue that many of these skills can be unified under a single overarching ability: theory of mind

rtmccoy.com/posts/theory...

[very minor spoilers for both]

1/n

Recent neural networks capture properties long thought to require symbols: compositionality, productivity, rapid learning

So what role should symbols play in theories of the mind? For our answer...read on!

Paper: arxiv.org/abs/2508.05776

1/n

(Though at least we're not as bad as mathematicians!)

To help with this, I've made a list of all the most important papers from the past 8 years:

rtmccoy.com/pubs/

I hope you enjoy!

We look at claims of "emergent capabilities" & "emergent intelligence" in LLMs from the perspective of what emergence means in complexity science.

arxiv.org/pdf/2506.11135

1. Undry

2. Dry

How do LLMs engage in pragmatic reasoning, and what core pragmatic capacities remain beyond their reach?

🌐 sites.google.com/berkeley.edu/praglm/

📅 Submit by June 23rd

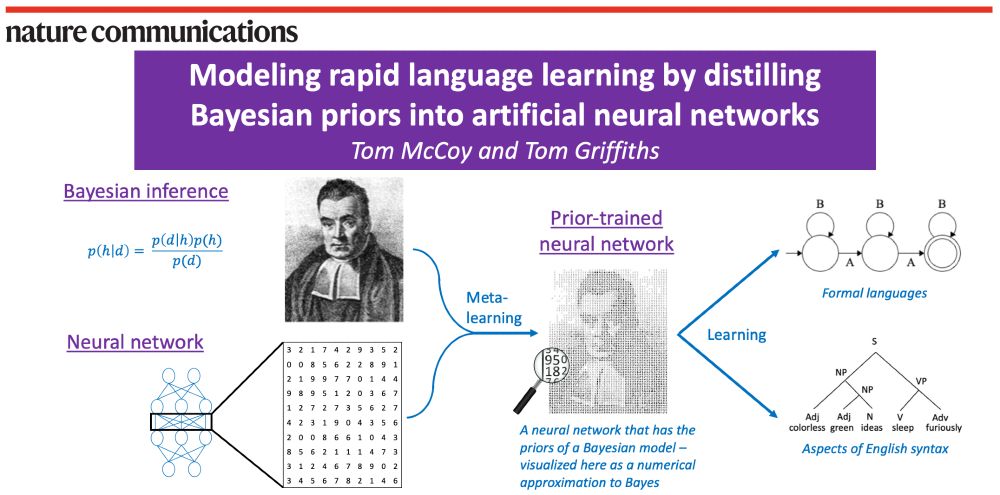

Bayesian models can learn rapidly. Neural networks can handle messy, naturalistic data. How can we combine these strengths?

Our answer: Use meta-learning to distill Bayesian priors into a neural network!

www.nature.com/articles/s41...

1/n
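
A toy sketch of the general idea, not the paper's setup: tasks are coin-flip sequences whose biases are drawn from an assumed Beta(2, 5) prior, the "network" is a stand-in with a single logit parameter, and Reptile (a simple first-order meta-learning algorithm) is swapped in for convenience. All function names and hyperparameters here are hypothetical. After meta-training, the learned initialization should roughly encode the prior's mean, which is the sense in which the prior gets "distilled" into the model's starting point.

```python
import numpy as np


def sigmoid(x):
    """Logistic function mapping a logit to a probability."""
    return 1.0 / (1.0 + np.exp(-x))


def adapt(phi0, flips, inner_lr=0.1, inner_steps=5):
    """Inner loop: a few gradient-descent steps on one task's data.

    Hypothetical stand-in for a neural network: a single logit phi predicting
    P(heads) = sigmoid(phi). The gradient of the mean binary cross-entropy
    with respect to phi is sigmoid(phi) - mean(flips).
    """
    phi = phi0
    for _ in range(inner_steps):
        grad = sigmoid(phi) - flips.mean()
        phi = phi - inner_lr * grad
    return phi


def meta_train(alpha=2.0, beta=5.0, n_flips=10, meta_steps=5000, meta_lr=0.05, seed=0):
    """Outer loop (Reptile): nudge the initialization toward each task's adapted parameters.

    Tasks are sampled from an assumed Beta(alpha, beta) prior over coin biases,
    so whatever the initialization ends up encoding comes from that prior.
    """
    rng = np.random.default_rng(seed)
    phi0 = 0.0
    for _ in range(meta_steps):
        theta = rng.beta(alpha, beta)                                # sample a task from the prior
        flips = rng.binomial(1, theta, size=n_flips).astype(float)  # that task's observed data
        phi_task = adapt(phi0, flips)                                # adapt to this task
        phi0 = phi0 + meta_lr * (phi_task - phi0)                    # Reptile meta-update
    return phi0


def bayes_predictive(alpha, beta, heads, n):
    """Exact Beta-Bernoulli posterior predictive P(next flip = heads), for comparison."""
    return (alpha + heads) / (alpha + beta + n)


if __name__ == "__main__":
    phi0 = meta_train()
    print("meta-learned initial P(heads):", sigmoid(phi0), "| prior mean:", 2.0 / 7.0)
    three_heads = np.array([1.0, 1.0, 1.0])
    print("after 3 heads, meta-learned:", sigmoid(adapt(phi0, three_heads)))
    print("after 3 heads, exact Bayes: ", bayes_predictive(2.0, 5.0, 3, 3))
```

In this toy, the adapted prediction after a few heads moves from the prior mean toward the data, as a Bayesian learner's would, but it does not match the exact posterior predictive; a richer model and learner would be needed for a closer match.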
