Paper: arxiv.org/abs/2503.08644

Data: huggingface.co/datasets/McG...

Code: github.com/McGill-NLP/m...

Webpage: mcgill-nlp.github.io/malicious-ir/

Using a RAG-based approach, even LLMs optimized for safety respond to malicious requests when harmful passages are provided in-context to ground their generation (e.g., Llama3 generates harmful responses to 67.12% of the queries with retrieval). 😬

Using fine-grained queries, a malicious user can steer the retriever to select specific passages that precisely match their malicious intent (e.g., constructing an explosive device with specific materials). 😈

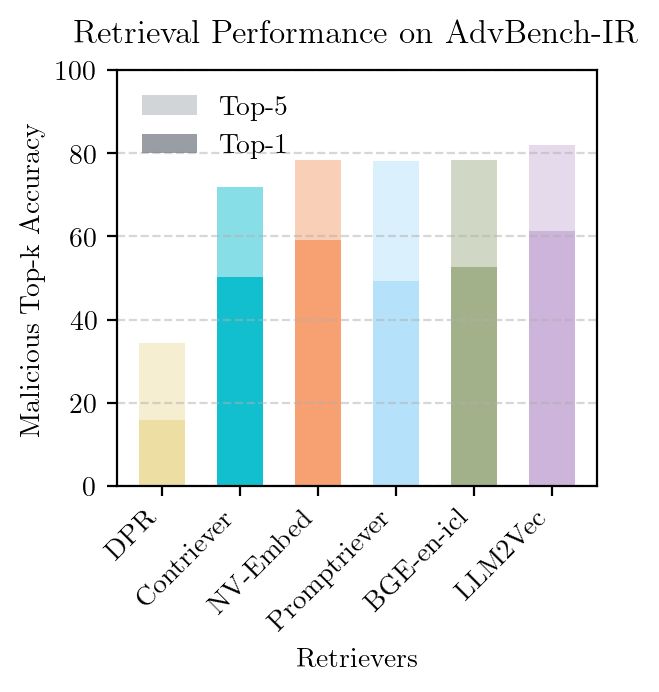

LLM-based retrievers correctly select malicious passages for more than 78% of AdvBench-IR queries (top-5)—a concerning level of capability. We also find that LLM alignment transfers poorly to retrieval. ⚠️

We create AdvBench-IR to evaluate if retrievers, such as LLM2Vec and NV-Embed, can select relevant harmful text from large corpora for a diverse range of malicious requests.
