7/7

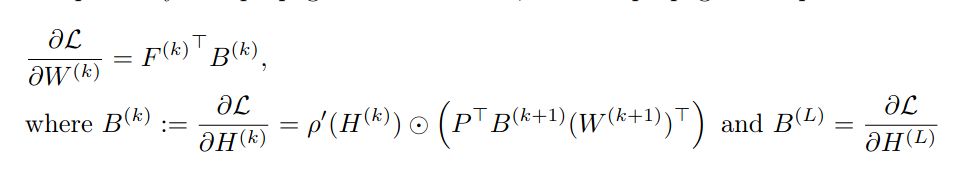

6/7

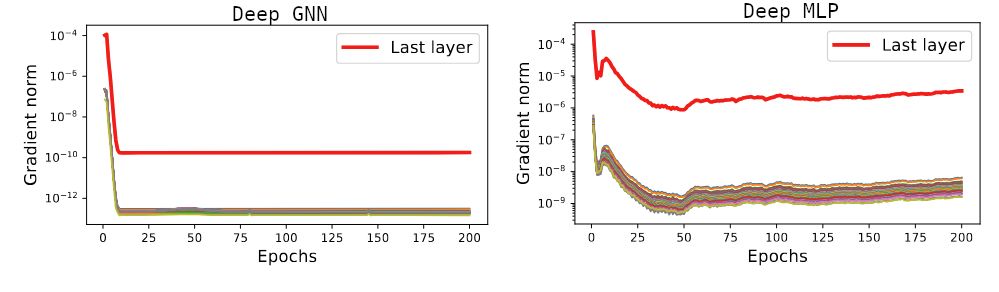

(*at every layer*, due to further mechanisms).

That's the main result of this paper.

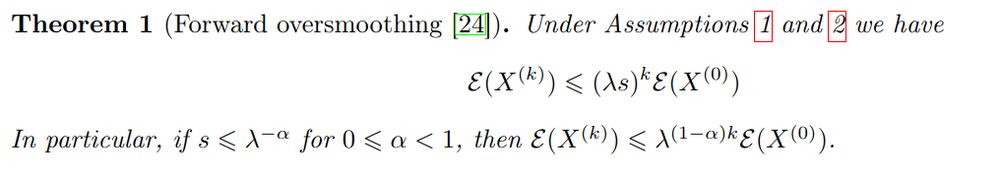

5/7

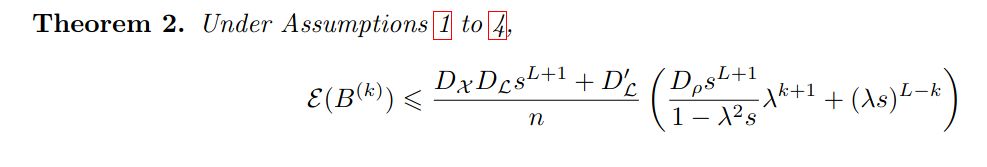

So we *can* compute its oversmoothed limit! It is the **average** of the prediction error...

4/7

Why is it bad for training? Well...

3/7

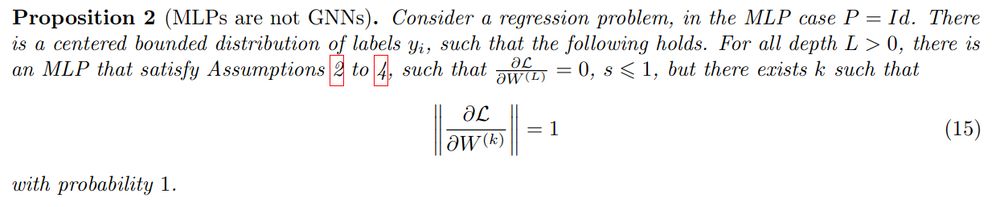

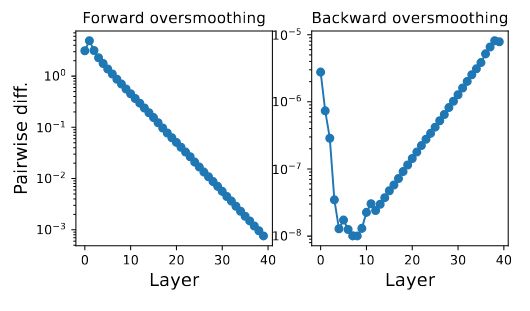

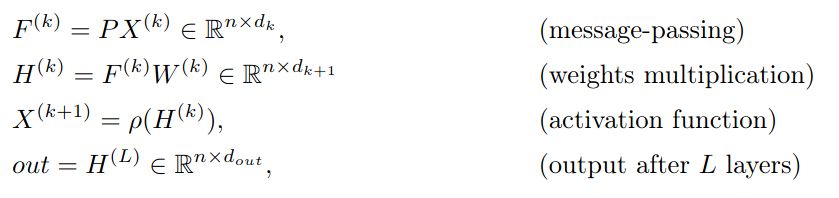

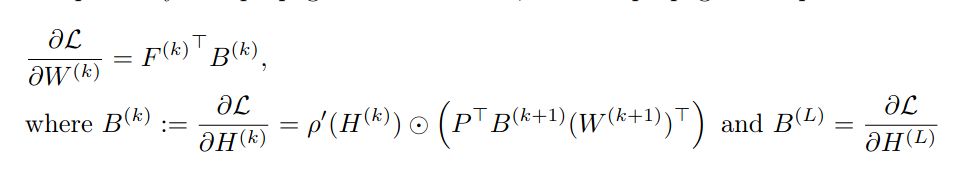

Like the forward pass, the backward pass is multiplied by the graph matrix, so it will oversmooth too...

2/7
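
A minimal sketch of this smoothing effect, under assumptions that are not from the thread: the graph matrix `P` is taken to be the lazy random-walk matrix of a 5-node cycle (symmetric and row-stochastic), and `error` is a made-up per-node signal standing in for whatever is being propagated. The names `cycle_graph_matrix`, `propagate`, and `spread` are hypothetical helpers. With this particular choice of `P`, repeated multiplication pulls every node toward the average of the initial signal; other graph matrices can have a different (for example degree-weighted) limit.

```python
# Sketch only: repeated multiplication by a graph matrix smooths a signal.
# Assumption (not from the thread): P is the lazy random-walk matrix of an
# n-cycle, which is symmetric and row-stochastic, so the mean is preserved.

def cycle_graph_matrix(n):
    """Lazy walk on an n-cycle: 1/2 on the diagonal, 1/4 for each neighbor."""
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        P[i][i] = 0.5
        P[i][(i - 1) % n] += 0.25
        P[i][(i + 1) % n] += 0.25
    return P

def matvec(P, x):
    """Plain matrix-vector product."""
    return [sum(p * v for p, v in zip(row, x)) for row in P]

def propagate(P, x, num_layers):
    """Multiply x by P once per layer (no weights, no nonlinearity)."""
    for _ in range(num_layers):
        x = matvec(P, x)
    return x

def spread(x):
    """Largest deviation from the mean; 0 means fully oversmoothed."""
    mean = sum(x) / len(x)
    return max(abs(v - mean) for v in x)

P = cycle_graph_matrix(5)
error = [1.0, -2.0, 0.5, 3.0, -0.5]  # hypothetical per-node signal

for depth in (0, 1, 10, 100):
    print(depth, spread(propagate(P, error, depth)))
```

The printed spread shrinks with depth, which is the same behavior whether the vector being multiplied is a forward feature or a backward error signal. A real GNN layer also applies weights and nonlinearities, which this sketch leaves out on purpose.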
