Cheers!

Plz share if you like it 🙏🏼🙏🏼😊

Cheers

6/7

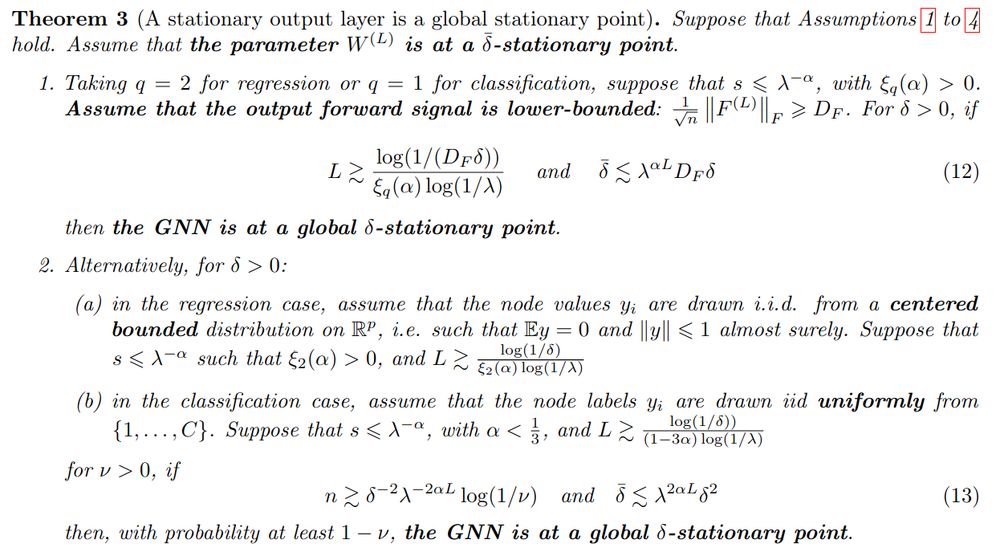

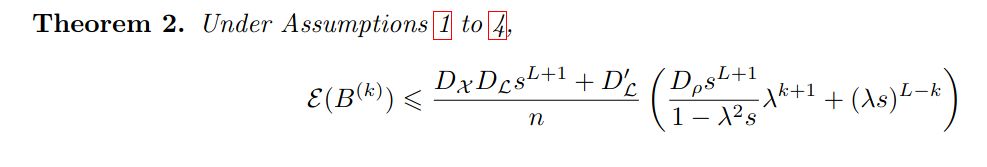

(*at every layer*, due to further mechanisms).

That's the main result of this paper.

5/7

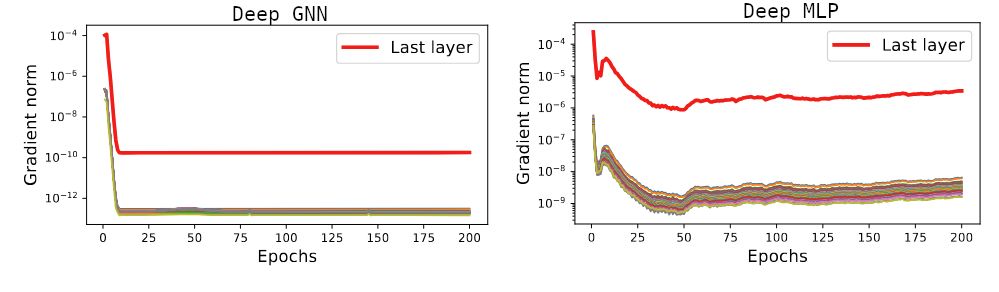

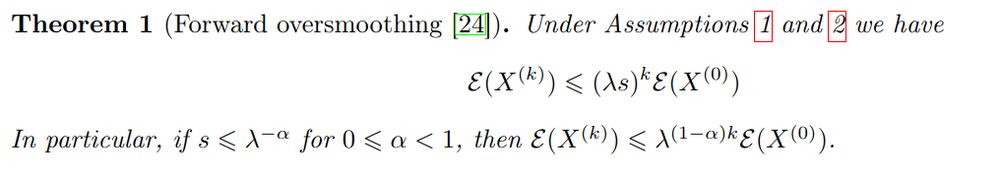

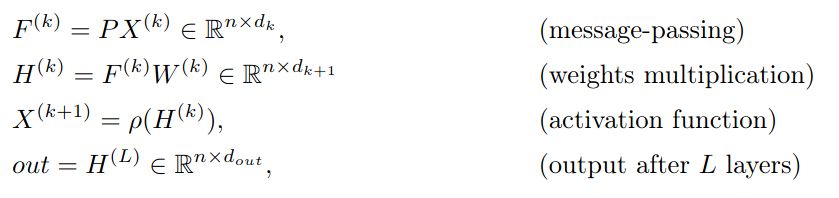

So we *can* compute its oversmoothed limit! It is the **average** of the prediction error...

4/7
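To make the "average" claim concrete, here is a minimal NumPy sketch. It is only a toy under loud assumptions, not the paper's setting: the graph is a 6-node cycle with self-loops (so the graph matrix `P` is symmetric and doubly stochastic), there are no weights or nonlinearities, and `error` is a random stand-in for the per-node prediction error. All names are hypothetical.

```python
import numpy as np

def cycle_graph_matrix(n):
    # Toy assumption: cycle graph with self-loops, row-normalised.
    # Each node averages itself with its two neighbours.
    eye = np.eye(n)
    adj = eye + np.roll(eye, 1, axis=1) + np.roll(eye, -1, axis=1)
    return adj / adj.sum(axis=1, keepdims=True)

def propagate(P, signal, depth):
    # Multiply the signal by the graph matrix `depth` times.
    out = signal.copy()
    for _ in range(depth):
        out = P @ out
    return out

rng = np.random.default_rng(0)
n = 6
P = cycle_graph_matrix(n)
error = rng.normal(size=(n, 2))  # stand-in for per-node prediction error

# The backward signal is repeatedly multiplied by P transposed.
backward = propagate(P.T, error, depth=60)

# Largest deviation of any node's signal from the node-wise average.
spread = np.abs(backward - error.mean(axis=0)).max()
print(spread)
```

In this toy case every row of `backward` ends up numerically equal to the average of `error` over the nodes. For a non-regular graph the limit would presumably be a degree-weighted average instead.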

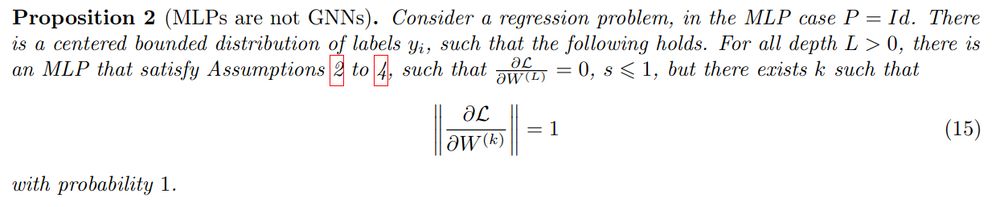

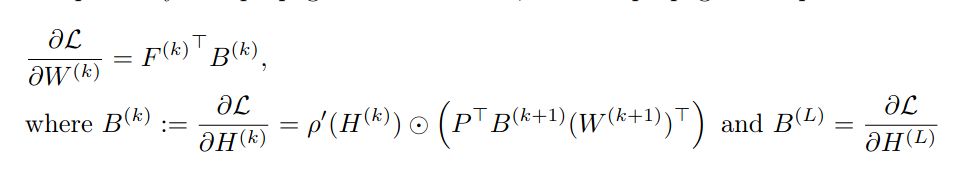

Why is it bad for training? Well...

3/7

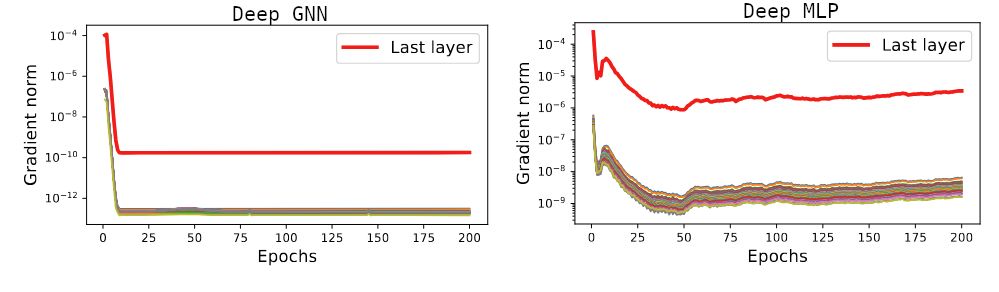

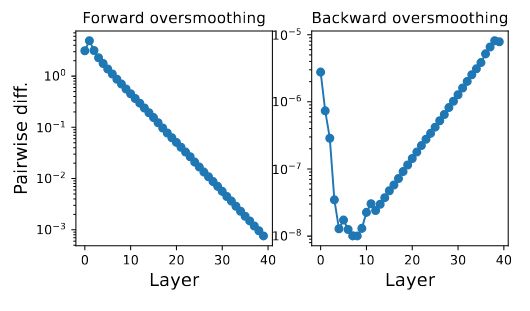

Like the forward pass, the backward pass is multiplied by the graph matrix, so it will oversmooth too...

2/7
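A quick way to see the graph matrix appear in the backward pass is to check it numerically. This is a sketch that assumes a single linear layer `Y = P @ X @ W` with no nonlinearity (a simplification, not the general case), with randomly generated `P`, `X`, `W`, and upstream gradient `G`; all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d_in, d_out = 5, 3, 2

P = rng.random((n, n))
P = P / P.sum(axis=1, keepdims=True)  # row-normalised toy graph matrix
X = rng.normal(size=(n, d_in))        # node features entering the layer
W = rng.normal(size=(d_in, d_out))    # layer weights
G = rng.normal(size=(n, d_out))       # upstream gradient dLoss/dY

def loss(X_in):
    # Scalar whose gradient with respect to Y = P @ X_in @ W is exactly G.
    return float(np.sum(G * (P @ X_in @ W)))

# Chain rule: the gradient with respect to X is multiplied by P transposed.
analytic = P.T @ G @ W.T

# Central finite differences as an independent check.
numeric = np.zeros_like(X)
eps = 1e-6
for i in range(n):
    for j in range(d_in):
        step = np.zeros_like(X)
        step[i, j] = eps
        numeric[i, j] = (loss(X + step) - loss(X - step)) / (2 * eps)

max_gap = np.abs(analytic - numeric).max()
print(max_gap)
```

Stacking many such layers multiplies the backward signal by the transposed graph matrix once per layer, which is where the smoothing comes from.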

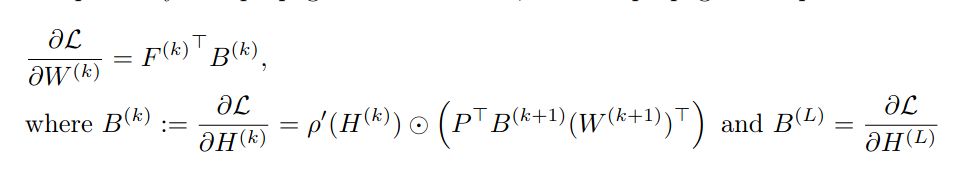

"Backward Oversmoothing: why is it hard to train deep Graph Neural Networks?"

GNNs could learn to anti-oversmooth. But they generally don't. Why is that? We uncover a lot of interesting stuff 🤔 1/7

arxiv.org/abs/2505.16736

Outdoors (under cover, this is Brittany after all) with a bar and a food truck. Come along, it's going to be great!

I hope you like it! And don't hesitate to follow Blue Curl ;)

Cheers

But a lot of questions remain: this is for specific cases and weird algorithms not used in practice.

6/7

4/7

2/7

nkeriven.github.io/malaga/

(if you don't find the position for you but are interested in the topic anyway, contact me!)
