Ida Momennejad

@neuroai.bsky.social

Principal Researcher @ Microsoft Research.

AI, RL, cog neuro, philosophy.

www.momen-nejad.org

Great paper. Last author (Pratyusha) recently joined MSR NYC :)

October 29, 2025 at 6:50 PM

Finally, we're collecting human reasoning data on the same tasks to compare model-human algorithmic alignment.

A future direction is algorithmic training/finetuning, & self-improving models with algorithmic self-play: generating/evaluating compositional algorithmic solutions.

Thank you!

October 27, 2025 at 6:13 PM

Our algorithmic tracing & steering framework applies to any architecture (e.g., diffusion, multimodal) & domain, with implications for explainability/safety.

More detailed manifold analysis can capture the compositional geometry of primitives beyond linear composition & establish primitive libraries.

7/n

October 27, 2025 at 6:13 PM

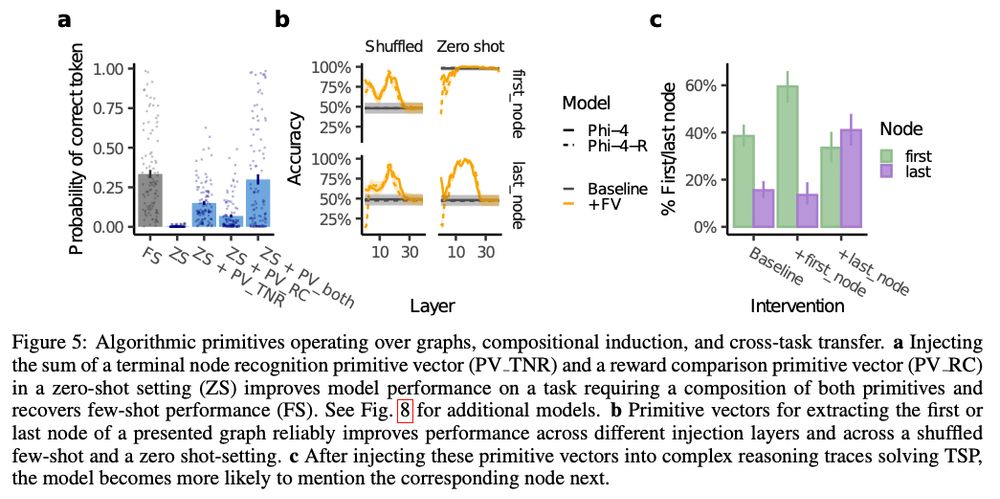

Importantly, we find that primitive vectors are compositional. Injecting a primitive vector into prompts with a misleading example recovers performance, and injecting two helpful primitives raises performance to the level achieved with multiple correct examples. The improvement is model-specific but robust across models.

6/n

October 27, 2025 at 6:13 PM
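
A minimal way to write the two-primitive injection in 6/n, assuming simple linear (additive) composition as hinted at in 7/n: at a chosen layer $\ell$, the hidden state $h_\ell$ receives a scaled sum of two primitive vectors. The symbols $v_{p_1}, v_{p_2}$ (primitive vectors) and $\alpha_1, \alpha_2$ (injection strengths) are our own notation for illustration, not the paper's.

$$
h_\ell' = h_\ell + \alpha_1 v_{p_1} + \alpha_2 v_{p_2}
$$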

We compared (dis)similarities of primitive use across tasks & models.

Comparing Phi-4 with its reasoning-finetuned counterpart, Phi-4-reasoning, shows that Phi-4 used more brute-force path-generation primitives, while Phi-4-R used primitives involving comparison & verification and achieved higher accuracy.

5/n

October 27, 2025 at 6:13 PM
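
A toy sketch of one way to quantify (dis)similarity of primitive use between two models: turn each model's sequence of detected primitive labels into a frequency profile and compare the profiles with cosine similarity. The metric, the label names, and the data are all assumptions for illustration; the post doesn't say which measure was used, and these numbers are not results from the paper.

```python
from collections import Counter
import math

def usage_profile(primitive_labels):
    """Normalize a sequence of detected primitive labels into a frequency distribution."""
    counts = Counter(primitive_labels)
    total = sum(counts.values())
    return {p: c / total for p, c in counts.items()}

def cosine_similarity(p, q):
    """Cosine similarity between two sparse usage profiles (dicts of primitive -> frequency)."""
    keys = set(p) | set(q)
    dot = sum(p.get(k, 0.0) * q.get(k, 0.0) for k in keys)
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    return dot / (norm_p * norm_q)

# Hypothetical primitive sequences for two models on the same task (illustrative only).
model_a = ["path_gen", "path_gen", "path_gen", "compare"]
model_b = ["path_gen", "compare", "verify", "verify"]
pa, pb = usage_profile(model_a), usage_profile(model_b)
print(round(cosine_similarity(pa, pb), 3))
```

Cosine similarity ignores overall trace length and focuses on the mix of primitives; a divergence measure over the same profiles would be an equally reasonable choice.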

4 tasks: Traveling Salesperson Problem (TSP), 3SAT, AIME, graph navigation.

We detect algorithmic primitives for each task by clustering latent neural activations & labeling their matched reasoning traces.

We track the evolution of each primitive across layers & tokens to identify transition patterns.

4/n

October 27, 2025 at 6:13 PM
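
A toy sketch of the detection step in 4/n, under stated assumptions: each reasoning-trace segment comes with one activation vector (plain Python lists here) and a label; the clustering method is a basic k-means (our choice, the post doesn't name one), and each cluster is named by the majority label of its matched trace segments. Function names and data are hypothetical.

```python
from collections import Counter
import math

def kmeans(vectors, k, iters=20):
    """Basic k-means; centroids initialised from the first k vectors (deterministic, for illustration)."""
    centroids = [list(v) for v in vectors[:k]]
    assign = [0] * len(vectors)
    for _ in range(iters):
        # Assign each activation vector to its nearest centroid (Euclidean distance).
        assign = [min(range(k), key=lambda c: math.dist(v, centroids[c])) for v in vectors]
        # Recompute each centroid as the mean of its members; keep the old one if a cluster empties.
        for c in range(k):
            members = [v for v, a in zip(vectors, assign) if a == c]
            if members:
                centroids[c] = [sum(xs) / len(members) for xs in zip(*members)]
    return assign, centroids

def label_clusters(assign, trace_labels):
    """Name each cluster by the most common label among its matched reasoning-trace segments."""
    by_cluster = {}
    for cluster, label in zip(assign, trace_labels):
        by_cluster.setdefault(cluster, Counter())[label] += 1
    return {c: counts.most_common(1)[0][0] for c, counts in by_cluster.items()}

# Hypothetical toy data: 2-D "activations" paired with labeled trace segments.
acts = [[0.0, 0.1], [5.0, 5.1], [0.2, 0.0], [5.2, 4.9], [0.1, 0.2], [4.8, 5.0]]
labels = ["compare", "verify", "compare", "verify", "compare", "verify"]
assign, _ = kmeans(acts, k=2)
print(label_clusters(assign, labels))  # {0: 'compare', 1: 'verify'}
```

Tracking a primitive across layers & tokens would repeat this per layer (or per token position) and follow how cluster assignments change.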

We validate primitives with causal interventions:

capture algorithmic primitives as function vectors in the latent space when performing a task,

inject the primitive vector into the residual stream of an LLM performing the same or a different task (transfer),

& measure its steering effect on the response.

3/n

October 27, 2025 at 6:13 PM
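
A toy sketch of the capture, inject, measure loop in 3/n, with short Python lists standing in for residual-stream activations. Assumptions: the primitive's vector is the mean activation at one layer over examples where the primitive is active, injection is plain addition scaled by `alpha`, and a linear readout stands in for measuring the response. The thread doesn't spell out these details, and all function names are hypothetical.

```python
def mean_vector(activations):
    """Average same-length activation vectors (toy stand-in for a primitive's function vector)."""
    n = len(activations)
    return [sum(xs) / n for xs in zip(*activations)]

def inject(hidden, vector, alpha=1.0):
    """Add a scaled primitive vector to a residual-stream hidden state: h' = h + alpha * v."""
    return [h + alpha * v for h, v in zip(hidden, vector)]

def readout(hidden, weights):
    """Hypothetical linear readout standing in for a downstream task score."""
    return sum(h * w for h, w in zip(hidden, weights))

# Hypothetical activations at one layer while the model performs a primitive.
primitive_acts = [[1.0, 0.0, 2.0], [3.0, 0.0, 0.0], [2.0, 0.0, 1.0]]
fv = mean_vector(primitive_acts)             # [2.0, 0.0, 1.0]

# Hidden state for a new (possibly different-task) prompt, and a toy readout direction.
h = [0.0, 1.0, 0.0]
w = [1.0, 0.0, 1.0]
before = readout(h, w)                        # 0.0
after = readout(inject(h, fv, alpha=0.5), w)  # 1.5
print(before, after)
```

In a real transformer the addition would be applied to one layer's hidden states during the forward pass (in PyTorch, for example, via a forward hook); the layer and scale are choices the thread leaves unspecified.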

How do latent representations & inference-time compute shape multi-step LLM solutions? We introduce a framework for tracing & steering algorithmic primitives that underlie multi-step tasks. We link "reasoning traces" (output) to internal activation patterns & validate with causal interventions.

2/n

October 27, 2025 at 6:13 PM

Seems like Sarah had good judgment not to reply. He'd have dried up a forest for simple texts.

October 12, 2025 at 12:46 PM

Beware of AI psychosis :) Turns out many people begin to develop messianic beliefs about themselves due to excessive “excellence” lol

October 9, 2025 at 3:48 PM

Awesome! Will check with colleagues and send an email! Hope we can find a date that works for everyone in person as I believe this is an important discussion.

October 9, 2025 at 2:39 PM

Excited about this, Ned! Would be great to have you give a talk about this at MSR some Tuesday :-)

October 8, 2025 at 3:45 PM

Perhaps it helps to think more concretely. The task decomposer proposes a subgoal. The specific subgoal is dependent on the task & you can also see the difference when it’s not used (ablation). Quite a well-known step in solving complex/hierarchical tasks across cognitive science & computer science.

October 7, 2025 at 1:02 PM

Thank you! @taylorwwebb.bsky.social and I will post a thread next week :-)

October 5, 2025 at 12:25 AM

Of your deriving the vector calculus. I assumed by hand, on a whiteboard or piece of paper or an iPad.

October 2, 2025 at 10:00 PM

Congrats! So well deserved.

October 2, 2025 at 9:14 PM

You should post screenshots!

October 2, 2025 at 9:13 PM

Right, the papers I shared are not physiology, but I think I see what you mean. Again, thanks for sharing your work.

September 27, 2025 at 7:38 PM
