Ida Momennejad

@neuroai.bsky.social

Principal Researcher @ Microsoft Research.

AI, RL, cog neuro, philosophy.

www.momen-nejad.org

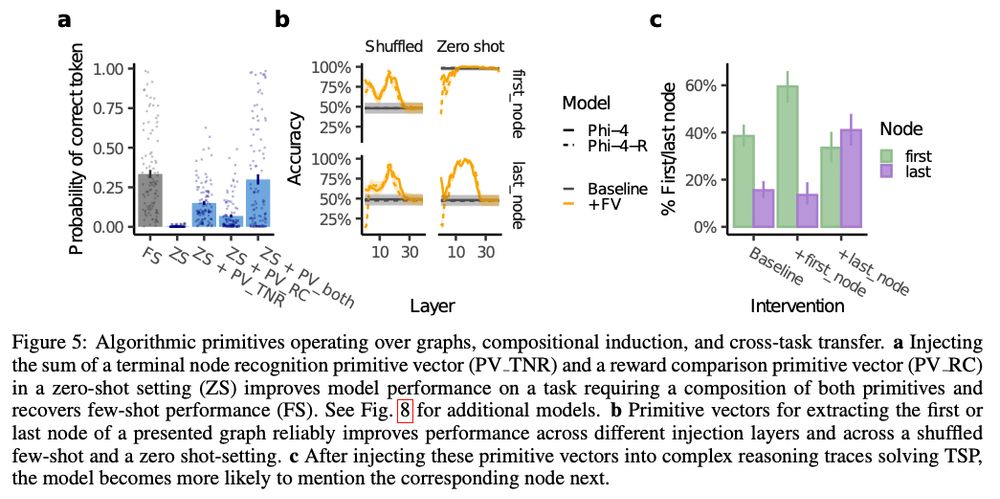

Importantly, we find that primitive vectors are compositional. Injecting a primitive vector into prompts with a misleading example recovers performance, and injecting two helpful primitives raises performance to the level of having multiple correct examples. The size of the improvement is model-specific, but the effect is robust across models.

6/n

October 27, 2025 at 6:13 PM

We compared (dis)similarities of primitive use across tasks & models.

Comparing Phi-4 with its reasoning-fine-tuned counterpart, Phi-4-reasoning (Phi-4-R), shows that Phi-4 used more brute-force path-generation primitives, while Phi-4-R used primitives involving comparison & verification & reached higher accuracy.

5/n

October 27, 2025 at 6:13 PM

4 tasks: Traveling Salesperson Problem (TSP), 3SAT, AIME, graph navigation.

We detect algorithmic primitives for each task by clustering latent neural activations & labeling their matched reasoning traces.

We track the evolution of each primitive across layers & tokens to identify transition patterns.

4/n

October 27, 2025 at 6:13 PM
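
The clustering step in the post above can be sketched roughly as follows. This is a minimal illustration on synthetic data, not the paper's pipeline: the thread does not say which clustering method, layer, or labels were used, so plain k-means, the `farthest_point_init` helper, the synthetic `activations`, and the `trace_tags` names are all assumptions.

```python
import numpy as np

def farthest_point_init(x, k):
    """Deterministic seeding: start at x[0], then repeatedly add the point
    farthest from every centroid chosen so far."""
    centroids = [x[0]]
    for _ in range(k - 1):
        d = np.min([((x - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(x[d.argmax()])
    return np.array(centroids)

def kmeans(x, k, n_iter=20):
    """Plain Lloyd's k-means on the rows of x; returns (labels, centroids)."""
    centroids = farthest_point_init(x, k)
    for _ in range(n_iter):
        # Squared distance from every point to every centroid, shape (n, k).
        d = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = d.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centroids[j] = x[labels == j].mean(axis=0)
    return labels, centroids

# Synthetic stand-in for latent activations: three well-separated groups.
rng = np.random.default_rng(0)
dim, per_cluster = 16, 40
true_centers = 10.0 * np.eye(dim)[:3]
true_ids = np.repeat(np.arange(3), per_cluster)
activations = true_centers[true_ids] + 0.3 * rng.normal(size=(3 * per_cluster, dim))

labels, centroids = kmeans(activations, k=3)

# Hypothetical tags from the matched reasoning traces; each cluster is
# named after the tag that occurs most often among its members.
trace_tags = np.array(["generate_path", "compare", "verify"])[true_ids]
cluster_names = {}
for j in range(3):
    tags, counts = np.unique(trace_tags[labels == j], return_counts=True)
    cluster_names[j] = str(tags[counts.argmax()])
```

With real activations one would replace `activations` with hidden states collected at a chosen layer and `trace_tags` with annotations of the corresponding reasoning steps.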

We validate primitives with causal interventions:

capture algorithmic primitives as function vectors in the latent space when performing a task,

inject the primitive vector into the residual stream of an LLM performing the same or a different task (transfer),

& measure the effect on steering response.

3/n

October 27, 2025 at 6:13 PM
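
The capture, inject, and measure loop described above can be illustrated with a toy residual-stream model. This is only a sketch under stated assumptions: the model is a random stack of residual layers rather than an LLM, a mean-difference vector stands in for the function vector (the paper's extraction procedure may differ), and `forward`, `task_prompts`, `baseline_prompts`, and `readout` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_layers = 32, 6
# Toy stand-in for a transformer: every layer adds an update to the residual stream.
weights = [rng.normal(scale=1.0 / np.sqrt(d_model), size=(d_model, d_model))
           for _ in range(n_layers)]
readout = rng.normal(size=(4, d_model))  # 4 hypothetical answer options

def forward(h0, inject=None, layer=None, alpha=1.0, capture_layer=None):
    """Run the toy model. Optionally add alpha * inject to the residual stream
    entering `layer`, and optionally return the state entering `capture_layer`."""
    h = h0.copy()
    captured = None
    for i, w in enumerate(weights):
        if inject is not None and i == layer:
            h = h + alpha * inject
        if i == capture_layer:
            captured = h.copy()
        h = h + 0.5 * np.tanh(h @ w.T)
    return h @ readout.T, captured

# 1) Capture: mean residual state on "task" inputs minus mean on baseline inputs.
layer = 3
task_prompts = rng.normal(loc=1.0, size=(64, d_model))
baseline_prompts = rng.normal(loc=0.0, size=(64, d_model))
_, task_states = forward(task_prompts, capture_layer=layer)
_, base_states = forward(baseline_prompts, capture_layer=layer)
primitive_vector = task_states.mean(axis=0) - base_states.mean(axis=0)

# 2) Inject into runs on different inputs, 3) measure the shift in the output logits.
test_prompts = rng.normal(size=(16, d_model))
plain_logits, _ = forward(test_prompts)
steered_logits, _ = forward(test_prompts, inject=primitive_vector, layer=layer)
effect = np.abs(steered_logits - plain_logits).mean()
```

Setting `alpha=0.0` gives back the unsteered logits, which is a useful sanity check before sweeping `alpha` or `layer`.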

Pleased to share new work with @sflippl.bsky.social @eberleoliver.bsky.social @thomasmcgee.bsky.social & undergrad interns at Institute for Pure and Applied Mathematics, UCLA.

Algorithmic Primitives and Compositional Geometry of Reasoning in Language Models

www.arxiv.org/pdf/2510.15987

🧵1/n

October 27, 2025 at 6:13 PM

This summer I have the great pleasure of working w incredible students!

Grads: Thomas McGee (UCLA), Sam Lippl (Columbia), Millie Preece (Cambridge),

undergrads: Salma, Kimberly, Ziwen, Pierce, from the RIPS program at IPAM (Institute for Pure & Applied Mathematics), UCLA.

Excited to share our new research soon📝.

August 25, 2025 at 2:46 PM

Adorable! Akiko says hi 🐾

August 19, 2025 at 9:03 PM


“The aim of science is not to open the door to infinite wisdom but to set a limit to infinite error.”

- Bertolt Brecht

The Life of Galileo

August 18, 2025 at 6:06 PM

1- The neuroscience & psychology chapters of Sutton & Barto 2nd edition.

full PDF: incompleteideas.net/book/RLbook2...

2- A Brief History of Intelligence. Not neuroAI, but popular among grad students at RLC & our MSR interns as a deep conceptual introduction. www.abriefhistoryofintelligence.com/book

July 17, 2025 at 8:28 PM

Let’s not forget Lashley thought he could remove his frontal cortex and nothing would go wrong. Much grain of salt required when reading his work.

languagelog.ldc.upenn.edu/myl/Lashley1...

July 6, 2025 at 12:57 PM

Thank you, means a lot coming from you!

Gridworlds are common in tabular RL: you can turn most tasks (spatial navigation, social planning, etc.) into gridworlds. The cells become the task states, which can carry reward, & the agent takes actions to transition between/navigate them.

www.nature.com/articles/s41...

July 3, 2025 at 2:43 PM
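
As a rough illustration of the gridworld idea above, here is a minimal tabular sketch: cells are states, one cell carries reward, and actions move the agent between cells. The 3x3 layout, the goal location, the discount, and the use of value iteration are assumptions chosen for illustration; they are not taken from the linked paper.

```python
import numpy as np

n_rows, n_cols = 3, 3
goal = (2, 2)   # hypothetical rewarded cell
gamma = 0.9     # discount factor
actions = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

def step(state, action):
    """Deterministic transition; moving off the grid leaves the agent in place.
    Entering the goal cell yields reward 1, everything else yields 0."""
    r, c = state
    dr, dc = actions[action]
    nr, nc = r + dr, c + dc
    if not (0 <= nr < n_rows and 0 <= nc < n_cols):
        nr, nc = r, c
    next_state = (nr, nc)
    reward = 1.0 if next_state == goal else 0.0
    return next_state, reward

# Value iteration over the table of cell values.
values = np.zeros((n_rows, n_cols))
for _ in range(50):
    new_values = np.zeros_like(values)
    for r in range(n_rows):
        for c in range(n_cols):
            if (r, c) == goal:
                continue  # the goal is terminal, so its value stays 0
            new_values[r, c] = max(
                reward + gamma * values[next_state]
                for next_state, reward in (step((r, c), a) for a in actions)
            )
    values = new_values

def greedy_action(state):
    """Action with the highest one-step lookahead value."""
    def lookahead(a):
        next_state, reward = step(state, a)
        return reward + gamma * values[next_state]
    return max(actions, key=lookahead)
```

The same table structure works for non-spatial tasks: only `step` and the reward assignment need to change.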

I've been building a model of how intrusive thoughts can warp cognitive maps (world model) in PTSD & derail goal-directed behavior. It combines successor representation & prioritized replay. I started off with a grid world, then embeddings. Today in a story recall task, reading the text was moving:

July 2, 2025 at 6:48 PM
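
For readers unfamiliar with the successor representation (SR) mentioned above, a minimal sketch of its standard closed form follows. It is not the model described in the post: the 5-state ring, the random-walk policy, and the reward placement are assumptions, and prioritized replay is not implemented here.

```python
import numpy as np

n_states, gamma = 5, 0.9

# Hypothetical random-walk policy on a ring of 5 states:
# from each state, step left or right with equal probability.
T = np.zeros((n_states, n_states))
for s in range(n_states):
    T[s, (s - 1) % n_states] = 0.5
    T[s, (s + 1) % n_states] = 0.5

# SR: expected discounted future occupancy of each state,
# M = I + gamma*T + gamma^2*T^2 + ... = (I - gamma*T)^(-1).
M = np.linalg.inv(np.eye(n_states) - gamma * T)

# Values follow from the SR and a reward vector: V = M r.
reward = np.zeros(n_states)
reward[3] = 1.0
V = M @ reward
```

Because the SR separates the transition structure (`M`) from reward, changing `reward` re-values every state without relearning `M`, which is what makes it a convenient model of a cognitive map.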

“WE THE PEOPLES OF THE UNITED NATIONS DETERMINED

to save succeeding generations from the scourge of war,

which twice in our lifetime has brought untold sorrow to mankind, &

to reaffirm faith in fundamental human rights, in the dignity & worth of the human person, in the equal rights of men & women”

June 21, 2025 at 1:51 AM

This research could have impact beyond understanding: toward more efficient & lower-emission training & fine-tuning, & toward designing novel architectures with algorithms in mind.

Very grateful to coauthors & UCLA's Institute for Pure & Applied Mathematics, where we met.

n/n🧵

openreview.net/forum?id=eax...

June 20, 2025 at 3:48 PM

We analyzed latent representations of room/node tokens described in the prompt & plotted the separation of room representations across layers (Llama 3 8B & 70B).

At layer 0 everything is similar, but the representations gradually diverge: goal W/subgoal Q closest to goal & competing room Q separated the furthest.

5/n

June 20, 2025 at 3:48 PM
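
The layer-wise separation analysis above can be sketched as follows. The hidden states here are synthetic and constructed to mimic the qualitative pattern in the post (identical at layer 0, then diverging); they are not Llama 3 activations, and cosine distance, the token names, and `separation_by_layer` are assumptions rather than the paper's exact measure.

```python
import numpy as np

def cosine_distance(a, b):
    """1 - cosine similarity between two vectors."""
    return 1.0 - (a @ b) / (np.linalg.norm(a) * np.linalg.norm(b))

rng = np.random.default_rng(0)
n_layers, d_model = 8, 64
base = rng.normal(size=d_model)

# Synthetic drift directions: the subgoal drifts almost like the goal,
# while the competing room drifts in an unrelated direction.
goal_dir = rng.normal(size=d_model)
directions = {
    "goal": goal_dir,
    "subgoal": goal_dir + 0.3 * rng.normal(size=d_model),
    "competing": rng.normal(size=d_model),
}

# hidden[token][layer]: every token starts from the same vector at layer 0.
hidden = {
    tok: np.stack([base + 0.3 * layer * d for layer in range(n_layers)])
    for tok, d in directions.items()
}

def separation_by_layer(tok_a, tok_b):
    """Cosine distance between two tokens' representations at every layer."""
    return np.array([
        cosine_distance(hidden[tok_a][layer], hidden[tok_b][layer])
        for layer in range(n_layers)
    ])

goal_vs_subgoal = separation_by_layer("goal", "subgoal")
goal_vs_competing = separation_by_layer("goal", "competing")
```

Plotting the two arrays against layer index gives the kind of divergence curve the post describes; with a real model, `hidden` would hold the residual-stream states of the room tokens.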

In a planning task (2-step tree) we extracted attention heatmaps across the layers from the goal token to the correct & incorrect paths.

Results don't match BF or DF search. Attention is mostly to the goal location M, followed by nodes on the correct path, more like backward planning from the goal.

4/n

June 20, 2025 at 3:48 PM

Case study: navigation on deterministic graphs, a task LLMs can't do well (our NeurIPS 2023 paper).

AlgEval

a) identifies algorithmic hypotheses, e.g. BFS, DFS

b) uses neuroscience/mech interp methods to test them, e.g. does the sequence of attended/activated tokens along the layers fit a hypothesis?

3/n

June 20, 2025 at 3:48 PM
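
Steps a) and b) above can be sketched as a simple hypothesis comparison: generate the node order each candidate algorithm would visit, then score how well an observed order agrees with it. The graph, the `observed_order`, and the Spearman-style `rank_agreement` score are hypothetical; the paper may test hypotheses differently.

```python
from collections import deque

# Small hypothetical tree: A is the start, G plays the role of the goal.
graph = {"A": ["B", "C"], "B": ["D", "E"], "C": ["F", "G"],
         "D": [], "E": [], "F": [], "G": []}

def bfs_order(start):
    """Order in which breadth-first search visits nodes."""
    order, queue, seen = [], deque([start]), {start}
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return order

def dfs_order(start):
    """Order in which (preorder) depth-first search visits nodes."""
    order, stack, seen = [], [start], set()
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        stack.extend(reversed(graph[node]))
    return order

def rank_agreement(order_a, order_b):
    """Spearman rank correlation between two orderings of the same nodes."""
    pos_b = {node: i for i, node in enumerate(order_b)}
    n = len(order_a)
    d2 = sum((i - pos_b[node]) ** 2 for i, node in enumerate(order_a))
    return 1.0 - 6.0 * d2 / (n * (n ** 2 - 1))

# Hypothetical order of most-attended tokens across layers (made up for
# illustration): goal first, then nodes leading back toward the start.
observed_order = ["G", "C", "A", "F", "B", "D", "E"]

scores = {
    "BFS": rank_agreement(observed_order, bfs_order("A")),
    "DFS": rank_agreement(observed_order, dfs_order("A")),
}
```

A low score for every forward-search hypothesis, as in this made-up example, is the kind of evidence that would point toward a different algorithm such as backward planning from the goal.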

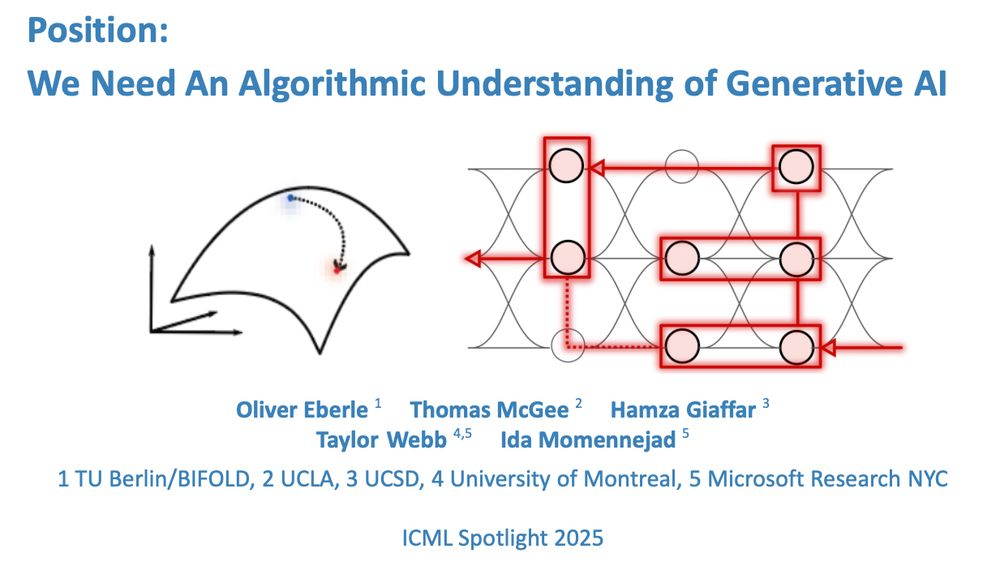

Our position paper argues that the ML community should prioritize research on algorithmic understanding of generative AI. Scaling is not enough.

We propose AlgEval: A methodological path to algorithmic evaluation, bringing cognitive computational neuroscience theory/methods to interpretability.

2/n

June 20, 2025 at 3:48 PM

Pleased to share our ICML Spotlight with @eberleoliver.bsky.social, Thomas McGee, Hamza Giaffar, @taylorwwebb.bsky.social.

Position: We Need An Algorithmic Understanding of Generative AI

What algorithms do LLMs actually learn and use to solve problems?🧵1/n

openreview.net/forum?id=eax...

June 20, 2025 at 3:48 PM

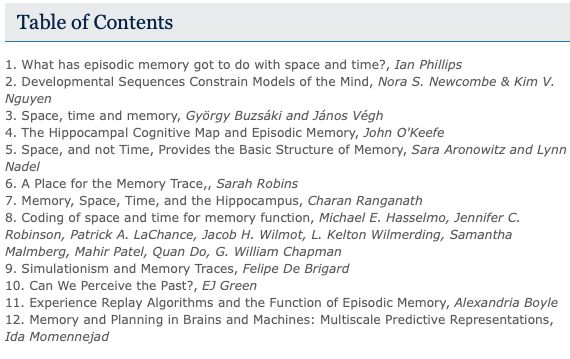

Pleased to say "Space, Time, and Memory", an academic book by Oxford University Press edited by the inimitable Lynn Nadel & Sara Aronowitz is now out.

I contributed a chapter, "Memory and Planning in Brains and Machines".

You can download the entire book for free:

library.oapen.org/bitstream/ha...

June 9, 2025 at 8:48 PM

See below! The third one is when I asked for a ref with prompt “ref”

May 10, 2025 at 1:31 PM

a) Serious failure modes getting worse --> the promise of scale didn't deliver. Inaccuracy a major issue any application should take seriously & why big tech invests in new architectures.

b) our NeurIPS 2023 paper shows how hallucinations impair reasoning on simple MDPs in 8 LLMs

c) helpful but not novel

May 8, 2025 at 8:13 PM

a) then you disagree with OpenAI's own report.

o3 hallucinated on 33% of known benchmark tasks, o4-mini on 48%, o1 on 16%. See below.

b) if foundation models are used to make any predictions then hallucinations are a serious limitation

c) not a paradigm shift then

cdn.openai.com/pdf/2221c875...

May 8, 2025 at 7:48 PM

Yesss! I spend so much time learning her language my partner calls me a dog whisperer! What a privilege.

May 2, 2025 at 5:27 PM
