home.msbstats.info

(He/Him)

Also - grade (and degree!) inflation is crazy.

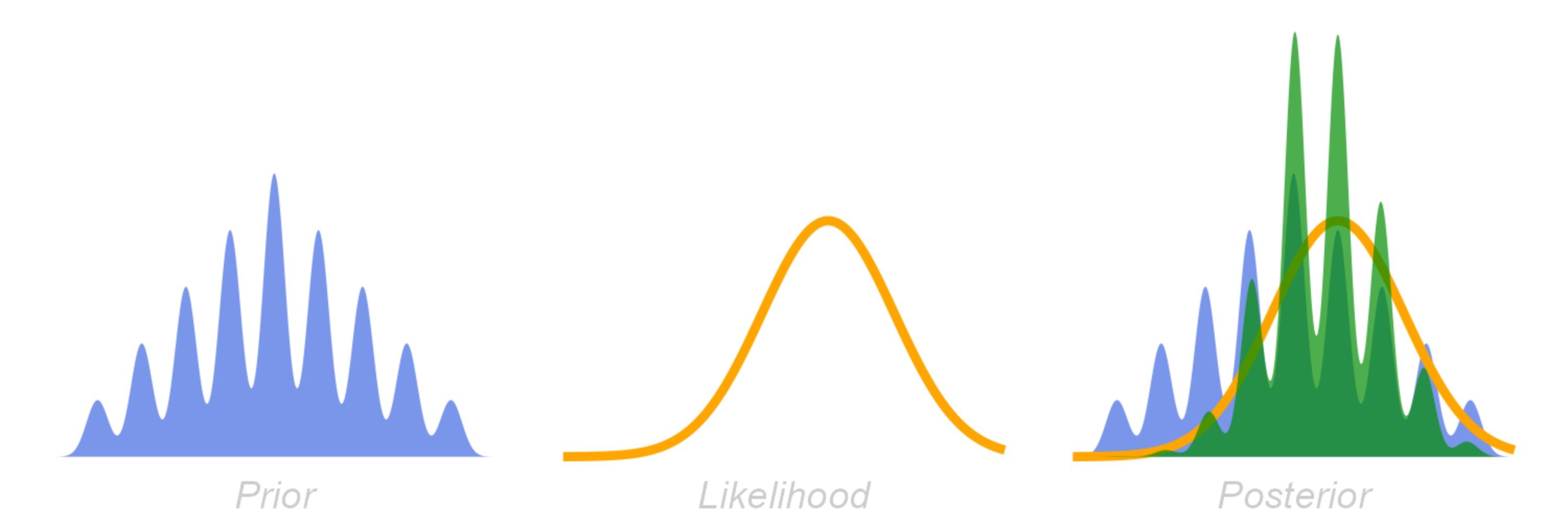

I like spike-and-slab priors (or weighted posteriors) for the same reason! There's something really satisfying about forcing a model comparison problem into an estimation problem (I think people tend to go the other way).
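To make the "model comparison as estimation" idea concrete, here is a minimal sketch for a toy case that is my own assumption, not something from the post: a normal mean with known `sigma`, a point-mass spike at zero, and a `N(0, tau^2)` slab. The function name and all defaults (`sigma`, `tau`, `w`) are hypothetical choices for illustration.

```python
import math


def normal_pdf(x, var):
    """Density of N(0, var) evaluated at x."""
    return math.exp(-0.5 * x * x / var) / math.sqrt(2 * math.pi * var)


def spike_and_slab_posterior(ybar, n, sigma=1.0, tau=1.0, w=0.5):
    """Posterior for theta under a spike-and-slab prior (illustrative toy model).

    Assumed model (not from the original post):
      y_i ~ N(theta, sigma^2), sigma known
      theta = 0           with prior probability 1 - w  (spike)
      theta ~ N(0, tau^2) with prior probability w      (slab)
    Returns (posterior probability of the slab, posterior mean of theta).
    """
    se2 = sigma**2 / n                       # sampling variance of the mean
    m_spike = normal_pdf(ybar, se2)          # marginal likelihood under the spike
    m_slab = normal_pdf(ybar, tau**2 + se2)  # marginal likelihood under the slab
    p_slab = w * m_slab / (w * m_slab + (1 - w) * m_spike)
    shrink = tau**2 / (tau**2 + se2)         # conjugate shrinkage within the slab
    post_mean = p_slab * shrink * ybar       # the spike contributes exactly 0
    return p_slab, post_mean


p_slab, post_mean = spike_and_slab_posterior(ybar=1.0, n=25)
print(f"P(slab | data) = {p_slab:.4f}, E[theta | data] = {post_mean:.4f}")
```

The ratio `m_slab / m_spike` is the Bayes factor you would report in a model comparison; here it only shows up as a mixing weight inside a single posterior for `theta`.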

If we write the joint probability as:

p(data | parameters, model) p(parameters | model) p(model)

I would say the first term is the likelihood and both the second and third terms are the prior,
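Written out with symbols (the names $y$ for data, $\theta$ for parameters, and $M$ for model are my shorthand, not notation from the post), the factorization above reads roughly as:

$$
p(y, \theta, M) \;=\; \underbrace{p(y \mid \theta, M)}_{\text{likelihood}} \; \underbrace{p(\theta \mid M)\, p(M)}_{\text{prior on } (\theta,\, M)}
$$

On that reading, the last two terms multiply to a single joint prior $p(\theta, M)$, and the posterior is $p(\theta, M \mid y) \propto p(y \mid \theta, M)\, p(\theta, M)$.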

discourse.mc-stan.org/t/understand...

I'm not against all uses of NHST, but if it's between using it how it's being used and not using it at all, I'd prefer the latter 🤷♂️
