lisaalaz.github.io

LLM agents performing real-world tasks should be able to combine these different types of reasoning, but are they fit for the job? 🤔

🧵⬇️

We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

Check out the poster presentation on Sunday 27th April in Singapore!

Check it out at youtu.be/DL7qwmWWk88?...

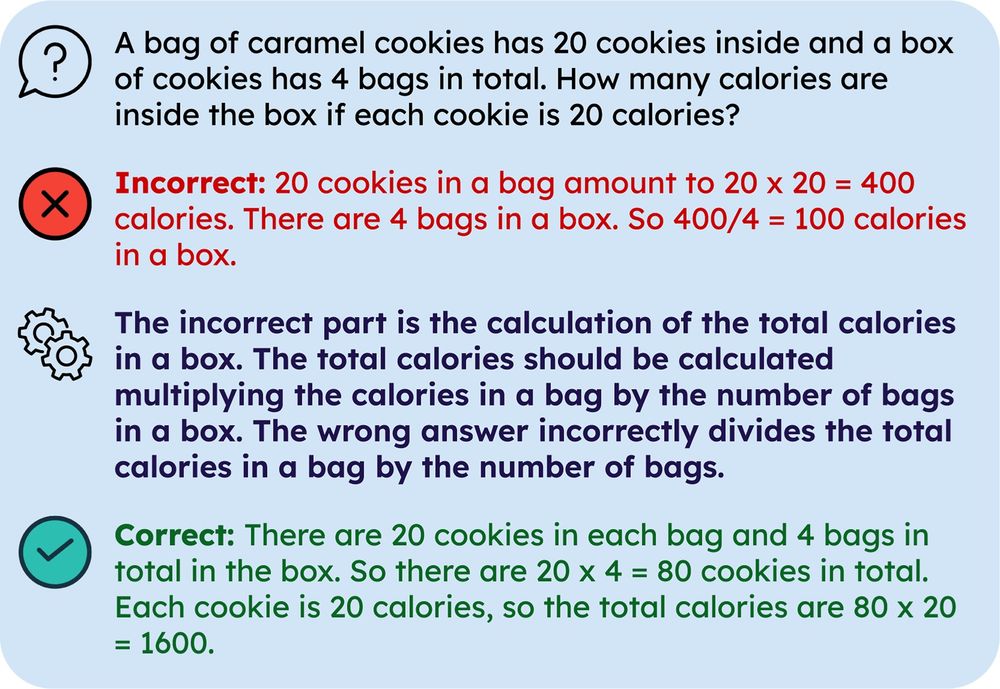

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help; in fact, they hurt performance!

🧵

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

storage.googleapis.com/deepmind-med...

(also soon to be up on arXiv, once it's been processed there)

US: apply.workable.com/huggingface/...

EMEA: apply.workable.com/huggingface/...

go.bsky.app/LUrLWXe

#LLMAgents #LLMReasoning

I am PhD-ing at Imperial College under @marekrei.bsky.social’s supervision. I am broadly interested in LLM/LVLM reasoning & planning 🤖 (here’s our latest work arxiv.org/abs/2411.04535)

Do reach out if you are interested in these (or related) topics!

To follow us all, click 'follow all' in the starter pack below

go.bsky.app/Bv5thAb

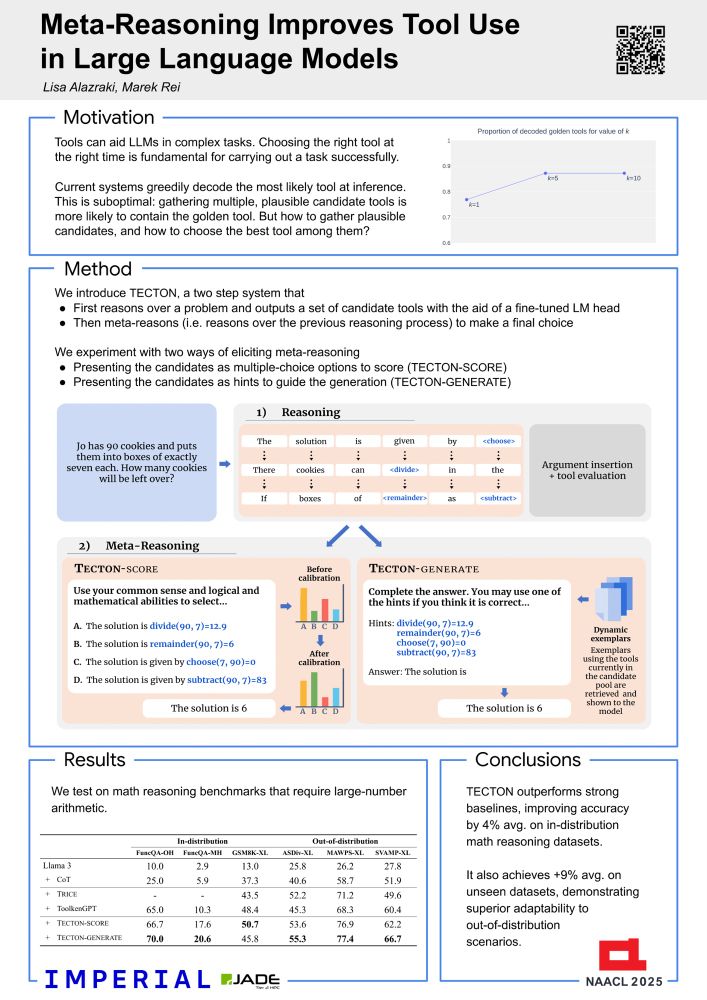

Meta-Reasoning Improves Tool Use in Large Language Models

https://arxiv.org/abs/2411.04535

go.bsky.app/JgneRQk
