My co-authors and I will also be at ICLR presenting this work, so feel free to come and chat with us about SymDiff!

(8/9)

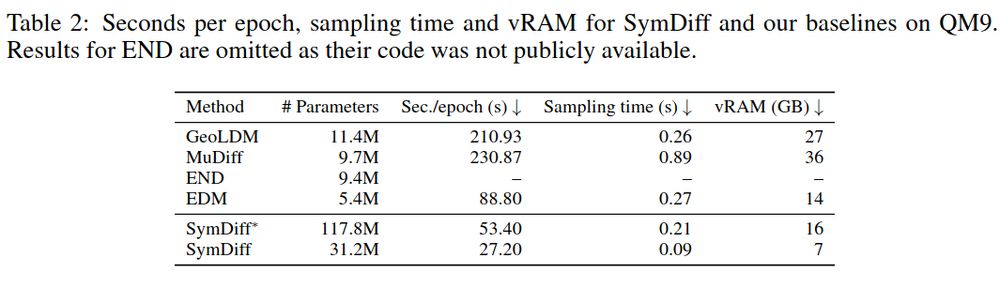

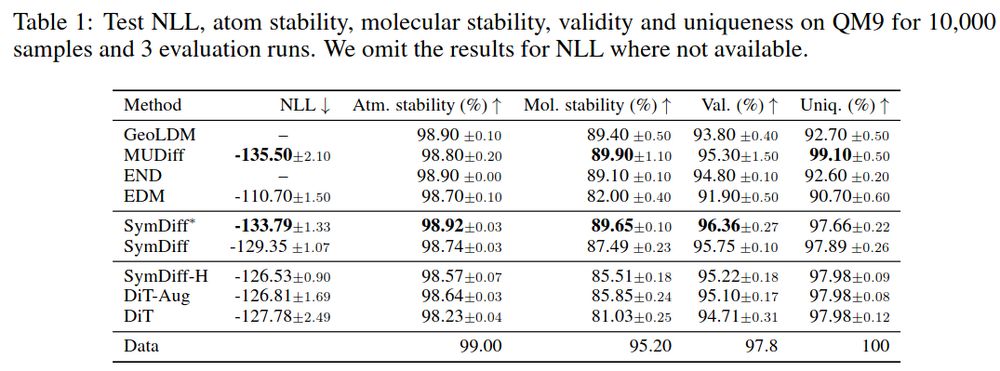

We take the basic molecule-generation framework of EDM (Hoogeboom et al. 2022) and use SymDiff with Diffusion Transformers as a drop-in replacement for the EGNN used in its reverse process.
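
To make "drop-in replacement" concrete, here is a minimal NumPy sketch of a single DDPM-style ancestral reverse step on atom positions. The noise-prediction network is evaluated in a randomly rotated frame and its output is rotated back. The following are all illustrative assumptions, not details from the paper: positions only (no atom features), a toy unconstrained MLP `eps_net` standing in for the Diffusion Transformer, rotations drawn uniformly (Haar) from SO(3), the variance choice σ_t² = β_t, and hypothetical helper names. The zero-centre-of-mass projection mirrors EDM's treatment of positions.

```python
import numpy as np


def random_rotation(rng: np.random.Generator) -> np.ndarray:
    """Sample a 3x3 rotation uniformly (Haar measure) from SO(3)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))  # fix QR sign ambiguity -> Haar on O(3)
    if np.linalg.det(q) < 0:     # flip one axis so that det(q) = +1
        q[:, 0] = -q[:, 0]
    return q


def remove_com(x: np.ndarray) -> np.ndarray:
    """Project (n_atoms, 3) positions onto the zero centre-of-mass subspace."""
    return x - x.mean(axis=0, keepdims=True)


def symmetrised_eps(eps_net, z_t, t, rng):
    """Evaluate an unconstrained noise predictor in a random frame, then rotate back."""
    R = random_rotation(rng)
    # Rows are atoms: z_t @ R applies R^T to each position, and @ R.T applies R.
    return remove_com(eps_net(z_t @ R, t) @ R.T)


def reverse_step(eps_net, z_t, t, betas, rng):
    """One DDPM ancestral step z_t -> z_{t-1}, using sigma_t^2 = beta_t."""
    alphas = 1.0 - betas
    alpha_bar = np.cumprod(alphas)
    eps_hat = symmetrised_eps(eps_net, z_t, t, rng)
    mean = (z_t - betas[t] / np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alphas[t])
    if t == 0:
        return mean
    noise = remove_com(rng.standard_normal(z_t.shape))
    return mean + np.sqrt(betas[t]) * noise


# Toy usage: a random, non-equivariant MLP stands in for the Diffusion Transformer.
rng = np.random.default_rng(0)
W1, W2 = rng.standard_normal((3, 16)), rng.standard_normal((16, 3))


def eps_net(x, t):
    return np.tanh(x @ W1 + t / 50.0) @ W2


betas = np.linspace(1e-4, 0.02, 50)
z = remove_com(rng.standard_normal((6, 3)))  # start from zero-CoM Gaussian noise
for t in reversed(range(len(betas))):
    z = reverse_step(eps_net, z, t, betas, rng)
```

Because the added Gaussian noise is isotropic, rotating the whole kernel sample gives the same distribution as rotating only the predicted noise. That is why only `eps_net` is wrapped in this sketch.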

✅ We also sketch how to extend SymDiff to score-based and flow-based models

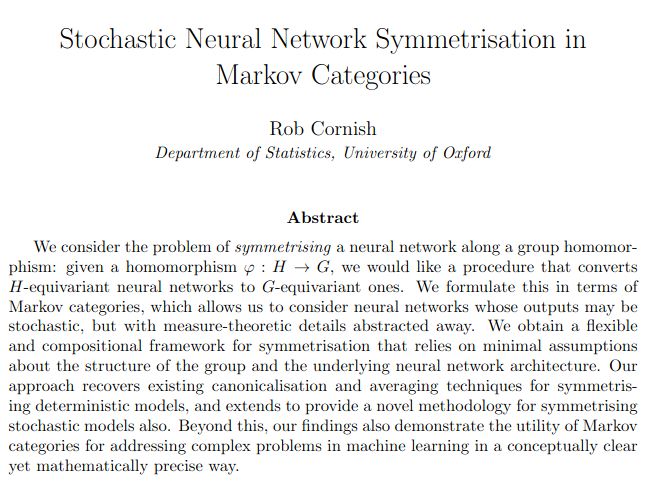

✅ We overcome previous issues with symmetrisation, namely the pathologies of canonicalisation and the computational cost and errors involved in evaluating frame averaging/probabilistic symmetrisation

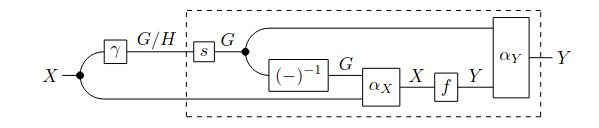

Our key idea is to "symmetrise" the reverse kernels of a diffusion model, so that the model is equivariant even when built on unconstrained architectures
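
A minimal sketch of the symmetrisation idea follows. It assumes the group is SO(3) acting on point clouds and that the group element is sampled uniformly (Haar); SymDiff's actual distribution over group elements may differ. An unconstrained function `f` is evaluated in a randomly rotated frame and its output is rotated back, which costs a single forward pass per sample rather than an average over many group elements. The names `random_rotation`, `symmetrised_apply` and `stochastic_symmetrise` are hypothetical and used only for illustration.

```python
import numpy as np


def random_rotation(rng: np.random.Generator) -> np.ndarray:
    """Sample a 3x3 rotation uniformly (Haar measure) from SO(3)."""
    q, r = np.linalg.qr(rng.standard_normal((3, 3)))
    q = q * np.sign(np.diag(r))  # fix QR sign ambiguity -> Haar on O(3)
    if np.linalg.det(q) < 0:     # flip one axis so that det(q) = +1
        q[:, 0] = -q[:, 0]
    return q


def symmetrised_apply(f, x: np.ndarray, R: np.ndarray) -> np.ndarray:
    """Return R . f(R^{-1} . x) for an (n_points, 3) point cloud x."""
    # Rows are points, so x @ R applies R^T = R^{-1}, and y @ R.T applies R.
    return f(x @ R) @ R.T


def stochastic_symmetrise(f, x: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Draw one group element and apply f in that frame (one forward pass)."""
    return symmetrised_apply(f, x, random_rotation(rng))


# Toy usage with an unconstrained (non-equivariant) map f.
rng = np.random.default_rng(0)
W1, W2 = rng.standard_normal((3, 16)), rng.standard_normal((16, 3))


def f(x):
    return np.tanh(x @ W1) @ W2


x = rng.standard_normal((5, 3))
sample = stochastic_symmetrise(f, x, rng)
```

For any fixed rotation H, applying the map to the rotated input `x @ H.T` with frame R gives the same result as applying it to `x` with frame `H.T @ R` and then rotating by H. Since `H.T @ R` is Haar-distributed whenever R is, rotating the input by H rotates the output distribution by H. The sampled map is therefore equivariant in distribution, even though `f` itself is not.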

📄Paper link: arxiv.org/abs/2410.06262