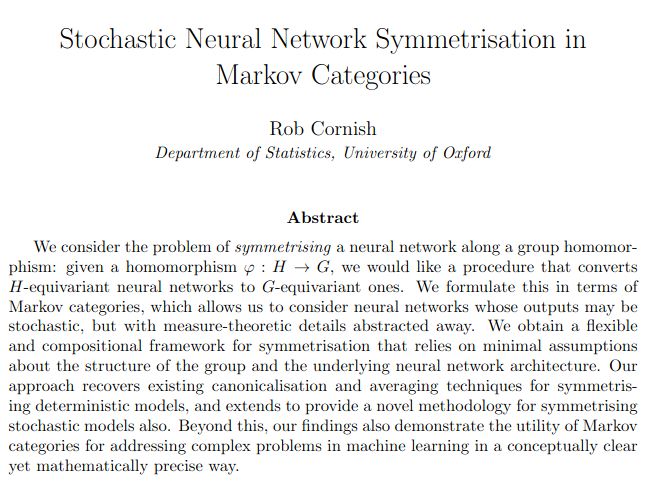

(8/9)

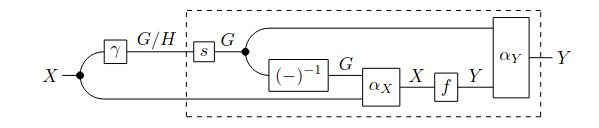

This lets us ensure E(3)-equivariance using only highly scalable standard architectures such as Diffusion Transformers, rather than EGNNs, for molecular generation.

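For readers who want to see what the E(3)-equivariance property means in practice, here is a minimal numerical check, not the method from this thread. It assumes a model `f` that maps atom coordinates of shape `(N, 3)` to updated coordinates of the same shape, and tests whether `f(xQ + t) = f(x)Q + t` for random orthogonal `Q` and translations `t`. The names `random_orthogonal`, `is_e3_equivariant`, and `centroid_update` are hypothetical and used only for illustration.

```python
import numpy as np

def random_orthogonal(rng):
    # QR of a Gaussian matrix yields an orthogonal Q (a rotation or a reflection).
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    return q

def is_e3_equivariant(f, x, trials=10, atol=1e-6, seed=0):
    # Checks f(x Q + t) == f(x) Q + t for several random (Q, t) pairs.
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        q = random_orthogonal(rng)
        t = rng.normal(size=(1, 3))
        transformed_then_mapped = f(x @ q + t)
        mapped_then_transformed = f(x) @ q + t
        if not np.allclose(transformed_then_mapped, mapped_then_transformed, atol=atol):
            return False
    return True

def centroid_update(x):
    # Toy equivariant map (an assumption for illustration): push atoms away from the centroid.
    return x + 0.5 * (x - x.mean(axis=0, keepdims=True))

# Five random "atoms" in 3D.
coords = np.random.default_rng(1).normal(size=(5, 3))

equivariant_ok = is_e3_equivariant(centroid_update, coords)
tanh_ok = is_e3_equivariant(np.tanh, coords)  # elementwise tanh is not equivariant
```

Here `centroid_update` passes the check, while an elementwise nonlinearity such as `np.tanh` applied directly to coordinates fails it. A denoiser that predicts noise or velocities instead of positions would be checked against rotation only, without the `+ t` term on the output.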