Come chat with us. We would love to discuss implications for AI policy, better auditing methods, and next steps for algorithmic fairness research.

#AIFairness #XAI

Current explanation-based auditing is, therefore, fundamentally flawed, and we need additional safeguards.

GDPR ✓ Digital Services Act ✓ Algorithmic Accountability Act ✓ LGPD (Brazil) ✓

1️⃣ Explanations target individual predictions, while discrimination operates on groups

2️⃣ Users’ causal models are flawed

3️⃣ Users overestimate proxy strength and treat its presence in the explanation as discrimination

4️⃣ Feature-outcome relationships bias user claims

When participants flag discrimination, they are correct ~50% of the time. They also miss 55% of the discriminatory predictions and have a 30% false positive rate (FPR).

Giving participants additional knowledge (which attribute is protected, how strong the proxies are) raises the detection rate to roughly 60% without affecting the other measures.
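The three rates above come from a standard confusion matrix over flagging decisions. The sketch below shows how they relate. The counts in it are hypothetical, chosen only so the reported rates can be reproduced, and are not data from the study.

```python
def flagging_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Summarise how well discrimination flags match ground truth.

    tp: discriminatory predictions that were flagged
    fp: fair predictions that were flagged
    fn: discriminatory predictions that were missed
    tn: fair predictions that were not flagged
    """
    return {
        "precision": tp / (tp + fp),  # share of flags that are correct
        "recall": tp / (tp + fn),     # share of discriminatory predictions caught
        "miss_rate": fn / (tp + fn),  # 1 - recall
        "fpr": fp / (fp + tn),        # share of fair predictions wrongly flagged
    }


# Hypothetical counts, NOT study data: 100 discriminatory and 150 fair predictions.
m = flagging_metrics(tp=45, fp=45, fn=55, tn=105)
print({k: round(v, 2) for k, v in m.items()})
# → precision 0.5, recall 0.45, miss_rate 0.55, fpr 0.3
```

With these made-up counts, 40% of the predictions are discriminatory. Under that assumed mix, ~50% precision, a 55% miss rate, and a 30% FPR are mutually consistent. A different share of discriminatory predictions would need different counts.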

So, how did they do?

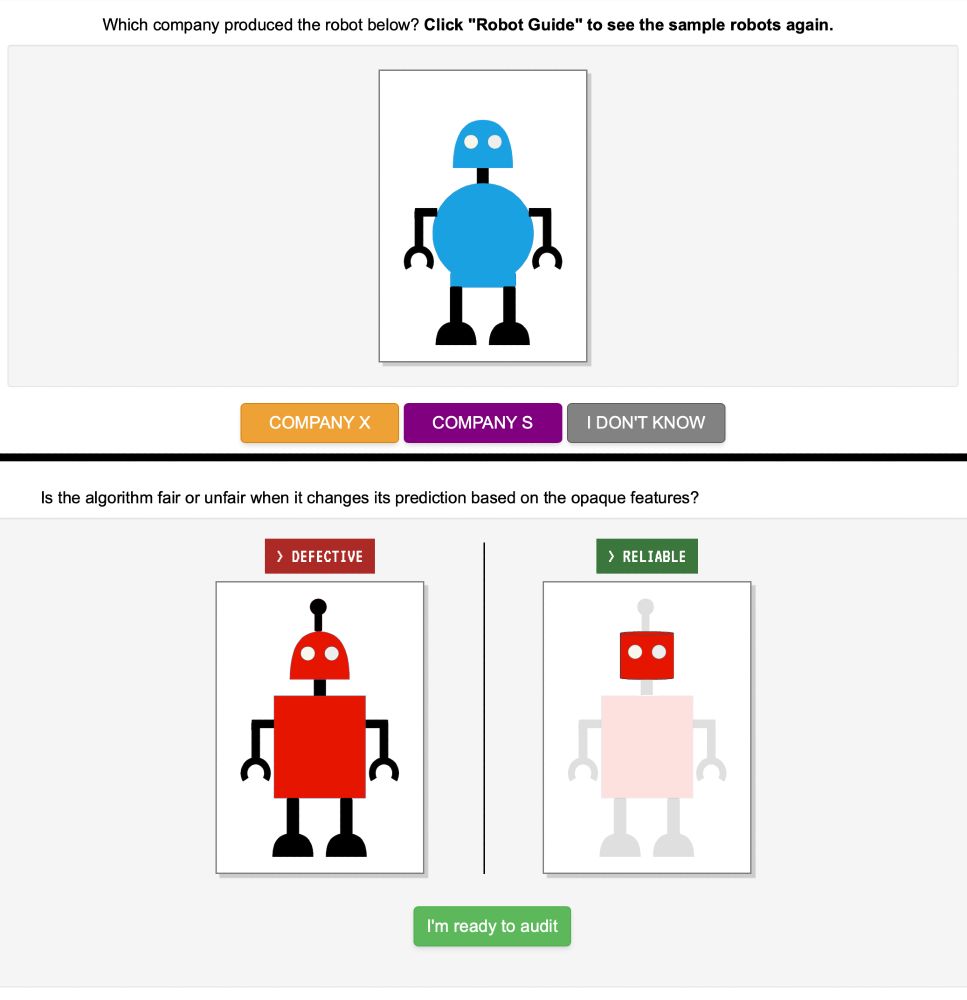

We then measured how well they could flag unfair predictions using counterfactual explanations and feature attribution scores.

Our model predicts whether a robot will fail based on its body parts. It can discriminate against Company X by predicting that robots without an antenna fail.
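The proxy mechanism can be sketched as a counterfactual check. This minimal sketch makes three hypothetical assumptions: Company X never fits antennas, other companies always do, and the model flags every antenna-less robot as failing. The names `build_robot`, `model_predicts_failure`, and `is_discriminatory`, and the comparison company `"Y"`, are illustrative and not part of the study.

```python
def build_robot(company: str, has_wheels: int) -> dict[str, int]:
    """Hypothetical causal assumption: the company decides whether an antenna is fitted."""
    return {"has_antenna": 0 if company == "X" else 1, "has_wheels": has_wheels}


def model_predicts_failure(robot: dict[str, int]) -> bool:
    # The model only looks at body parts; `company` is never an input.
    return robot["has_antenna"] == 0


def is_discriminatory(company: str, has_wheels: int, other_company: str = "Y") -> bool:
    """Would the prediction change if the same robot had been built by another company?"""
    factual = model_predicts_failure(build_robot(company, has_wheels))
    # Rebuild the robot under the other company so its proxy (the antenna) changes too.
    counterfactual = model_predicts_failure(build_robot(other_company, has_wheels))
    return factual != counterfactual


print(is_discriminatory("X", has_wheels=1))  # True
print(is_discriminatory("Z", has_wheels=1))  # False
```

Because `company` is never a model input, relabelling the company without rebuilding the robot would leave the prediction unchanged. The discrimination only shows up when the counterfactual lets the proxy change along with the protected attribute.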

To tackle this challenge, we introduce a synthetic task where we:

- Teach users how to use explanations

- Control their beliefs

- Adapt the world to fit their beliefs

- Control the explanation content

- Proxies not being revealed by explanations

- Issues with interpreting explanations

- Wrong assumptions about proxy strength

- Unknown protected class

- Incorrect causal beliefs

Would a rejected female applicant get approved if she somehow applied as a man?

If yes, her prediction was discriminatory.

Fairness requires predictions to stay the same regardless of the protected class.
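One way to write this criterion formally assumes a deterministic classifier $f$ and a causal model in which changing the protected attribute $A$ also changes the features it influences (its proxies):

$$
f\big(X_{A \leftarrow a}(u)\big) = f\big(X_{A \leftarrow a'}(u)\big) \quad \text{for every value } a' \text{ of } A
$$

Here $u$ stands for everything about the individual that the protected attribute does not cause. $X_{A \leftarrow a'}(u)$ is the feature vector they would have had with protected class $a'$. In the example, $a$ is female and $a'$ is male, so a rejection under $a$ that becomes an approval under $a'$ breaks the equality.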
