GDPR ✓ Digital Services Act ✓ Algorithmic Accountability Act ✓ LGPD (Brazil) ✓

When participants flag discrimination, they are correct ~50% of the time, miss 55% of the discriminatory predictions, and still have a 30% false positive rate (FPR).

Additional knowledge (protected attributes, proxy strength) improves the detection to roughly 60% without affecting other measures.
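
To make these three numbers concrete, here is a minimal sketch of how they could be computed from participants' flags and ground-truth labels. The function name `flag_metrics` and the toy data are illustrative assumptions, not the study's code or data.

```python
def flag_metrics(flagged, discriminatory):
    """Compare participant flags against ground truth.

    flagged[i]        -- True if the participant flagged prediction i
    discriminatory[i] -- True if prediction i really was discriminatory
    """
    pairs = list(zip(flagged, discriminatory))
    tp = sum(f and d for f, d in pairs)          # flagged, and discriminatory
    fp = sum(f and not d for f, d in pairs)      # flagged, but fair
    fn = sum(not f and d for f, d in pairs)      # missed discrimination
    tn = sum(not f and not d for f, d in pairs)  # correctly left alone

    def ratio(num, den):
        # Return None instead of dividing by zero on degenerate inputs.
        return num / den if den else None

    return {
        "precision": ratio(tp, tp + fp),  # "correct when they flag"
        "miss_rate": ratio(fn, fn + tp),  # share of discrimination missed
        "fpr": ratio(fp, fp + tn),        # fair predictions wrongly flagged
    }


# Toy data (hypothetical): six predictions, three flags.
toy_flagged = [True, True, False, False, True, False]
toy_truth = [True, False, True, False, False, False]
toy_result = flag_metrics(toy_flagged, toy_truth)
```

Precision and miss rate answer different questions: the first is about the flags people raise, the second about the discriminatory predictions they never flag.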

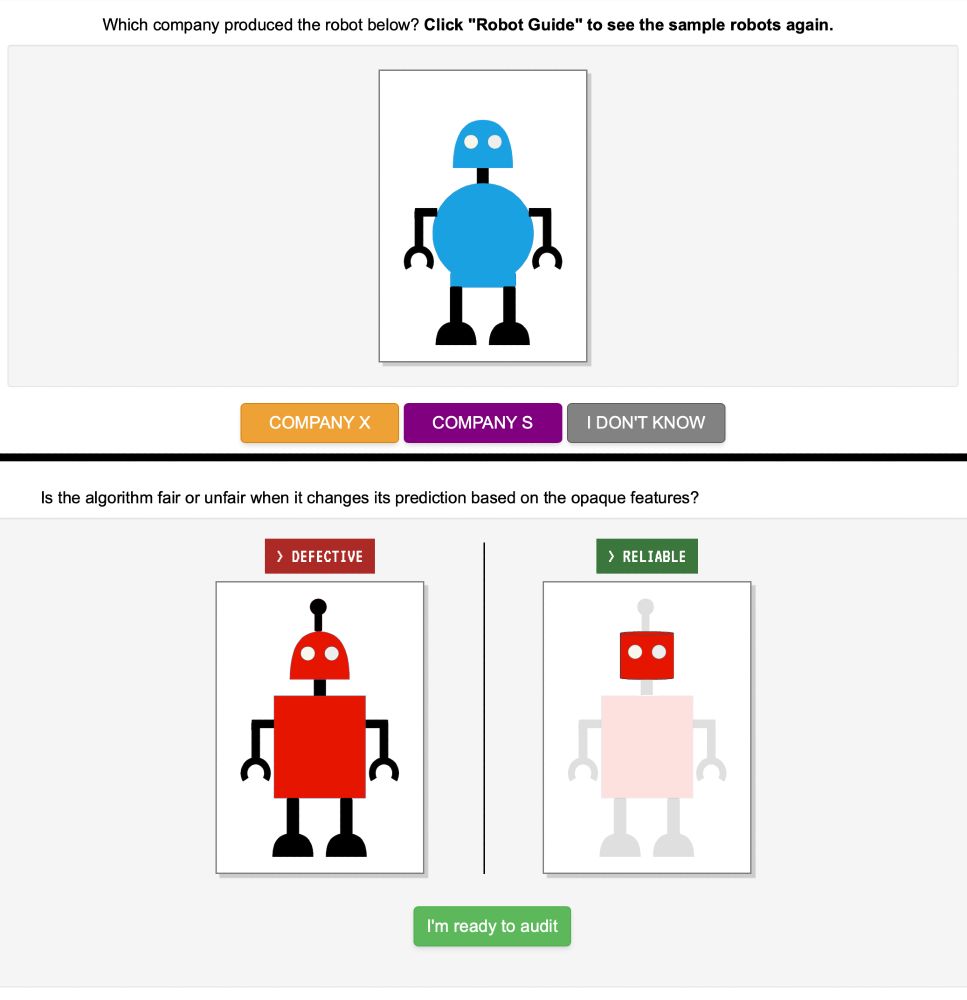

We then saw how well they could flag unfair predictions based on counterfactual explanations and feature attribution scores.

Our model predicts failure based on robot body parts. It can discriminate against Company X by predicting that robots without an antenna fail.

Would a rejected female applicant get approved if she somehow applied as a man?

If yes, her prediction was discriminatory.

Fairness requires predictions to stay the same regardless of the protected class.
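
A minimal sketch of this flip test, assuming the model is exposed as a plain function over a dict of features. `biased_model`, `fair_model`, and the applicant fields are hypothetical. The sketch changes only the protected attribute itself and does not account for proxies.

```python
def is_discriminatory(predict, applicant, protected_key, counterfactual_value):
    """Return True if changing only the protected attribute changes the prediction."""
    counterfactual = {**applicant, protected_key: counterfactual_value}
    return predict(applicant) != predict(counterfactual)


def biased_model(applicant):
    # Hypothetical rule that uses the protected attribute directly.
    return applicant["income"] >= 50 and applicant["gender"] == "male"


def fair_model(applicant):
    # Hypothetical rule that ignores the protected attribute.
    return applicant["income"] >= 50


# Hypothetical applicant: same income, only the gender field is flipped.
applicant = {"gender": "female", "income": 60}
biased_flag = is_discriminatory(biased_model, applicant, "gender", "male")
fair_flag = is_discriminatory(fair_model, applicant, "gender", "male")
```

Here `biased_flag` is true because the same applicant is rejected as a woman and approved as a man, while `fair_flag` is false because the prediction does not move.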

But is there any evidence for that?

In our latest work w/ David Danks and @berkustun, we show that explanations fail to help people, even under optimal conditions.

PDF shorturl.at/yaRua
