claude.site/artifacts/f8...

Try it yourself — What stands out to you? 👀

- Trump's language was heavy on negation and control ("don't," "not," "no").
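A minimal sketch of how a negation count like this might be done, assuming a plain word-frequency approach. The thread doesn't say how the analysis was actually run. The marker set holds only the three words quoted above, and `negation_profile` is a hypothetical helper name.

```python
import re
from collections import Counter

# Hypothetical marker set: only the three words quoted in the post; extend as needed.
NEGATION_MARKERS = {"don't", "not", "no"}

def negation_profile(text: str) -> tuple[Counter, float]:
    """Count negation markers and return them plus their share of all word tokens."""
    # Normalize curly apostrophes so "don’t" and "don't" count as the same token.
    normalized = text.lower().replace("\u2019", "'")
    # Split into word tokens, keeping apostrophes inside words.
    tokens = re.findall(r"[a-z']+", normalized)
    counts = Counter(t for t in tokens if t in NEGATION_MARKERS)
    share = sum(counts.values()) / len(tokens) if tokens else 0.0
    return counts, share

counts, share = negation_profile("No, we do not want that. Don't do it.")
print(counts, f"{share:.2%}")
```

Comparing this share across speakers (rather than raw counts) keeps longer transcripts from looking more negative just because they contain more words.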

Key Insights in thread

Alternatively, do your own agentic or chain-of-thought prompting. Or go full pro with #dspy or #adalflow for automatic prompt optimization.

R² is

0.845 for GPT-4o mini

0.714 for Qwen2.5 Coder 32B
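The thread doesn't say exactly how R² was computed. A common definition is the coefficient of determination, R² = 1 − SS_res / SS_tot, which compares model outputs against reference values. The sketch below assumes that definition; the function name and sample numbers are made up for illustration.

```python
def r_squared(y_true: list[float], y_pred: list[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean = sum(y_true) / len(y_true)
    # Residual sum of squares: how far predictions are from the reference values.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: spread of the reference values around their mean.
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R² is undefined when all reference values are equal")
    return 1.0 - ss_res / ss_tot

# Hypothetical reference scores vs. scores produced by an LLM.
print(round(r_squared([1, 2, 3, 4], [1.1, 1.9, 3.2, 3.8]), 3))
```

If the thread instead reports the squared Pearson correlation from a linear fit, the numbers can differ from this definition, so comparisons only hold when every model is scored the same way.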

Many more comparisons to follow :)

Do you know a good LLM for European languages?

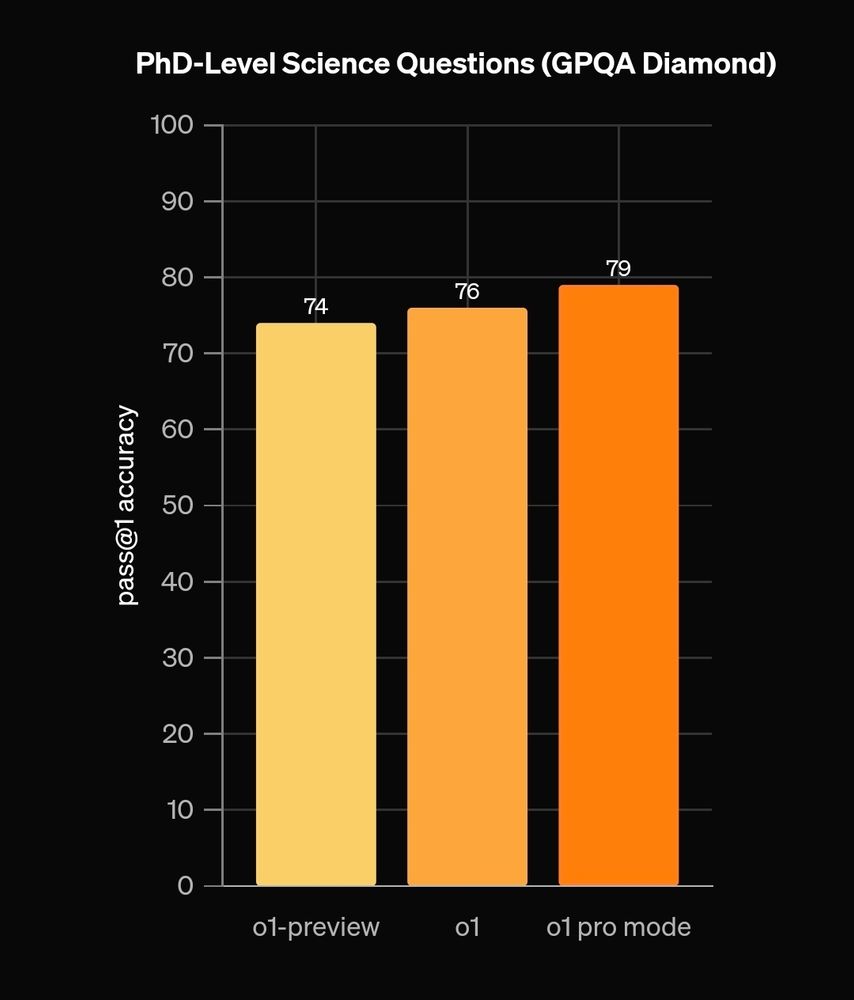

IMHO, this could help make research more efficient, especially since they used older models to achieve this!

www.nature.com/articles/s41...

Could it be because it's often better than OpenAI's models? For coding, it's my go-to model.

@alanthompson.net