claude.site/artifacts/f8...

Try it yourself — What stands out to you? 👀

Key Insights in thread

Paper: huggingface.co/papers/2410....

NONE!

Alternatively, do your own agentic or chain-of-thought prompting. Or go full professional with #dspy or #adalflow for automatic prompt optimization.

R squared is

0.845 for GPT-4o mini

0.714 for Qwen2.5 Coder 32B

Many more comparisons to follow :)
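For reference, R squared (the coefficient of determination) is 1 - SS_res / SS_tot: the residual sum of squares of the predictions, divided by the total sum of squares around the mean of the reference values. The thread doesn't say what was regressed against what, so this minimal sketch simply assumes a list of reference scores and a list of model-predicted scores. The function name `r_squared` and the sample numbers are hypothetical and used only for illustration.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: how far the predictions miss the reference values
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: spread of the reference values around their mean
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R squared is undefined when all reference values are equal")
    return 1 - ss_res / ss_tot


# Hypothetical reference scores vs. scores predicted by some model
reference = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
print(round(r_squared(reference, predicted), 3))
```

A value of 1.0 means the predictions match the reference exactly. Values closer to 0 mean they explain little more of the variance than always guessing the mean.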

Do you know a good LLM for European languages?

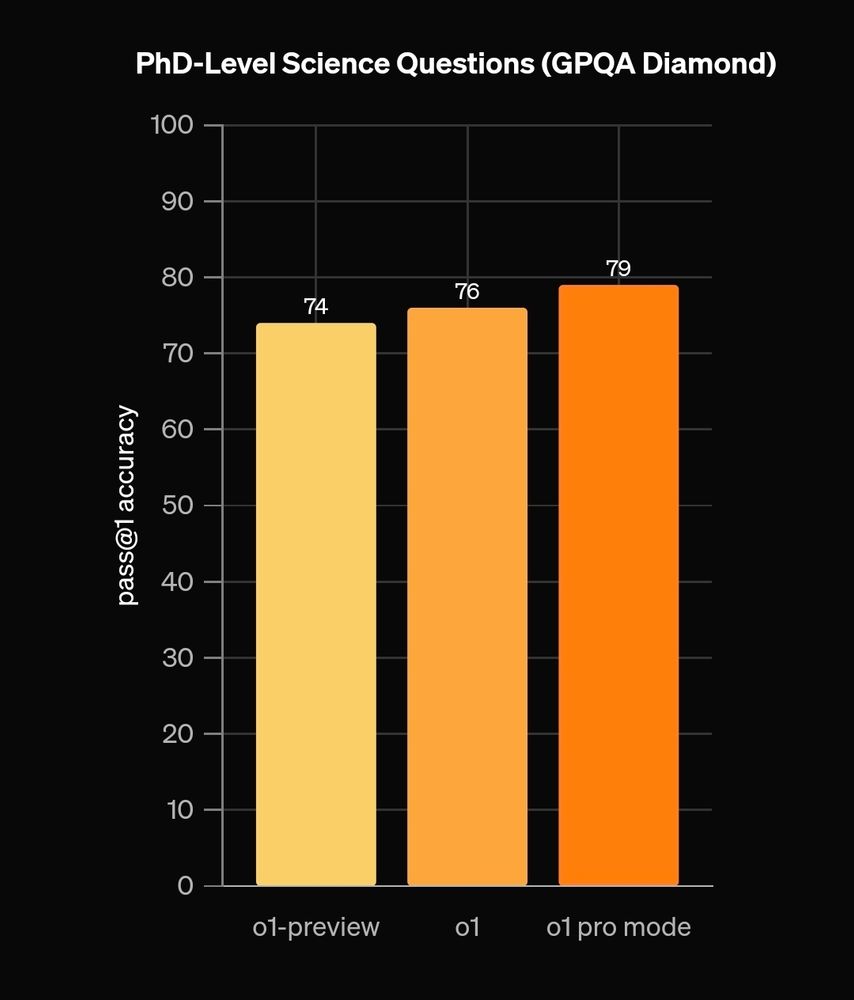

IMHO, this could help make research more efficient, especially since they used older models to achieve it!

www.nature.com/articles/s41...

Could it be because it's often better than OpenAI? For coding, it's my go-to model.

@alanthompson.net

Paper: arxiv.org/abs/2305.18466

Video: www.youtube.com/watch?v=vei7...

Prompt engineering can mitigate this, but it's certainly different from the davinci days (GPT-3) of old-school completion.

While you are drinking wine and drowning in turkey, I would encourage folks to think about:

What if Bluesky really is our shot at breaking the web2/socmedia stranglehold on collective sensemaking...

What kinds of trillionaire and sovereign attacks are coming?