Rising third-year undergrad at the University of Chicago, working on LLM tool use, evaluation, and hypothesis generation.

✅ Works across out-of-distribution (OOD) tasks

✅ Generated hypotheses transfer across LLMs (e.g., GPT-4o-mini ↔ Llama-3.3-70B)

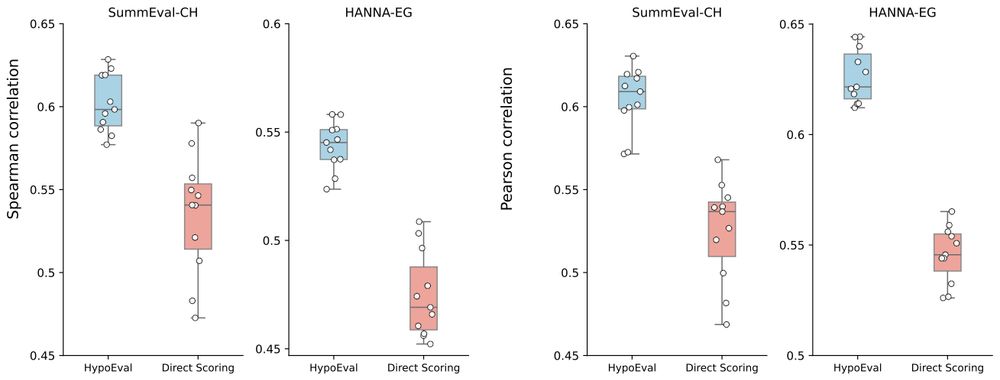

✅ Reduces sensitivity to prompt variations compared to direct scoring

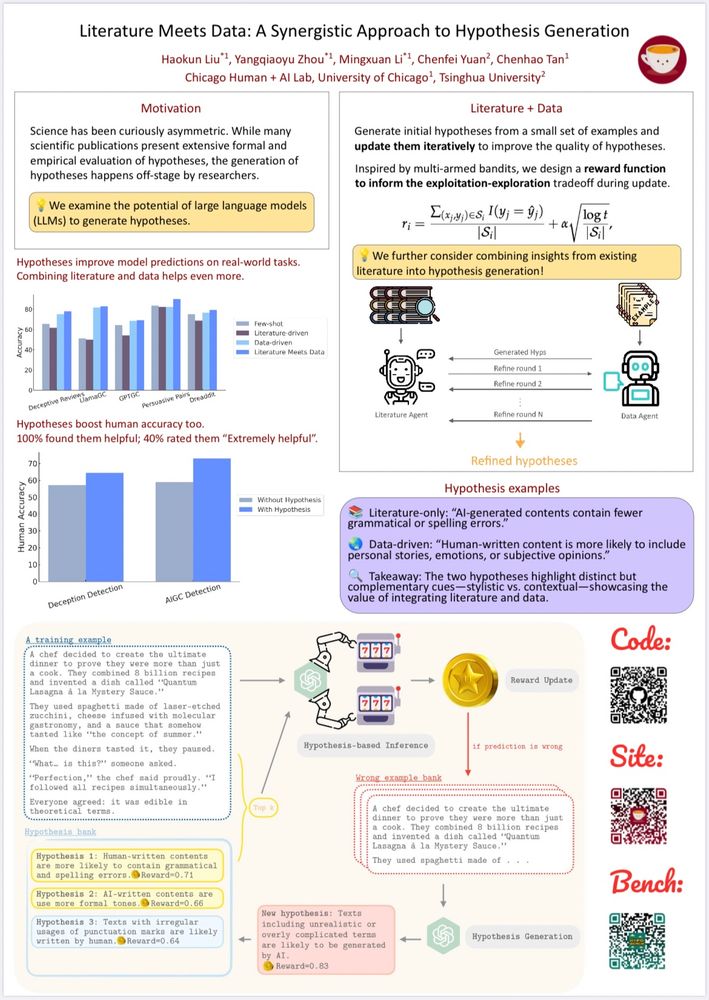

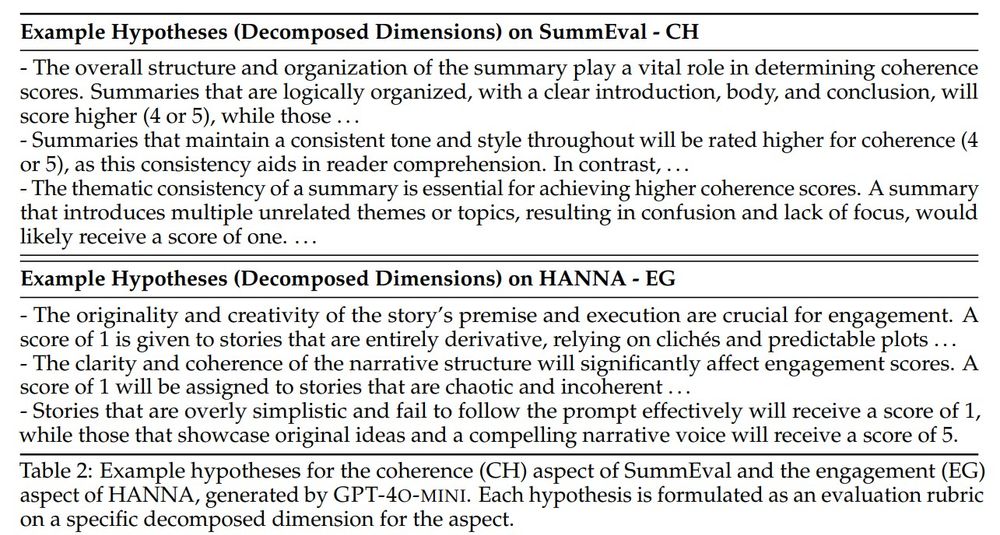

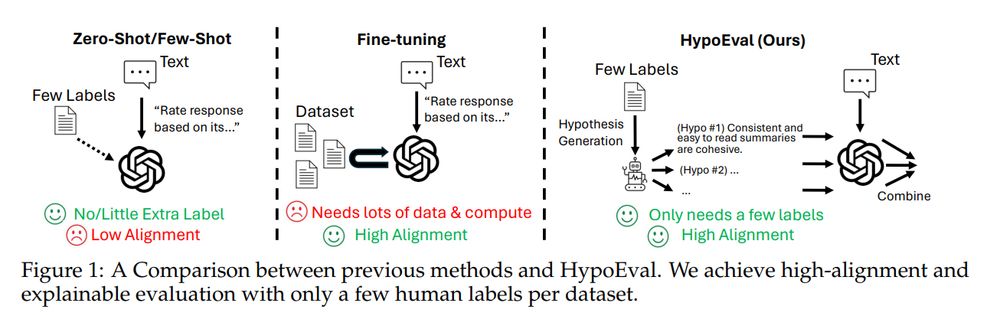

Building on state-of-the-art hypothesis generation methods, we generate hypotheses in the form of decomposed rubrics (similar to checklists, but more systematic and explainable) from existing literature and just 30 human-annotated text scores.
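
To make the idea of a decomposed rubric concrete, here is a minimal sketch of how a text might be scored against one. It is an illustration under stated assumptions, not the project's implementation: the names `Criterion` and `rubric_score`, the weighted-fraction aggregation, and the keyword checks (standing in for per-criterion LLM judgments) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Criterion:
    """One rubric item: a hypothesis about what makes a text score well."""
    description: str
    check: Callable[[str], bool]  # hypothetical stand-in for an LLM judgment
    weight: float = 1.0


def rubric_score(text: str, rubric: List[Criterion]) -> float:
    """Return the weighted fraction of criteria the text satisfies (0.0 to 1.0)."""
    total = sum(c.weight for c in rubric)
    if total <= 0:
        raise ValueError("rubric must have positive total weight")
    met = sum(c.weight for c in rubric if c.check(text))
    return met / total


# Toy rubric: keyword checks replace model calls so the sketch runs offline.
rubric = [
    Criterion("Gives a reason for its claim", lambda t: "because" in t.lower()),
    Criterion("Cites evidence", lambda t: "study" in t.lower(), weight=2.0),
]

if __name__ == "__main__":
    print(rubric_score("It works because a study showed it.", rubric))
```

Because each criterion is judged separately, the final score can be traced back to the specific rubric items that were met or missed, which is one way a rubric can be more explainable than a single direct score.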
