Rising third-year undergrad at the University of Chicago, working on LLM tool use, evaluation, and hypothesis generation.

Huge thanks to my wonderful collaborator Hanchen Li and my advisor @chenhaotan.bsky.social!

Check out the full paper at arxiv.org/abs/2504.07174

This is a sample-efficient method for LLM-as-a-judge, grounded in human judgments, paving the way for personalized evaluators and alignment!

We have released two repositories for HypoEval:

For replicating results and building on them: github.com/ChicagoHAI/H...

For off-the-shelf 0-shot evaluators for summaries and stories🚀: github.com/ChicagoHAI/H...

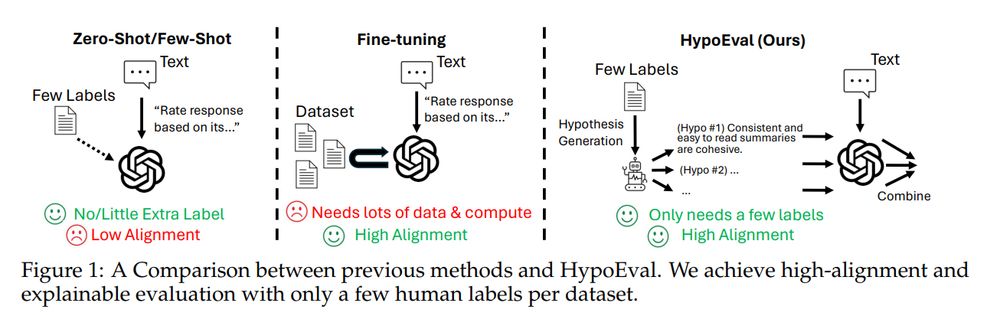

We push forward LLM-as-a-judge research by showing you can get:

Sample efficiency

Interpretable automated evaluation

Strong human alignment

…without massive fine-tuning.

Dropping hypothesis generation → performance drops ~7%

Combining all hypotheses into one criterion → performance drops ~8% (Better to let LLMs rate one sub-dimension at a time!)

✅ Works across out-of-distribution (OOD) tasks

✅ Generated hypotheses can be transferred to different LLMs (e.g., GPT-4o-mini ↔ LLAMA-3.3-70B)

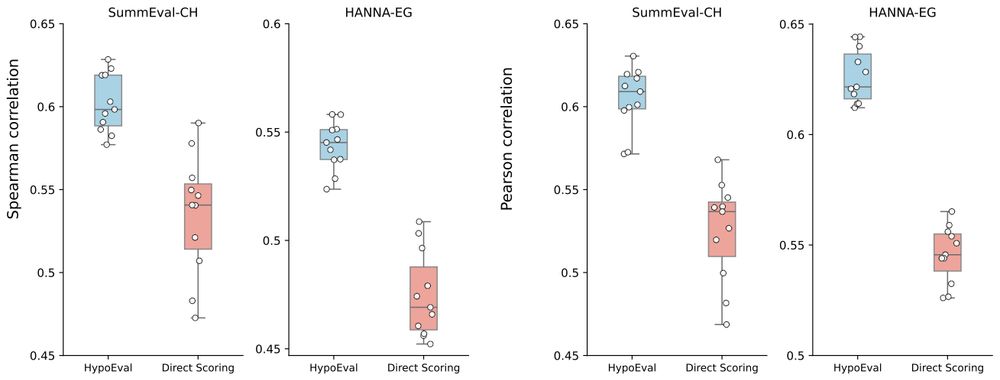

✅ Reduces sensitivity to prompt variations compared to direct scoring

Across summarization (SummEval, NewsRoom) and story generation (HANNA, WritingPrompt), we show state-of-the-art correlations with human judgments, for both rankings (Spearman correlation) and scores (Pearson correlation)! 📈
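For anyone less familiar with the two metrics, here is a minimal sketch of how they differ. The scores are made up for illustration and the helper names are my own, not code from the HypoEval repositories. Spearman only looks at whether the judge orders texts the same way humans do, while Pearson also cares about whether the scores line up linearly.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation: linear agreement between raw scores."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Made-up scores for five texts, for illustration only.
human_scores = [1.0, 2.0, 3.0, 4.0, 5.0]
judge_scores = [1.0, 4.0, 9.0, 16.0, 25.0]  # same ordering, non-linear scale

print(f"Spearman: {spearman(human_scores, judge_scores):.3f}")
print(f"Pearson:  {pearson(human_scores, judge_scores):.3f}")
```

Here the judge ranks every text exactly as the humans do, so Spearman is 1, while Pearson comes out slightly lower because the judge's scale is not linear in the human scores.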

By combining small-scale human data + literature + non-binary checklists, HypoEval:

🔹 Outperforms G-Eval by ~12%

🔹 Beats fine-tuned models that use 3x more human labels

🔹 Adds interpretable evaluation

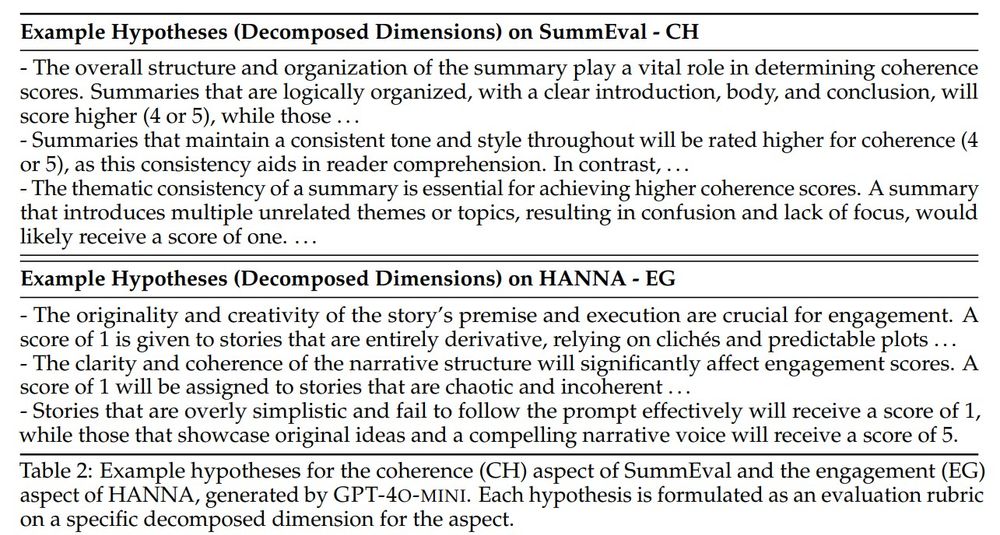

Building upon SOTA hypothesis generation methods, we generate hypotheses (decomposed rubrics, similar to checklists but more systematic and explainable) from existing literature and just 30 human annotations (scores) of texts.
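To make "decomposed rubrics" concrete, here is a rough sketch of the general idea: rate a text on one hypothesis at a time, then combine the per-dimension scores. Everything in it is an assumption for illustration: the `Hypothesis` class, the rubric wording, the `stub_rater` standing in for an LLM call, and the plain mean used to combine scores. It is not the HypoEval implementation.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass(frozen=True)
class Hypothesis:
    """One decomposed rubric item (wording invented for illustration)."""
    dimension: str
    rubric: str


# Hypothetical rater signature: (text, hypothesis) -> score on a 1-5 scale.
Rater = Callable[[str, Hypothesis], float]


def evaluate(text: str, hypotheses: list[Hypothesis], rate: Rater) -> dict:
    """Rate the text on each hypothesis separately, then combine the scores."""
    per_dimension = {h.dimension: rate(text, h) for h in hypotheses}
    # Assumption: a plain mean; the paper may combine scores differently.
    overall = mean(per_dimension.values())
    return {"per_dimension": per_dimension, "overall": overall}


def stub_rater(text: str, hypothesis: Hypothesis) -> float:
    """Stand-in for an LLM call so the sketch runs offline."""
    fixed = {"coverage": 4.0, "faithfulness": 5.0, "concision": 3.0}
    return fixed[hypothesis.dimension]


hypotheses = [
    Hypothesis("coverage", "Covers the main events of the source."),
    Hypothesis("faithfulness", "States nothing the source does not support."),
    Hypothesis("concision", "Avoids repeating or padding information."),
]

result = evaluate("An example summary.", hypotheses, stub_rater)
print(result["per_dimension"])
print(result["overall"])
```

Keeping the per-dimension scores around is what makes the final number explainable: you can see which rubric item pulled the overall score up or down.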

Most LLM-as-a-judge approaches:

❌ Achieve lower alignment with humans

⚙️ Require extensive fine-tuning -> expensive data and compute

❓ Lack interpretability
